Original impl

Realised the original implementation isn’t here. It’s pretty hairy at the moment, but it is essentially an auto-promise wrapper. It works with all classes (except that named args don’t work, which is why I started this thread) and just calls wait if needed. It’s definitely a work in progress.
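The "calls wait if needed" part can be sketched on its own, without any of the wrapper classes. This is only a minimal illustration, assuming SuperCollider 3.12 or later (where CondVar is available); the names `cond`, `ready` and `value` are made up for the example, and the timer stands in for a server reply.

```supercollider
(
var cond = CondVar();
var ready = false;
var value;

// Stand-in for an asynchronous server reply: fills in the value after 0.5 s.
SystemClock.sched(0.5, {
    value = 42;
    ready = true;
    cond.signalAll; // wake every thread waiting on the condition
    nil // returning nil means: do not reschedule
});

fork {
    // Only block if the value is not there yet.
    if(ready.not) { cond.wait({ ready }) };
    value.postln;
};
)
```

The implementation below does the same thing, except that the check-and-wait step is stored in impl_preFunc and run before every forwarded method call.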

Impl

ObjectPreCaller {

var <>impl_underlyingObject;

var <>impl_preFunc;

// DO NOT WAIT ON THIS METHOD AS THE INTERPRETER USES IT TO PRINT

asString { |limit| ^impl_underlyingObject.asString(limit) }

class { ^impl_underlyingObject.class() }

dump { impl_preFunc.(); ^impl_underlyingObject.dump() }

post { impl_preFunc.(); ^impl_underlyingObject.post()}

postln { impl_preFunc.(); ^impl_underlyingObject.postln()}

postc { impl_preFunc.(); ^impl_underlyingObject.postc()}

postcln { impl_preFunc.(); ^impl_underlyingObject.postcln()}

postcs { impl_preFunc.(); ^impl_underlyingObject.postcs()}

totalFree { impl_preFunc.(); ^impl_underlyingObject.totalFree() }

largestFreeBlock { impl_preFunc.(); ^impl_underlyingObject.largestFreeBlock() }

gcDumpGrey { impl_preFunc.(); ^impl_underlyingObject.gcDumpGrey() }

gcDumpSet { impl_preFunc.(); ^impl_underlyingObject.gcDumpSet() }

gcInfo { impl_preFunc.(); ^impl_underlyingObject.gcInfo() }

gcSanity { impl_preFunc.(); ^impl_underlyingObject.gcSanity() }

canCallOS { impl_preFunc.(); ^impl_underlyingObject.canCallOS() }

size { impl_preFunc.(); ^impl_underlyingObject.size()}

indexedSize { impl_preFunc.(); ^impl_underlyingObject.indexedSize()}

flatSize { impl_preFunc.(); ^impl_underlyingObject.flatSize()}

functionPerformList { impl_preFunc.(); ^impl_underlyingObject.functionPerformList() }

copy { impl_preFunc.(); ^impl_underlyingObject.copy()}

contentsCopy { impl_preFunc.(); ^impl_underlyingObject.contentsCopy()}

shallowCopy { impl_preFunc.(); ^impl_underlyingObject.shallowCopy()}

copyImmutable { impl_preFunc.(); ^impl_underlyingObject.copyImmutable() }

deepCopy { impl_preFunc.(); ^impl_underlyingObject.deepCopy() }

poll { impl_preFunc.(); ^impl_underlyingObject.poll()}

value { impl_preFunc.(); ^impl_underlyingObject.value()}

valueArray { impl_preFunc.(); ^impl_underlyingObject.valueArray()}

valueEnvir { impl_preFunc.(); ^impl_underlyingObject.valueEnvir()}

valueArrayEnvir { impl_preFunc.(); ^impl_underlyingObject.valueArrayEnvir()}

basicHash { impl_preFunc.(); ^impl_underlyingObject.basicHash()}

hash { impl_preFunc.(); ^impl_underlyingObject.hash()}

identityHash { impl_preFunc.(); ^impl_underlyingObject.identityHash()}

next { impl_preFunc.(); ^impl_underlyingObject.next()}

reset { impl_preFunc.(); ^impl_underlyingObject.reset()}

iter { impl_preFunc.(); ^impl_underlyingObject.iter()}

stop { impl_preFunc.(); ^impl_underlyingObject.stop()}

free { impl_preFunc.(); ^impl_underlyingObject.free()}

clear { impl_preFunc.(); ^impl_underlyingObject.clear()}

removedFromScheduler { impl_preFunc.(); ^impl_underlyingObject.removedFromScheduler()}

isPlaying { impl_preFunc.(); ^impl_underlyingObject.isPlaying()}

embedInStream { impl_preFunc.(); ^impl_underlyingObject.embedInStream()}

loop { impl_preFunc.(); ^impl_underlyingObject.loop()}

asStream { impl_preFunc.(); ^impl_underlyingObject.asStream()}

eventAt { impl_preFunc.(); ^impl_underlyingObject.eventAt()}

finishEvent { impl_preFunc.(); ^impl_underlyingObject.finishEvent()}

atLimit { impl_preFunc.(); ^impl_underlyingObject.atLimit()}

isRest { impl_preFunc.(); ^impl_underlyingObject.isRest()}

threadPlayer { impl_preFunc.(); ^impl_underlyingObject.threadPlayer()}

threadPlayer_ { impl_preFunc.(); ^impl_underlyingObject.threadPlayer_()}

isNil { impl_preFunc.(); ^impl_underlyingObject.isNil()}

notNil { impl_preFunc.(); ^impl_underlyingObject.notNil()}

isNumber { impl_preFunc.(); ^impl_underlyingObject.isNumber()}

isInteger { impl_preFunc.(); ^impl_underlyingObject.isInteger()}

isFloat { impl_preFunc.(); ^impl_underlyingObject.isFloat()}

isSequenceableCollection { impl_preFunc.(); ^impl_underlyingObject.isSequenceableCollection()}

isCollection { impl_preFunc.(); ^impl_underlyingObject.isCollection()}

isArray { impl_preFunc.(); ^impl_underlyingObject.isArray()}

isString { impl_preFunc.(); ^impl_underlyingObject.isString()}

containsSeqColl { impl_preFunc.(); ^impl_underlyingObject.containsSeqColl()}

isValidUGenInput { impl_preFunc.(); ^impl_underlyingObject.isValidUGenInput()}

isException { impl_preFunc.(); ^impl_underlyingObject.isException()}

isFunction { impl_preFunc.(); ^impl_underlyingObject.isFunction()}

trueAt { impl_preFunc.(); ^impl_underlyingObject.trueAt()}

mutable { impl_preFunc.(); ^impl_underlyingObject.mutable()}

frozen { impl_preFunc.(); ^impl_underlyingObject.frozen()}

halt { impl_preFunc.(); ^impl_underlyingObject.halt() }

prHalt { impl_preFunc.(); ^impl_underlyingObject.prHalt() }

primitiveFailed { impl_preFunc.(); ^impl_underlyingObject.primitiveFailed() }

reportError { impl_preFunc.(); ^impl_underlyingObject.reportError() }

mustBeBoolean { impl_preFunc.(); ^impl_underlyingObject.mustBeBoolean()}

notYetImplemented { impl_preFunc.(); ^impl_underlyingObject.notYetImplemented()}

dumpBackTrace { impl_preFunc.(); ^impl_underlyingObject.dumpBackTrace() }

getBackTrace { impl_preFunc.(); ^impl_underlyingObject.getBackTrace() }

throw { impl_preFunc.(); ^impl_underlyingObject.throw() }

species { impl_preFunc.(); ^impl_underlyingObject.species()}

asCollection { impl_preFunc.(); ^impl_underlyingObject.asCollection()}

asSymbol { impl_preFunc.(); ^impl_underlyingObject.asSymbol()}

asCompileString { impl_preFunc.(); ^impl_underlyingObject.asCompileString() }

cs { impl_preFunc.(); ^impl_underlyingObject.cs()}

storeArgs { impl_preFunc.(); ^impl_underlyingObject.storeArgs()}

dereference { impl_preFunc.(); ^impl_underlyingObject.dereference()}

reference { impl_preFunc.(); ^impl_underlyingObject.reference()}

asRef { impl_preFunc.(); ^impl_underlyingObject.asRef()}

dereferenceOperand { impl_preFunc.(); ^impl_underlyingObject.dereferenceOperand()}

asArray { impl_preFunc.(); ^impl_underlyingObject.asArray()}

asSequenceableCollection { impl_preFunc.(); ^impl_underlyingObject.asSequenceableCollection()}

rank { impl_preFunc.(); ^impl_underlyingObject.rank()}

slice { impl_preFunc.(); ^impl_underlyingObject.slice()}

shape { impl_preFunc.(); ^impl_underlyingObject.shape()}

unbubble { impl_preFunc.(); ^impl_underlyingObject.unbubble()}

yield { impl_preFunc.(); ^impl_underlyingObject.yield() }

alwaysYield { impl_preFunc.(); ^impl_underlyingObject.alwaysYield() }

dependants { impl_preFunc.(); ^impl_underlyingObject.dependants() }

release { impl_preFunc.(); ^impl_underlyingObject.release() }

releaseDependants { impl_preFunc.(); ^impl_underlyingObject.releaseDependants() }

removeUniqueMethods { impl_preFunc.(); ^impl_underlyingObject.removeUniqueMethods() }

inspect { impl_preFunc.(); ^impl_underlyingObject.inspect()}

inspectorClass { impl_preFunc.(); ^impl_underlyingObject.inspectorClass()}

inspector { impl_preFunc.(); ^impl_underlyingObject.inspector() }

crash { impl_preFunc.(); ^impl_underlyingObject.crash() }

stackDepth { impl_preFunc.(); ^impl_underlyingObject.stackDepth() }

dumpStack { impl_preFunc.(); ^impl_underlyingObject.dumpStack() }

dumpDetailedBackTrace { impl_preFunc.(); ^impl_underlyingObject.dumpDetailedBackTrace() }

freeze { impl_preFunc.(); ^impl_underlyingObject.freeze() }

beats_ { impl_preFunc.(); ^impl_underlyingObject.beats_() }

isUGen { impl_preFunc.(); ^impl_underlyingObject.isUGen()}

numChannels { impl_preFunc.(); ^impl_underlyingObject.numChannels()}

clock_ { impl_preFunc.(); ^impl_underlyingObject.clock_() }

asTextArchive { impl_preFunc.(); ^impl_underlyingObject.asTextArchive() }

asBinaryArchive { impl_preFunc.(); ^impl_underlyingObject.asBinaryArchive() }

help { impl_preFunc.(); ^impl_underlyingObject.help()}

asArchive { impl_preFunc.(); ^impl_underlyingObject.asArchive() }

initFromArchive { impl_preFunc.(); ^impl_underlyingObject.initFromArchive()}

archiveAsCompileString { impl_preFunc.(); ^impl_underlyingObject.archiveAsCompileString()}

archiveAsObject { impl_preFunc.(); ^impl_underlyingObject.archiveAsObject()}

checkCanArchive { impl_preFunc.(); ^impl_underlyingObject.checkCanArchive()}

isInputUGen { impl_preFunc.(); ^impl_underlyingObject.isInputUGen()}

isOutputUGen { impl_preFunc.(); ^impl_underlyingObject.isOutputUGen()}

isControlUGen { impl_preFunc.(); ^impl_underlyingObject.isControlUGen()}

source { impl_preFunc.(); ^impl_underlyingObject.source()}

asUGenInput { impl_preFunc.(); ^impl_underlyingObject.asUGenInput()}

asControlInput { impl_preFunc.(); ^impl_underlyingObject.asControlInput()}

asAudioRateInput { impl_preFunc.(); ^impl_underlyingObject.asAudioRateInput()}

slotSize { impl_preFunc.(); ^impl_underlyingObject.slotSize() }

getSlots { impl_preFunc.(); ^impl_underlyingObject.getSlots() }

instVarSize { impl_preFunc.(); ^impl_underlyingObject.instVarSize()}

do { arg function; impl_preFunc.(); ^impl_underlyingObject.do( function ); }

generate { arg function, state; impl_preFunc.(); ^impl_underlyingObject.generate( function, state ); }

isKindOf { arg aClass; impl_preFunc.(); ^impl_underlyingObject.isKindOf( aClass ); }

isMemberOf { arg aClass; impl_preFunc.(); ^impl_underlyingObject.isMemberOf( aClass ); }

respondsTo { arg aSymbol; impl_preFunc.(); ^impl_underlyingObject.respondsTo( aSymbol ); }

performMsg { arg msg; impl_preFunc.(); ^impl_underlyingObject.performMsg( msg ); }

perform { arg selector ... args; impl_preFunc.(); ^impl_underlyingObject.perform( selector, *args ); }

performList { arg selector, arglist; impl_preFunc.(); ^impl_underlyingObject.performList( selector, arglist ); }

superPerform { arg selector ... args; impl_preFunc.(); ^impl_underlyingObject.superPerform( selector, *args ); }

superPerformList { arg selector, arglist; impl_preFunc.(); ^impl_underlyingObject.superPerformList( selector, arglist ); }

tryPerform { arg selector ... args; impl_preFunc.(); ^impl_underlyingObject.tryPerform( selector, *args ); }

multiChannelPerform { arg selector ... args; impl_preFunc.(); ^impl_underlyingObject.multiChannelPerform( selector, *args ); }

performWithEnvir { arg selector, envir; impl_preFunc.(); ^impl_underlyingObject.performWithEnvir( selector, envir ); }

performKeyValuePairs { arg selector, pairs; impl_preFunc.(); ^impl_underlyingObject.performKeyValuePairs( selector, pairs ); }

dup { arg n ; impl_preFunc.(); ^impl_underlyingObject.dup( n ); }

! { arg n; impl_preFunc.(); ^(impl_underlyingObject ! n); }

== { arg obj; impl_preFunc.(); ^(impl_underlyingObject == obj); }

!= { arg obj; impl_preFunc.(); ^(impl_underlyingObject != obj); }

=== { arg obj; impl_preFunc.(); ^(impl_underlyingObject === obj); }

!== { arg obj; impl_preFunc.(); ^(impl_underlyingObject !== obj); }

equals { arg that, properties; impl_preFunc.(); ^impl_underlyingObject.equals( that, properties ); }

compareObject { arg that, instVarNames; impl_preFunc.(); ^impl_underlyingObject.compareObject( that, instVarNames ); }

instVarHash { arg instVarNames; impl_preFunc.(); ^impl_underlyingObject.instVarHash( instVarNames ); }

|==| { arg that; impl_preFunc.(); ^(impl_underlyingObject |==| that); }

|!=| { arg that; impl_preFunc.(); ^(impl_underlyingObject |!=| that); }

prReverseLazyEquals { arg that; impl_preFunc.(); ^impl_underlyingObject.prReverseLazyEquals( that ); }

-> { arg obj; impl_preFunc.(); ^(impl_underlyingObject -> obj); }

first { arg inval; impl_preFunc.(); ^impl_underlyingObject.first( inval ); }

cyc { arg n; impl_preFunc.(); ^impl_underlyingObject.cyc( n ); }

fin { arg n; impl_preFunc.(); ^impl_underlyingObject.fin( n ); }

repeat { arg repeats; impl_preFunc.(); ^impl_underlyingObject.repeat( repeats ); }

nextN { arg n, inval; impl_preFunc.(); ^impl_underlyingObject.nextN( n, inval ); }

streamArg { arg embed; impl_preFunc.(); ^impl_underlyingObject.streamArg( embed ); }

composeEvents { arg event; impl_preFunc.(); ^impl_underlyingObject.composeEvents( event ); }

? { arg obj; impl_preFunc.(); ^(impl_underlyingObject ? obj); }

?? { arg obj; impl_preFunc.(); ^(impl_underlyingObject ?? obj); }

!? { arg obj; impl_preFunc.(); ^(impl_underlyingObject !? obj); }

matchItem { arg item; impl_preFunc.(); ^impl_underlyingObject.matchItem( item ); }

falseAt { arg key; impl_preFunc.(); ^impl_underlyingObject.falseAt( key ); }

pointsTo { arg obj; impl_preFunc.(); ^impl_underlyingObject.pointsTo( obj ); }

subclassResponsibility { arg method; impl_preFunc.(); ^impl_underlyingObject.subclassResponsibility( method ); }

doesNotUnderstand { arg selector ... args; impl_preFunc.(); ^impl_underlyingObject.doesNotUnderstand( selector, *args ); }

shouldNotImplement { arg method; impl_preFunc.(); ^impl_underlyingObject.shouldNotImplement( method ); }

outOfContextReturn { arg method, result; impl_preFunc.(); ^impl_underlyingObject.outOfContextReturn( method, result ); }

immutableError { arg value; impl_preFunc.(); ^impl_underlyingObject.immutableError( value ); }

deprecated { arg method, alternateMethod; impl_preFunc.(); ^impl_underlyingObject.deprecated( method, alternateMethod ); }

printClassNameOn { arg stream; impl_preFunc.(); ^impl_underlyingObject.printClassNameOn( stream ); }

printOn { arg stream; impl_preFunc.(); ^impl_underlyingObject.printOn( stream ); }

storeOn { arg stream; impl_preFunc.(); ^impl_underlyingObject.storeOn( stream ); }

storeParamsOn { arg stream; impl_preFunc.(); ^impl_underlyingObject.storeParamsOn( stream ); }

simplifyStoreArgs { arg args; impl_preFunc.(); ^impl_underlyingObject.simplifyStoreArgs( args ); }

storeModifiersOn { arg stream; impl_preFunc.(); ^impl_underlyingObject.storeModifiersOn( stream ); }

as { arg aSimilarClass; impl_preFunc.(); ^impl_underlyingObject.as( aSimilarClass ); }

deepCollect { arg depth, function, index, rank ; impl_preFunc.(); ^impl_underlyingObject.deepCollect( depth, function, index , rank ); }

deepDo { arg depth, function, index , rank ; impl_preFunc.(); ^impl_underlyingObject.deepDo( depth, function, index , rank ); }

bubble { arg depth, levels; impl_preFunc.(); ^impl_underlyingObject.bubble( depth, levels); }

obtain { arg index, default; impl_preFunc.(); ^impl_underlyingObject.obtain( index, default ); }

instill { arg index, item, default; impl_preFunc.(); ^impl_underlyingObject.instill( index, item, default ); }

addFunc { arg ... functions; impl_preFunc.(); ^impl_underlyingObject.addFunc(*functions ); }

removeFunc { arg function; impl_preFunc.(); ^impl_underlyingObject.removeFunc( function ); }

replaceFunc { arg find, replace; impl_preFunc.(); ^impl_underlyingObject.replaceFunc( find, replace ); }

addFuncTo { arg variableName ... functions; impl_preFunc.(); ^impl_underlyingObject.addFuncTo( variableName, *functions ); }

removeFuncFrom { arg variableName, function; impl_preFunc.(); ^impl_underlyingObject.removeFuncFrom( variableName, function ); }

while { arg body; impl_preFunc.(); ^impl_underlyingObject.while( body ); }

switch { arg ... cases; impl_preFunc.(); ^impl_underlyingObject.switch(*cases ); }

yieldAndReset { arg reset ; impl_preFunc.(); ^impl_underlyingObject.yieldAndReset( reset ); }

idle { arg val; impl_preFunc.(); ^impl_underlyingObject.idle( val ); }

changed { arg what ... moreArgs; impl_preFunc.(); ^impl_underlyingObject.changed( what, *moreArgs ); }

addDependant { arg dependant; impl_preFunc.(); ^impl_underlyingObject.addDependant( dependant ); }

removeDependant { arg dependant; impl_preFunc.(); ^impl_underlyingObject.removeDependant( dependant ); }

update { arg theChanged, theChanger; impl_preFunc.(); ^impl_underlyingObject.update( theChanged, theChanger ); }

addUniqueMethod { arg selector, function; impl_preFunc.(); ^impl_underlyingObject.addUniqueMethod( selector, function ); }

removeUniqueMethod { arg selector; impl_preFunc.(); ^impl_underlyingObject.removeUniqueMethod( selector ); }

& { arg that; impl_preFunc.(); ^(impl_underlyingObject & that); }

| { arg that; impl_preFunc.(); ^(impl_underlyingObject | that); }

% { arg that; impl_preFunc.(); ^(impl_underlyingObject % that); }

** { arg that; impl_preFunc.(); ^(impl_underlyingObject ** that); }

<< { arg that; impl_preFunc.(); ^(impl_underlyingObject << that); }

>> { arg that; impl_preFunc.(); ^(impl_underlyingObject >> that); }

+>> { arg that; impl_preFunc.(); ^(impl_underlyingObject +>> that); }

<! { arg that; impl_preFunc.(); ^(impl_underlyingObject <! that); }

blend { arg that, blendFrac; impl_preFunc.(); ^impl_underlyingObject.blend( that, blendFrac ); }

blendAt { arg index, method; impl_preFunc.(); ^impl_underlyingObject.blendAt( index, method); }

blendPut { arg index, val, method; impl_preFunc.(); ^impl_underlyingObject.blendPut( index, val, method); }

fuzzyEqual { arg that, precision; impl_preFunc.(); ^impl_underlyingObject.fuzzyEqual( that, precision); }

pair { arg that; impl_preFunc.(); ^impl_underlyingObject.pair( that ); }

pairs { arg that; impl_preFunc.(); ^impl_underlyingObject.pairs( that ); }

awake { arg beats, seconds, clock; impl_preFunc.(); ^impl_underlyingObject.awake( beats, seconds, clock ); }

performBinaryOpOnSomething { arg aSelector, thing, adverb; impl_preFunc.(); ^impl_underlyingObject.performBinaryOpOnSomething( aSelector, thing, adverb ); }

performBinaryOpOnSimpleNumber { arg aSelector, thing, adverb; impl_preFunc.(); ^impl_underlyingObject.performBinaryOpOnSimpleNumber( aSelector, thing, adverb ); }

performBinaryOpOnSignal { arg aSelector, thing, adverb; impl_preFunc.(); ^impl_underlyingObject.performBinaryOpOnSignal( aSelector, thing, adverb ); }

performBinaryOpOnComplex { arg aSelector, thing, adverb; impl_preFunc.(); ^impl_underlyingObject.performBinaryOpOnComplex( aSelector, thing, adverb ); }

performBinaryOpOnSeqColl { arg aSelector, thing, adverb; impl_preFunc.(); ^impl_underlyingObject.performBinaryOpOnSeqColl( aSelector, thing, adverb ); }

performBinaryOpOnUGen { arg aSelector, thing, adverb; impl_preFunc.(); ^impl_underlyingObject.performBinaryOpOnUGen( aSelector, thing, adverb ); }

writeDefFile { arg name, dir, overwrite; impl_preFunc.(); ^impl_underlyingObject.writeDefFile( name, dir, overwrite ); }

slotAt { arg index; impl_preFunc.(); ^impl_underlyingObject.slotAt( index ); }

slotPut { arg index, value; impl_preFunc.(); ^impl_underlyingObject.slotPut( index, value ); }

slotKey { arg index; impl_preFunc.(); ^impl_underlyingObject.slotKey( index ); }

slotIndex { arg key; impl_preFunc.(); ^impl_underlyingObject.slotIndex( key ); }

slotsDo { arg function; impl_preFunc.(); ^impl_underlyingObject.slotsDo( function ); }

slotValuesDo { arg function; impl_preFunc.(); ^impl_underlyingObject.slotValuesDo( function ); }

setSlots { arg array; impl_preFunc.(); ^impl_underlyingObject.setSlots( array ); }

instVarAt { arg index; impl_preFunc.(); ^impl_underlyingObject.instVarAt( index ); }

instVarPut { arg index, item; impl_preFunc.(); ^impl_underlyingObject.instVarPut( index, item ); }

writeArchive { arg pathname; impl_preFunc.(); ^impl_underlyingObject.writeArchive( pathname ); }

writeTextArchive { arg pathname; impl_preFunc.(); ^impl_underlyingObject.writeTextArchive( pathname ); }

getContainedObjects { arg objects; impl_preFunc.(); ^impl_underlyingObject.getContainedObjects( objects ); }

writeBinaryArchive { arg pathname; impl_preFunc.(); ^impl_underlyingObject.writeBinaryArchive( pathname ); }

}

AutoPromise : ObjectPreCaller {

var <>priv_condVar;

var <>priv_isSafe;

*new{

var self = super.new()

.priv_isSafe_(false)

.priv_condVar_(CondVar());

self.impl_preFunc = {

if(self.priv_isSafe.not, {

try

{ self.priv_condVar.wait({ self.priv_isSafe }) }

{ |er|

if((er.class == PrimitiveFailedError) && (er.failedPrimitiveName == '_RoutineYield'),

{ AutoPromise.prGenerateError(self.class.name).error.throw },

{ er.throw } // some other error

)

}

})

};

^self

}

// only call this once in normal use

impl_addUnderlyingObject { |obj|

impl_underlyingObject = obj

}

impl_markSafe {

priv_isSafe = true;

priv_condVar.signalAll;

}

// used to wrap the functions that the child class explicitly defines

doesNotUnderstand { |selector ... args|

this.impl_preFunc.();

^this.impl_underlyingObject.perform(selector.asSymbol, *args)

}

*prGenerateError { |className|

^className ++ "'s value has not completed,"

+ "either use it in a Routine/Thread, or,"

+ "literally wait until the resource has loaded and try again"

}

}

+ Buffer {

*readAP { |server, path, startFrame=0, numFrames=(-1), action|

var r = AutoPromise();

var buffer = Buffer.read(

server: server,

path: path,

startFrame: startFrame,

numFrames: numFrames,

action: { |buf|

r.impl_markSafe();

action !? {action.(buf)};

}

);

r.impl_addUnderlyingObject(buffer);

^r

}

at {|index|

var r = AutoPromise();

this.get(index, action: {|v|

r.impl_addUnderlyingObject(v);

r.impl_markSafe();

});

^r

}

}

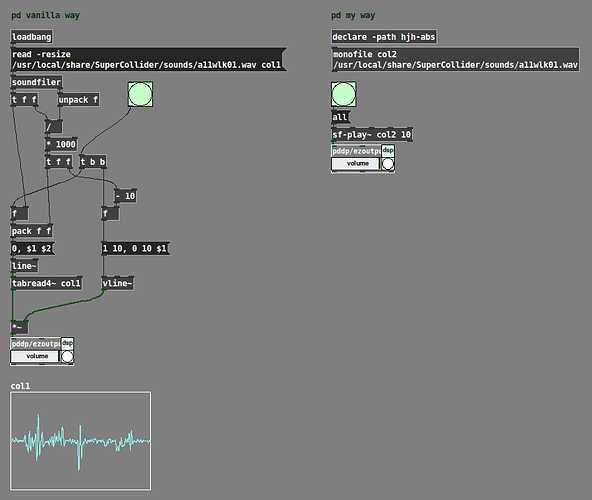

Usage

This is what it looks like to use. I’ve also made it work for Buffer.get, as it can seamlessly wrap any type.

Basic forked

fork {

~b = Buffer.readAP(s, ~path);

~b.numFrames.postln; // waits automatically

}

Basic no fork

~b = Buffer.readAP(s, ~path);

// wait a second

~b.numFrames; //has been updated behind the scenes, does not wait

Wrapping Buffer.get

fork {

~b = Buffer.readAP(s, ~path);

~result = ~b[41234]; // waits on ~b, calls Buffer.get -- returns an AutoPromise

format("result + 1 = %", ~result + 1).postln; // waits on ~result

}

~b = Buffer.readAP(s, ~path);

// wait a second

~result = ~b[41234];

// wait a second

format("result + 1 = %", ~result + 1).postln;

There is an issue here though…

fork {

~b = Buffer.readAP(s, ~path);

~result = ~b[41234]; // waits on ~b, calls Buffer.get -- returns an AutoPromise

format("1 + result = %", 1 + ~result).postln; // waits on ~result

}

… doing 1 + ~result does not work, as no message has been sent to ~result. I don’t know if this is a simple change or not, as you might just be able to call value inside Number.add with little consequence? Ultimately the issue is that it uses a primitive here. Instead, you get an error, as the current ‘value’ of ~result is nil.
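One possible workaround, going only by the class definitions above: value is one of the methods ObjectPreCaller forwards, so sending it explicitly should wait and then hand the primitive a plain number (calling value on a number returns the number itself). This is an untested sketch; it assumes s is booted and ~path points at a sound file, as in the other examples.

```supercollider
fork {
    ~b = Buffer.readAP(s, ~path);
    ~result = ~b[41234]; // an AutoPromise wrapping the sample value

    // .value is a message sent to the AutoPromise, so impl_preFunc runs (and
    // waits if needed) before the underlying number is returned to the primitive.
    format("1 + result = %", 1 + ~result.value).postln;
}
```

It is not as seamless as the rest, since the user has to know that the promise is on the right-hand side of a primitive.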

Benchmarks

@shiihs

Okay turns out I was … sort of…

Basic single buffer = the same

s.waitForBoot {

{

var b = Buffer.readAP(s, ~path) ;

b.numFrames.postln;

b.free;

}.bench // time to run: 0.12787021299999 seconds.

}

s.waitForBoot {

{

var b = Buffer.read(s, ~path) ;

s.sync;

b.numFrames.postln;

b.free;

}.bench // time to run: 0.12784386300001 seconds.

}

10 Buffers = the same.

Now this assumes you call sync, but it breaks on my system if you don’t.

This is the one that I thought would be faster, but for some reason it isn’t? Not a big deal as it is at least no slower.

s.waitForBoot {

{

~bufs = 10.collect({

Buffer.readAP(s, ~path)

});

~bufs[0].numFrames.postln;

}.bench; //time to run: 1.227028303 seconds.

~bufs.do(_.free);

}

s.waitForBoot {

{

~bufs = 10.collect({

Buffer.read(s, ~path)

});

s.sync;

~bufs[0].numFrames.postln;

}.bench; // time to run: 1.230603833 seconds.

~bufs.do(_.free);

}

Drawbacks

- Doesn’t work with keyword arg function calls (original purpose of this thread): solvable, but definitely not trivial.

- If you wrap it in normal parentheses, (...), it will only sometimes throw an error; I’ve added a custom error message to make this more obvious. @jamshark70’s suggestion to change the interpreter to always fork fixes this, but might have a performance cost, although since this is only ever evaluated one at a time, it might be minor?

- Hides the true nature of the server/client relationship. I don’t think this is a drawback at all, and you might as well argue that Buffer hides the fact everything is sending OSC messages.

- One downside is that things like SynthDef do not work if you have many being defined in parallel, and the AutoPromise approach can be unclear if something is run in parallel. I don’t think this is AutoPromise’s fault; it is SynthDef’s, and the change should be made there.

- When passing something AutoPromise’d to a method that calls a primitive, this doesn’t count as a message, so no sync is done. It might not be possible to solve this, which means the commutative property is broken in some cases (this does not apply to Buffer, as it isn’t a primitive or used in any primitive calls).
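The parentheses drawback comes down to where the first message is sent from. A sketch of both cases, reusing the names from the usage examples (s being booted and ~path being set are assumptions):

```supercollider
// Evaluated as one block outside any Routine: if the buffer has not finished
// loading when numFrames is sent, the wait cannot yield, and the custom
// message from prGenerateError is reported instead. If the buffer happens to
// be loaded already, no wait is needed and nothing goes wrong.
(
~b = Buffer.readAP(s, ~path);
~b.numFrames.postln;
)

// The same two lines inside fork: the Routine can yield, so the call waits.
fork {
    ~b = Buffer.readAP(s, ~path);
    ~b.numFrames.postln;
}
```

That is why the error only shows up sometimes: it depends on whether the resource has finished loading by the time the first message arrives.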

I still think this is the best solution for Buffer (with always fork in interpreter).

- User just writes the code as they would if s.sync didn’t exist.

- It is always safe.

- The performance is okay.

- Almost completely backwards compatible — we don’t have to wait for the messiah of SC4 to arise.

- If this is applied to other classes, there would be no reason to teach the client/server split or even mention synchronous/asynchronous programming in the introduction of SuperCollider. That is the biggest win in my mind.

As a way to fix the primitives, there could be an extra method added to Object called impl_touch, which just does nothing and would need to be called before any _XPrimitive primitive is called… Otherwise primitive types might just have to be explicitly waited on.
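A rough sketch of what that could look like, written as class-library code (it would have to live in a .sc file and be compiled with the library, not evaluated in the interpreter). impl_touch is the hypothetical method named above; overriding it in ObjectPreCaller and returning the underlying object from the override are my assumptions, and none of this has been wired into any primitive.

```supercollider
// Default: touching an ordinary object does nothing and returns it unchanged.
+ Object {
    impl_touch { ^this }
}

// Once Object understands impl_touch, doesNotUnderstand no longer fires for
// it, so the wrapper needs its own version that runs the wait hook first.
+ ObjectPreCaller {
    impl_touch { impl_preFunc.(); ^impl_underlyingObject }
}
```

A method backed by a primitive would then call something like aNumber.impl_touch on its argument before the primitive runs, and use the returned object from that point on.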