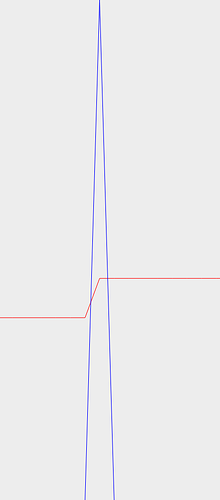

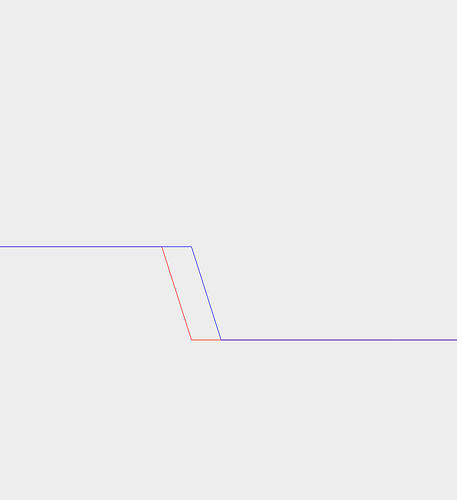

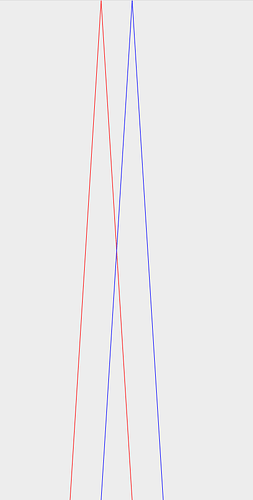

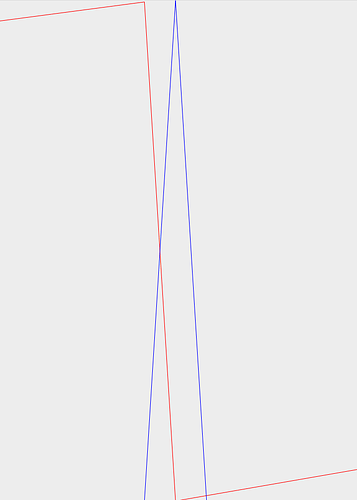

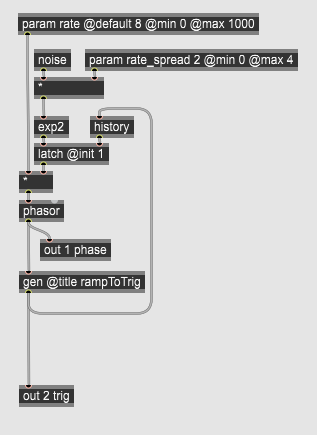

Hey, here is a version that uses an array of random values (created with the same `2 ** (mod * modDeph)` logic), a “one-shot measure phase” and a “one-shot burst phase”, and derives triggers from both. You don't have to create `measurePhase` and `measureTrigger`, but I wanted to show the different ramp and trigger logic for each of them.
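Here is a quick language-side check of the `2 ** (rrand(-1.0, 1.0) * randomness)` logic. It needs no server. This is only a sketch, and `makeSubDivs` is a hypothetical helper name. Each raw value lies between `2 ** randomness.neg` and `2 ** randomness`. With `randomness = 1`, no subdivision is more than 4 times longer than another, and `randomness = 0` gives equal subdivisions. `normalizeSum` then scales the array so it sums to 1, which means the whole burst fits exactly into `duration` after multiplying by it.

```supercollider
(
// hypothetical helper: raw values in [2 ** randomness.neg, 2 ** randomness],
// normalized so that the subdivisions sum to 1
var makeSubDivs = { |numOfSubDivs = 12, randomness = 1|
    Array.fill(numOfSubDivs, { 2 ** (rrand(-1.0, 1.0) * randomness) }).normalizeSum
};

var uniform = makeSubDivs.(12, 0);     // randomness 0: every raw value is 2 ** 0 = 1
var randomized = makeSubDivs.(12, 1);  // randomness 1: raw values between 0.5 and 2

uniform.debug(\uniform);               // twelve equal values of 1/12
randomized.debug(\randomized);
randomized.sum.debug(\sum);            // 1, up to floating point rounding
(randomized.maxItem / randomized.minItem).debug(\ratio);  // at most 2 / 0.5 = 4
)
```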

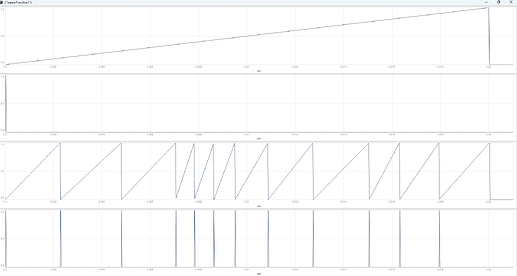

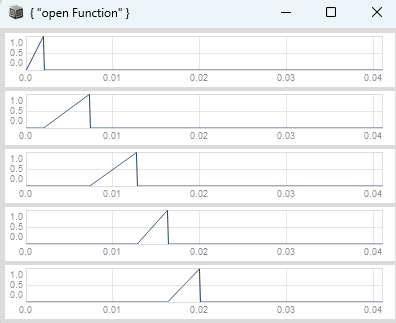

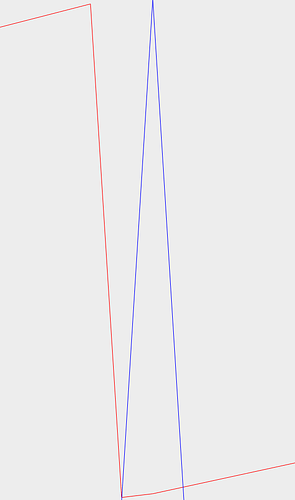

Here is the code for the plot:

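```supercollider
(
// numOfSubDivs is passed in explicitly rather than read from the outer variable declared further down
var getSubDivs = { |trigIn, arrayOfSubDivs, numOfSubDivs, duration|
    var trig = Trig1.ar(trigIn, SampleDur.ir);
    var hasTriggered = PulseCount.ar(trig) > 0;
    // Ddup(2, ...) hands the same value to both the duration and the level input of Duty.
    // Dseq's second argument counts repeats of the whole array, but rampOneShot clips the
    // step phase after numOfSubDivs cycles, so only the first numOfSubDivs values are used
    var subDiv = Ddup(2, Dseq(arrayOfSubDivs, numOfSubDivs)) * duration;
    // output stays 0 until the first trigger
    Duty.ar(subDiv, trig, subDiv) * hasTriggered;
};

// one-shot ramp: rises at 1 / duration per second, holds at `cycles`, 0 before the first trigger
var rampOneShot = { |trigIn, duration, cycles = 1|
    var trig = Trig1.ar(trigIn, SampleDur.ir);
    var hasTriggered = PulseCount.ar(trig) > 0;
    var phase = Sweep.ar(trig, 1 / duration).clip(0, cycles);
    phase * hasTriggered;
};

// a single trigger on the first sample where the ramp becomes positive
var oneShotRampToTrig = { |phase|
    var compare = phase > 0;
    var delta = HPZ1.ar(compare);
    delta > 0;
};

// a trigger whenever the ceiling of the ramp increases:
// once at the start and then at every step boundary
var oneShotBurstsToTrig = { |stepPhase|
    var phaseStepped = stepPhase.ceil;
    var delta = HPZ1.ar(phaseStepped);
    delta > 0;
};

var randomness = 1;
var numOfSubDivs = 12;
// raw values between 2 ** randomness.neg and 2 ** randomness, normalized to sum to 1
var arrayOfSubDivs = Array.fill(numOfSubDivs, { 2 ** (rrand(-1.0, 1.0) * randomness) }).normalizeSum;

{
    var initTrigger, duration;
    var measurePhase, measureTrigger;
    var seqOfSubDivs, stepPhase, stepTrigger;

    initTrigger = \trig.tr(1);
    duration = \duration.kr(0.02);

    // one ramp from 0 to 1 over the whole duration
    measurePhase = rampOneShot.(initTrigger, duration).wrap(0, 1);
    measureTrigger = oneShotRampToTrig.(measurePhase);

    // duration of the current step in seconds, one new value per step
    seqOfSubDivs = getSubDivs.(initTrigger, arrayOfSubDivs, numOfSubDivs, duration);

    // ramp from 0 to numOfSubDivs, advancing by exactly 1 per step
    stepPhase = rampOneShot.(initTrigger, seqOfSubDivs, numOfSubDivs);
    stepTrigger = oneShotBurstsToTrig.(stepPhase);
    stepPhase = stepPhase.wrap(0, 1);

    [measurePhase, measureTrigger, stepPhase, stepTrigger];
}.plot(0.021);
)
```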
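To cross-check the plot, you can compute on the language side the times at which `stepTrigger` should fire. It fires once at the start, and after that each time the step phase passes an integer. So step `i` starts at the running sum of the previous subdivisions, times `duration`. This is a sketch that repeats the array construction from the code above, and `onsets` is an illustrative name. Re-running it draws new random values, so the numbers only match a plot made with the same array.

```supercollider
(
// same construction as in the plot example above
var duration = 0.02;
var numOfSubDivs = 12;
var arrayOfSubDivs = Array.fill(numOfSubDivs, { 2 ** rrand(-1.0, 1.0) }).normalizeSum;

// step i starts after the sum of all previous subdivisions:
// [0, s0, s0 + s1, ...], scaled by the total duration
var onsets = ([0] ++ arrayOfSubDivs.integrate.drop(-1)) * duration;

arrayOfSubDivs.debug(\arrayOfSubDivs);
onsets.debug(\onsets);                         // expected stepTrigger times in seconds
(arrayOfSubDivs * duration).debug(\stepDurs);  // length of each step in seconds
)
```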

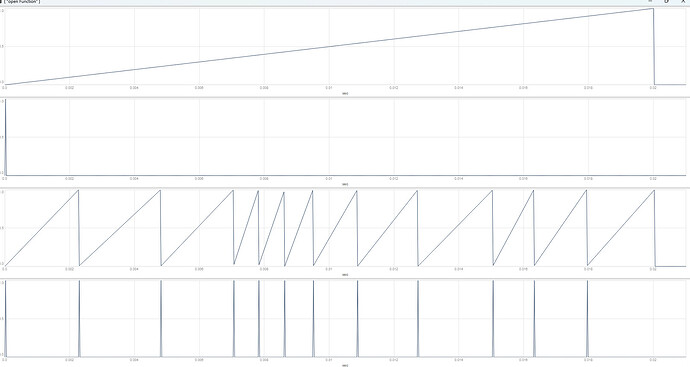

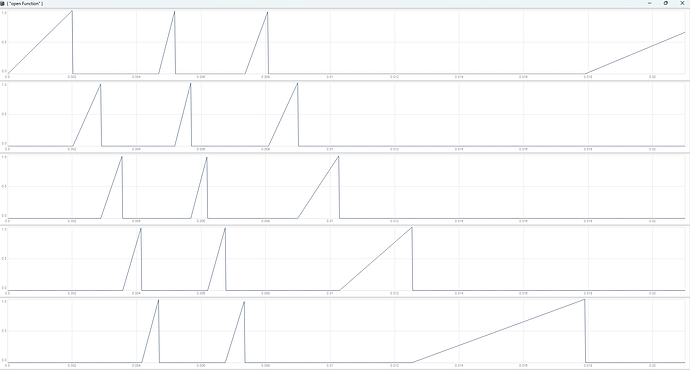

And here it is triggered by a Pmono:

```supercollider
(
var getSubDivs = { |trig, arrayOfSubDivs, numOfSubDivs, duration|
    var hasTriggered = PulseCount.ar(trig) > 0;
    // .dpoll posts each value pulled from the Dseq (debugging only, can be removed)
    var subDiv = Ddup(2, (Dseq(arrayOfSubDivs, numOfSubDivs).dpoll * duration));
    Duty.ar(subDiv, trig, subDiv) * hasTriggered;
};

var rampOneShot = { |trig, duration, cycles|
    var hasTriggered = PulseCount.ar(trig) > 0;
    var phase = Sweep.ar(trig, 1 / duration).clip(0, cycles);
    phase * hasTriggered;
};

var oneShotBurstsToTrig = { |phaseScaled|
    var phaseStepped = phaseScaled.ceil;
    var delta = HPZ1.ar(phaseStepped);
    delta > 0;
};

// per-sample increment of a ramp; wrap(-0.5, 0.5) hides the jump at each phase reset
var rampToSlope = { |phase|
    var history = Delay1.ar(phase);
    var delta = (phase - history);
    delta.wrap(-0.5, 0.5);
};

// sample counter, restarted at 0 by every trigger
var accum = { |trig|
    Duty.ar(SampleDur.ir, trig, Dseries(0, 1));
};

SynthDef(\burst, {
    var initTrigger, arrayOfSubDivs, numOfSubDivs;
    var seqOfSubDivs, stepPhaseScaled, stepPhase, stepTrigger;
    var stepSlope, windowSlope, accumulator, windowPhase, window;
    var grainSlope, grainPhase, sig;

    initTrigger = Trig1.ar(\trig.tr(0), SampleDur.ir);

    // room for up to 16 subdivisions; slots that are not set keep the default of 1.
    // The step phase is clipped at numOfSubDivs cycles, so only the first numOfSubDivs values matter
    arrayOfSubDivs = \arrayOfSubDivs.kr(Array.fill(16, 1));
    numOfSubDivs = \numOfSubDivs.kr(12);

    initTrigger.poll(initTrigger, \initTrig);  // debugging only

    // \sustain arrives in beats (dur * legato), which equals seconds at tempo 1;
    // the \time key in the Pdef below holds the same value converted to seconds
    seqOfSubDivs = getSubDivs.(initTrigger, arrayOfSubDivs, numOfSubDivs, \sustain.kr(1));

    stepPhaseScaled = rampOneShot.(initTrigger, seqOfSubDivs, numOfSubDivs);
    stepTrigger = oneShotBurstsToTrig.(stepPhaseScaled);
    stepPhase = stepPhaseScaled.wrap(0, 1);

    // window: slope of the current step scaled by 1 / overlap,
    // times a sample counter that restarts at every step trigger
    stepSlope = rampToSlope.(stepPhase);
    accumulator = accum.(stepTrigger);
    windowSlope = Latch.ar(stepSlope, stepTrigger) / max(0.001, \overlap.kr(0.5));
    windowPhase = (windowSlope * accumulator).clip(0, 1);
    window = IEnvGen.ar(Env([0, 1, 0], [0.01, 0.99], [4.0, -4.0]), windowPhase);

    // sine grain whose phase also restarts at every step trigger
    grainSlope = \freq.kr(440) * SampleDur.ir;
    grainPhase = (grainSlope * accumulator).wrap(0, 1);
    sig = sin(grainPhase * 2pi);
    sig = sig * window;

    sig = sig * Env.asr(0.001, 1, 0.001).ar(Done.freeSelf, \gate.kr(1));
    sig = sig!2 * 0.1;
    Out.ar(\out.kr(0), sig);
}).add;
)
```
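In the SynthDef, `windowSlope` is the per-sample increment of the step phase divided by `overlap`. `windowPhase` multiplies it by the sample counter from `accum`, so each window reaches 1 after roughly `overlap * subDiv * sustain` seconds. Every `stepTrigger` resets `accumulator`, so with an `overlap` above 1 the window is cut off at the next step. Below is a language-side sketch of those durations. It assumes `sustain = 4` (from `\dur, 4` and `\legato, 1.0` at tempo 1), the default `overlap = 0.5` and `freq = 440`. `stepDurs`, `grainDurs` and `cyclesPerGrain` are illustrative names.

```supercollider
(
// assumed values: sustain = dur * legato = 4 * 1.0 beats (4 s at tempo 1),
// overlap and freq as the SynthDef defaults
var sustain = 4, overlap = 0.5, freq = 440;
var arrayOfSubDivs = Array.fill(12, { 2 ** rrand(-1.0, 1.0) }).normalizeSum;

// duration of each step in seconds
var stepDurs = arrayOfSubDivs * sustain;

// windowPhase = (stepSlope / overlap) * accumulator reaches 1 after about
// overlap * stepDur seconds; with overlap > 1 the next stepTrigger resets
// the accumulator first, so the audible grain is capped at the step length
var grainDurs = stepDurs * overlap.min(1);

// number of sine cycles inside each grain
var cyclesPerGrain = grainDurs * freq;

stepDurs.debug(\stepDurs);
grainDurs.debug(\grainDurs);
cyclesPerGrain.debug(\cyclesPerGrain);
)
```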

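```supercollider
(
var arrayOfSubDivs = Array.fill(12, { 2 ** rrand(-1.0, 1.0) }).normalizeSum;

arrayOfSubDivs.debug(\arrayOfSubDivs);

Pdef(\burst,
    Pmono(\burst,
        \trig, 1,  // sets the trigger control on every event, restarting the burst
        \legato, 1.0,
        \dur, 4,
        \freq, 440,
        // sustain converted from beats to seconds
        \time, Pfunc { |ev| ev.use { ~sustain.value } / thisThread.clock.tempo },
        // extra brackets keep the array from being multichannel-expanded
        \arrayOfSubDivs, [arrayOfSubDivs],
        \numOfSubDivs, 12,
        \out, 0,
    ),
).play;
)
```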
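As written, the Pdef sends the same `arrayOfSubDivs` with every event. If you want a fresh set of subdivisions for each burst, a `Pfunc` could generate one. Below is a minimal language-side sketch (the variable names are hypothetical). It wraps the array in an extra pair of brackets, the same way `[arrayOfSubDivs]` does above.

```supercollider
(
// hypothetical variation: a new random subdivision array for every event,
// wrapped in an extra array so the event does not multichannel-expand it
var subDivPattern = Pfunc { [Array.fill(12, { 2 ** rrand(-1.0, 1.0) }).normalizeSum] };
var stream = subDivPattern.asStream;

// each call to next yields a different array
3.do { stream.next.debug(\arrayOfSubDivs) };
)
```

If you replace `\arrayOfSubDivs, [arrayOfSubDivs]` in the Pdef with `\arrayOfSubDivs, subDivPattern` (or the `Pfunc` inline), each burst should get a different rhythm.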