The name `overlap` is a bit misleading in my example. You will find that you can't specify an overlap bigger than 1 with this approach. This is because the same envelope is restarted on every trigger, while an overlap > 1 would require the previous envelope to keep running in parallel with the new one.

It is the same with playing notes on a piano. If you want to play three notes simultaneously, you need at least three fingers. The same principle applies here: for three simultaneously existing EnvGens, you need at least three voices.
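To see the limitation, here is a minimal sketch with a single EnvGen whose envelope lasts two trigger periods. It is an illustration, not the original example: the 400 Hz trigger rate and the envelope shape are assumptions borrowed from the plot further down, and `~singleVoice` is a hypothetical name. Since there is only one EnvGen, every new trigger restarts it before the previous envelope has finished, so the plot shows one curve that keeps being cut off rather than two overlapping envelopes.

```supercollider
(
// Hypothetical single-voice example: one EnvGen, retriggered at 400 Hz.
~singleVoice = {
    var tFreq = 400;
    var trig = Impulse.ar(tFreq);
    // The envelope lasts 2 trigger periods (overlap = 2), but there is only
    // one EnvGen, so each trigger restarts it before it can finish.
    EnvGen.ar(Env([0, 1, 0], [0.5, 0.5], \lin), trig, timeScale: 2 / tFreq);
};
~singleVoice.plot(0.02);
)
```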

If you use a “one-shot” SynthDef instead, where the envelope frees the Synth when it has finished (doneAction 2), and use a Pbind as a recipe for an EventStreamPlayer that creates a new Synth on the server for every Event, the polyphony is taken care of automatically.

Take, for example, the code by @rdd below, where legato > 1: if you open the Node Tree (`s.plotTree`), you can see that two Synth nodes exist on the server at the same time. If you set legato to 3, three Synth nodes will be running at once.

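```
(
SynthDef('sin') { |out = 0, freq = 440, sustain = 1, amp = 0.1|
    var osc = SinOsc.ar(freq, 0);
    // one-shot envelope: doneAction 2 frees the Synth when the envelope has finished
    var env = EnvGen.ar(Env.sine(sustain, amp), doneAction: 2);
    OffsetOut.ar(out, osc * env);
}.add
)
```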

```
(
Pbind(
    'instrument', 'sin',
    'dur', 0.5,
    'legato', 2,
    'freq', 800
).play;
)
```
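To watch the number of nodes change, here is a sketch (an addition, not part of @rdd's example) that plays the same `'sin'` SynthDef while stepping legato through 1, 2 and 3, eight events each. Since each Synth lasts `dur * legato` seconds and a new one starts every `dur` seconds, the Node Tree should show roughly one, then two, then three overlapping Synth nodes. It assumes the server is booted and the SynthDef above has been added; `~legatoSteps` and `~player` are hypothetical names.

```supercollider
(
// Open the Node Tree window to watch the Synth nodes come and go.
s.plotTree;
~legatoSteps = Pbind(
    \instrument, \sin,
    \dur, 0.5,
    // 8 events each with legato 1, 2 and 3, then repeat
    \legato, Pseq([1, 2, 3].collect { |legato| legato ! 8 }.flat, inf),
    \freq, 800
);
~player = ~legatoSteps.play;
)

~player.stop;
```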

If you would like to have polyphonic behaviour inside a single SynthDef, you have to use multichannel expansion. The easiest way to let the EnvGens inside your SynthDef overlap is the so-called “round-robin method”: you create multiple channels and distribute your triggers across these channels in turn. Here this is done with PulseDivider.
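How the `start` argument of PulseDivider produces the round-robin order can be checked on the language side. The following is a hypothetical helper, `~roundRobinChannels`, that mimics PulseDivider's counter under the assumption that each trigger increments a counter starting at `start`, and that the UGen fires and resets the counter to 0 once it reaches `div`. With `start = numChannels - 1 - chan`, trigger number k then lands on channel k mod numChannels.

```supercollider
(
// Hypothetical language-side model of the PulseDivider round robin.
// Returns, for each incoming trigger, the index of the channel that fires.
~roundRobinChannels = { |numChannels, numTriggers|
    // same start values as in multiChannelTrigger: numChannels - 1 - chan
    var counters = numChannels.collect { |chan| numChannels - 1 - chan };
    numTriggers.collect {
        var fired;
        // every divider sees every trigger and counts up
        counters = counters + 1;
        // the divider whose count reached numChannels outputs a trigger ...
        fired = counters.detectIndex { |count| count >= numChannels };
        // ... and resets its count to 0
        counters = counters.collect { |count| if(count >= numChannels) { 0 } { count } };
        fired
    };
};
~roundRobinChannels.(4, 8).postln; // -> [ 0, 1, 2, 3, 0, 1, 2, 3 ]
)
```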

(

var multiChannelTrigger = { |numChannels, trig|

numChannels.collect{ |chan|

PulseDivider.ar(trig, numChannels, numChannels - 1 - chan);

};

};

{

var numChannels = 4;

var tFreq = \tFreq.kr(400);

var trig = Impulse.ar(tFreq);

var triggers = multiChannelTrigger.(numChannels, trig);

var durations = min(\overlap.kr(1), numChannels) / tFreq;

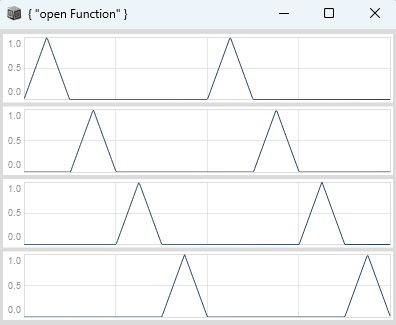

EnvGen.ar(Env([0, 1, 0], [0.5, 0.5], \lin), triggers, timeScale: durations);

}.plot(0.02);

)

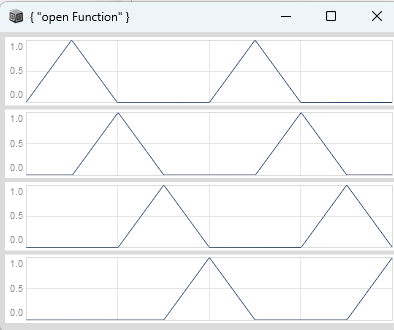

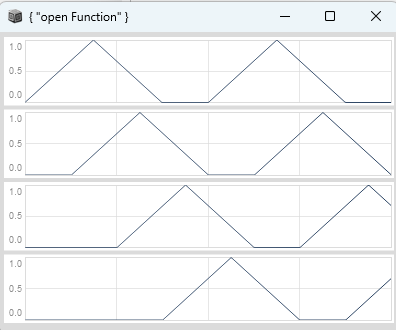

If you now increase `overlap`, e.g. by changing the default to `\overlap.kr(2)` or `\overlap.kr(3)` and evaluating the plot again, you can see that the channels overlap.

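The maximum possible overlap with this approach is the number of channels (the code clamps `overlap` with `min(..., numChannels)`). The number of channels has to be fixed when the SynthDef is built and can't be changed afterwards, because the UGen graph of a SynthDef is static: once it has been built, you can't add or remove UGens, only change the values of its controls.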
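A common workaround, sketched here as an assumption rather than part of the original example, is to build several SynthDefs with different channel counts up front and choose the one whose maximum overlap you need. The names `\roundRobin2`, `\roundRobin4` and `\roundRobin8` are hypothetical, and for brevity all channels share one SinOsc, so only the envelopes are polyphonic.

```supercollider
(
var multiChannelTrigger = { |numChannels, trig|
    numChannels.collect { |chan|
        PulseDivider.ar(trig, numChannels, numChannels - 1 - chan);
    };
};

// numChannels is baked into each graph, so build one SynthDef per maximum overlap
[2, 4, 8].do { |numChannels|
    SynthDef(("roundRobin" ++ numChannels).asSymbol, {
        var tFreq = \tFreq.kr(4);
        var trig = Impulse.ar(tFreq);
        var triggers = multiChannelTrigger.(numChannels, trig);
        // overlap can still change at runtime, but never beyond numChannels
        var durations = min(\overlap.kr(1), numChannels) / tFreq;
        var envs = EnvGen.ar(Env([0, 1, 0], [0.03, 0.97], \lin), triggers, timeScale: durations);
        // all channels share one sine here; the envelopes are summed to mono
        var sig = (SinOsc.ar(\freq.kr(440)) * envs).sum;
        Out.ar(\out.kr(0), Pan2.ar(sig * \amp.kr(-10.dbamp), \pan.kr(0)));
    }).add;
};
)

// up to 8 overlapping envelopes inside a single Synth node
x = Synth(\roundRobin8, [\tFreq, 4, \overlap, 6]);
x.free;
```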

If you instead run the following example with overlap > 1 and open the Node Tree, you can see that there is just one Synth node on the server. The polyphony happens inside the SynthDef:

```
(
var multiChannelTrigger = { |numChannels, trig|
    numChannels.collect { |chan|
        PulseDivider.ar(trig, numChannels, numChannels - 1 - chan);
    };
};

SynthDef(\multiChannelTest, {
    var numChannels = 5;
    var tFreq, trig, triggers, envs, sigs, sig, durations, ratios, freqs;

    tFreq = \tFreq.kr(4);
    trig = Impulse.ar(tFreq);
    triggers = multiChannelTrigger.(numChannels, trig);
    durations = min(\overlap.kr(3), numChannels) / tFreq;
    envs = EnvGen.ar(Env([0, 1, 0], [0.03, 0.97], \lin), triggers, timeScale: durations);

    ratios = Dseq([1.0, 1.5258371159539, 1.6603888559977, 1.8068056703405], inf);
    freqs = \freq.kr(440) * Demand.ar(triggers, 0, ratios);

    sigs = SinOsc.ar(freqs);
    sigs = sigs * envs;

    sig = sigs.sum;
    sig = sig * \amp.kr(-10.dbamp);
    sig = Pan2.ar(sig, \pan.kr(0));

    Out.ar(\out.kr(0), sig);
}).add;
)
```

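```
x = Synth(\multiChannelTest, [\tFreq, 4, \freq, 440, \overlap, 1]);

// overlap > 1: the envelopes overlap, but there is still only one Synth node
x.set(\overlap, 3);

x.free;
```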

I have renamed the variables (`envs`, `freqs`, `sigs`) so it is clearer that there are multiple instances of the envelopes, frequencies and SinOscs in your SynthDef; you can use `.debug` to see that in the post window. If you want to play these five channels of audio on a single pair of stereo speakers, you have to sum the signals to a single channel and use Pan2.ar afterwards to create a stereo output, as done above with `sigs.sum`.
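Besides `.debug`, you can count the UGens in a built SynthDef to confirm the multichannel expansion. The following is a hypothetical check on a cut-down version of the SynthDef above (fixed trigger rate, no Demand, a distinct frequency per channel); `~countUGens` and `\multiChannelCount` are illustrative names. It only builds the graph on the language side, so it does not need a running server, and with `numChannels = 5` each count should come out as 5.

```supercollider
(
// Hypothetical helper: count the UGens of a given class in a built SynthDef.
~countUGens = { |def, class| def.children.count { |ugen| ugen.isKindOf(class) } };

~multiChannelDef = SynthDef(\multiChannelCount, {
    var numChannels = 5;
    var trig = Impulse.ar(4);
    var triggers = numChannels.collect { |chan|
        PulseDivider.ar(trig, numChannels, numChannels - 1 - chan);
    };
    // an array of triggers expands into one EnvGen per channel
    var envs = EnvGen.ar(Env([0, 1, 0], [0.03, 0.97], \lin), triggers, timeScale: 0.75);
    // one SinOsc per channel, each with its own frequency
    var sigs = SinOsc.ar(440 * (1..numChannels)) * envs;
    Out.ar(0, Pan2.ar(sigs.sum * 0.1));
});

[PulseDivider, EnvGen, SinOsc].collect { |class| ~countUGens.(~multiChannelDef, class) }.postln;
)
```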

There is a more sophisticated method for polyphony, described in the gen~ book, called “overdubbing the future”: you have one single-sample buffer reader and several single-sample buffer writers that throw events into the future and specify when to trigger them, without having to specify the maximum polyphony. But that's not possible in SC.