I’m not experienced with processing large amounts of data, and I ended up with a counter-intuitive result that I haven’t been able to diagnose, so I’d appreciate any help or clues about this problem.

[context, skip ahead if needed]

Here’s the context: I’m trying to mimic Audacity’s waveform display, which shows the min, max and RMS of the range of samples (the ‘sound area’) that each pixel covers.

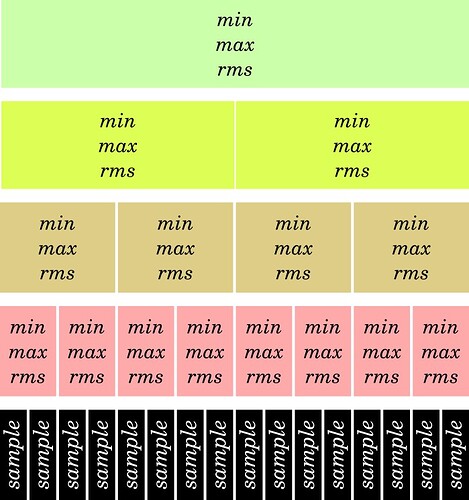

A friend of mine told me that using dyadic rationals (precomputing values over power-of-two blocks of samples) might be the fastest way to recompute min, max and RMS for a new range. This trades RAM for CPU: a lot of extra data is kept in memory so that less has to be computed. Visually:

For each power-of-two block size (‘sample areas’), we precompute the min, max and RMS of every block. To get those values for an arbitrary range, we take the biggest block that fits inside the range boundaries, then repeat on the remaining parts at each end until the range is filled. We then only need to compute min, max and RMS once, over the smallest possible number of precomputed values.
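The lookup described above can be sketched in sclang as follows. This is a minimal sketch, not the code from this post: `~dyadicCover` and `~rangeStats` are hypothetical names, and it assumes a layout where `layers[k][j]` holds `[min, max, sumOfSquares]` for block `j` of `2 ** k` samples (so layer 0 holds one entry per sample, unlike the raw samples stored in `data[channel][0]` in my code). Storing the sum of squares instead of the RMS is also an assumption; it lets blocks of different lengths combine exactly, with the RMS recovered as `sqrt(sumOfSquares / numSamples)`. The sketch walks the range left to right, greedily taking the largest aligned block that fits, rather than starting from the biggest block in the middle; both approaches cover the range with a few aligned power-of-two blocks.

```supercollider
(
// Hypothetical helper: split the sample range [start, end) into aligned
// power-of-two blocks, returned as [level, blockIndex] pairs.
// A block at `level` spans 2 ** level samples (level 0 = single samples).
~dyadicCover = { |start, end|
	var blocks = List.new;
	var i = start;
	while { i < end } {
		var level = 0, size = 1;
		// Double the block while it stays aligned on i and inside the range
		while { (i % (size * 2) == 0) and: { i + (size * 2) <= end } } {
			size = size * 2;
			level = level + 1;
		};
		blocks.add([level, i div: size]);
		i = i + size;
	};
	blocks.asArray
};

// Hypothetical lookup: combine the precomputed entries of the cover.
// Assumes layers[level][blockIndex] = [min, max, sumOfSquares] and end > start.
~rangeStats = { |layers, start, end|
	var lo = inf, hi = inf.neg, sumSq = 0;
	~dyadicCover.(start, end).do { |block|
		var entry = layers[block[0]][block[1]];
		lo = lo.min(entry[0]);
		hi = hi.max(entry[1]);
		sumSq = sumSq + entry[2];
	};
	// RMS over the whole range, from the summed squares
	[lo, hi, (sumSq / (end - start)).sqrt]
};
)
```

For example, `~dyadicCover.(3, 12)` returns `[[0, 3], [2, 1], [2, 2]]`: sample 3 on its own, then the two 4-sample blocks covering samples 4 to 11, so only three precomputed entries need to be combined instead of nine samples.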

[end of context, here’s the problem]

Anyway, I tried to generate those layers of data automatically, starting with 2-sample chunks, then building each subsequent layer from the previous one. Each layer has half as many entries as the previous one, so it should take half the time to compute. But that is not the case: each layer takes more and more time even though it computes fewer values! I suspect this has to do with pointers, but I’m not sure.
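Building each layer from the previous one can be sketched in sclang like this. It is a minimal sketch under a few assumptions, not the code from this post: `~combine`, `~nextLayer` and `~buildLayers` are hypothetical names, layer 0 stores one `[min, max, sumOfSquares]` entry per sample, and each entry keeps the sum of squares rather than the RMS so that the shorter block at the end of a layer still combines exactly. Entries are combined field by field (min of the mins, max of the maxes, sum of the sums). This matters in sclang because `min`, `max` and `pow` applied to whole arrays work element-wise and return arrays, so passing complete `[min, max, rms]` entries to them yields nested arrays instead of three numbers.

```supercollider
(
// Combine two [min, max, sumOfSquares] entries field by field,
// so every entry stays exactly three numbers.
~combine = { |a, b|
	[a[0].min(b[0]), a[1].max(b[1]), a[2] + b[2]]
};

// Build the next layer from the previous one; an odd trailing entry
// (prev[i * 2 + 1] is nil past the end) is carried up unchanged.
~nextLayer = { |prev|
	Array.fill((prev.size + 1) div: 2, { |i|
		var a = prev[i * 2], b = prev[(i * 2) + 1];
		if(b.isNil) { a } { ~combine.(a, b) }
	})
};

// Build all layers for one channel: layer 0 holds one entry per sample,
// layer k holds one entry per block of 2 ** k samples.
~buildLayers = { |samples|
	var layers = [Array.fill(samples.size, { |i|
		var x = samples[i];
		[x, x, x * x]
	})];
	while { layers.last.size > 1 } {
		layers = layers.add(~nextLayer.(layers.last));
	};
	layers
};
)
```

With entries kept at a fixed size, each layer does about half the work of the one below it, and all layers above layer 0 together hold roughly N entries for N samples (N/2 + N/4 + …, plus at most one extra per layer from rounding up).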

Here is the code. A warning: only try it with a very short sound file. On my computer, a 300 ms mono file takes 45 seconds to compute! A 4 s stereo file never finishes computing and makes SC lag…

```supercollider
(
var getDRData = { |filePath|
	var soundFile = SoundFile.openRead(filePath);
	var array = FloatArray.newClear(
		soundFile.numFrames * soundFile.numChannels
	);
	var channels;
	var data = Array.newClear(soundFile.numChannels);
	var fileSize = soundFile.numFrames;
	var maxPow = 2;
	var nPow = 1;
	var sizes;
	var min, max, rms;
	var current, prev;

	soundFile.readData(array);
	soundFile.close;

	channels = array.unlace(soundFile.numChannels);

	while { 2.pow(nPow + 1) < fileSize } {
		nPow = nPow + 1;
	};

	sizes = Array.newClear(nPow);

	// Calculate array sizes
	nPow.do({ |pow|
		var remainder = fileSize % 2.pow(pow + 1);
		var size = (fileSize - remainder) / 2.pow(pow + 1);
		if(remainder > 0) {
			size = size + 1;
		};
		sizes[pow] = size.asInteger;
	});

	// Create placeholders
	data.size.do({ |channel|
		data[channel] = Array.newClear(nPow + 1);
		nPow.do({ |index|
			data[channel][index + 1] = Array.newClear(sizes[index]);
		});
	});

	// Compute data
	channels.do({ |channel, channelIndex|
		data[channelIndex][0] = channel;

		// 2 samples first
		data[channelIndex][1] = Array.newClear(sizes[0]);
		(data[channelIndex][1].size - 1).do({ |index|
			min = min(channel[index * 2], channel[(index * 2) + 1]);
			max = max(channel[index * 2], channel[(index * 2) + 1]);
			rms = (channel[index * 2].pow(2) + channel[(index * 2) + 1].pow(2)).sqrt;
			data[channelIndex][1][index] = [min, max, rms];
		});

		// Calculate last index
		if((channel.size % 2) == 0) {
			min = min(channel[channel.size - 2], channel.last);
			max = max(channel[channel.size - 2], channel.last);
			rms = (channel[channel.size - 2].pow(2) + channel.last.pow(2)).sqrt;
			data[channelIndex][1][data[channelIndex][1].size - 1] = [min, max, rms];
		} {
			data[channelIndex][1][data[channelIndex][1].size - 1] = Array.fill(3, { channel.last });
		};

		// HERE COMES THE TROUBLE:
		// Calculate other powers of 2:
		(nPow - 1).do({ |pow|
			"Power: ".post;
			2.pow(pow + 1).asInteger.postln;
			"Data number: ".post;
			data[channelIndex][pow + 2].size.postln;

			bench {
				(data[channelIndex][pow + 2].size - 1).do({ |index|
					min = min(
						data[channelIndex][pow + 1][index * 2],
						data[channelIndex][pow + 1][(index * 2) + 1]
					);
					max = max(
						data[channelIndex][pow + 1][index * 2],
						data[channelIndex][pow + 1][(index * 2) + 1]
					);
					rms = (
						data[channelIndex][pow + 1][index * 2].pow(2) + data[channelIndex][pow + 1][(index * 2) + 1].pow(2)
					).sqrt;
					data[channelIndex][pow + 2][index] = [min, max, rms];
				});
			};

			bench {
				if((data[channelIndex][pow + 1].size % 2) == 0) {
					min = min(
						data[channelIndex][pow + 1][data[channelIndex][pow + 1].size - 2],
						data[channelIndex][pow + 1].last
					);
					max = max(
						data[channelIndex][pow + 1][data[channelIndex][pow + 1].size - 2],
						data[channelIndex][pow + 1].last
					);
					rms = (
						data[channelIndex][pow + 1][data[channelIndex][pow + 1].size - 2].pow(2) + data[channelIndex][pow + 1].last.pow(2)
					).sqrt;
					data[channelIndex][pow + 2][data[channelIndex][pow + 2].size - 1] = [min, max, rms];
				} {
					data[channelIndex][pow + 2][data[channelIndex][pow + 2].size - 1] = Array.fill(3, { data[channelIndex][pow + 1].last });
				};
			};

			"".postln;
		});
	});
};

getDRData.value(thisProcess.nowExecutingPath.dirname +/+ "filename.wav");
)
```

Thanks for your attention,

Simon