Hey, while creating the GrainDelay I figured out a way to implement a dynamic VoiceAllocator. This comes in handy for server-side polyphony (especially for granulation). Instead of using the round-robin method (increment a counter for every received trigger and send the next voice to the next channel), the VoiceAllocator finds a channel that is currently free and schedules the next event on that channel. If no channel is available, the event gets dropped.
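To make the difference between the two strategies concrete, here is a small language-side sketch. It is only an illustration of the idea, not the UGen's actual code: the event list, the sample-based timing and the names `~roundRobin` and `~allocate` are all made up for this example.

```supercollider
(
// Language-side sketch only; the real VoiceAllocator runs on the server.
// Events are [onset, duration] pairs in samples (made-up values).
~events = [[0, 500], [100, 300], [200, 100], [400, 100]];
~numChannels = 2;

// Round-robin: a counter picks the next channel, whether it is busy or not.
~roundRobin = { |events, numChannels|
    events.collect { |ev, i| i % numChannels };
};

// Dynamic allocation: take the first free channel, or nil to drop the event.
~allocate = { |events, numChannels|
    var busyUntil = Array.fill(numChannels, 0);
    events.collect { |ev|
        var onset = ev[0], dur = ev[1];
        var chan = busyUntil.detectIndex { |end| end <= onset };
        if(chan.notNil) { busyUntil[chan] = onset + dur };
        chan;
    };
};

~roundRobin.(~events, ~numChannels).postln; // -> [ 0, 1, 0, 1 ]
~allocate.(~events, ~numChannels).postln;   // -> [ 0, 1, nil, 1 ]
)
```

With round-robin the third event lands on channel 0 while that channel is still busy until sample 500. The allocator drops it instead (`nil`) and gives the fourth event to channel 1, which has just become free.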

You can grab the latest release here

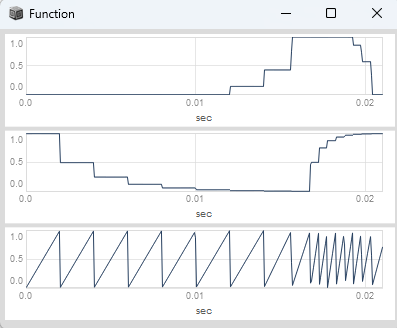

The EventScheduler has two arguments, triggerRate and reset, and outputs:

- a derived trigger on output[0]

- a linear ramp between 0 and 1 on output[1]

- a derived and latched rate in Hz on output[2]

- a derived sub-sample offset on output[3]

Its own rate is sampled and held for every ramp cycle, which makes sure the internal ramps stay linear and between 0 and 1 while being modulated.
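A rough language-side sketch of that sample-and-hold behaviour, in case it helps. This is my assumption of how such a ramp can be computed, not the UGen's source; `~scheduler` and its frame layout `[trig, phase, heldRate, offset]` are hypothetical names for illustration.

```supercollider
(
// Language-side sketch of a ramp whose rate is latched once per cycle.
// rates holds one (possibly modulated) rate value in Hz per sample.
~scheduler = { |rates, sampleRate = 48000|
    var phase = 0.0;
    var held = rates[0];                 // rate latched for the current cycle
    rates.collect { |rate|
        var slope = held / sampleRate;   // constant within one cycle
        var trig = 0, offset = 0.0;
        phase = phase + slope;
        if(phase >= 1.0) {
            trig = 1;
            // fraction of a sample that has passed since the ramp crossed 1
            offset = (phase - 1.0) / slope;
            held = rate;                 // latch the new rate only here
            phase = offset * (held / sampleRate);
        };
        [trig, phase, held, offset]
    };
};

// constant 12 kHz at 48 kHz sample rate: one trigger every 4 samples
~scheduler.(Array.fill(8, 12000)).collect { |frame| frame[0] }.postln;
// -> [ 0, 0, 0, 1, 0, 0, 0, 1 ]
)
```

Because a changed rate is only picked up when the ramp wraps, every cycle is a straight line, and the offset tells you where between two samples the wrap actually happened.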

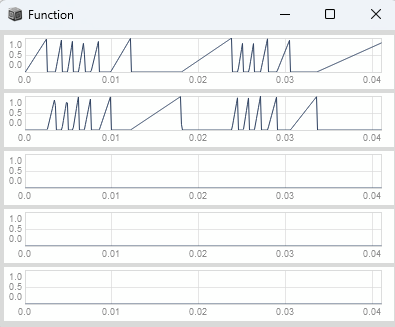

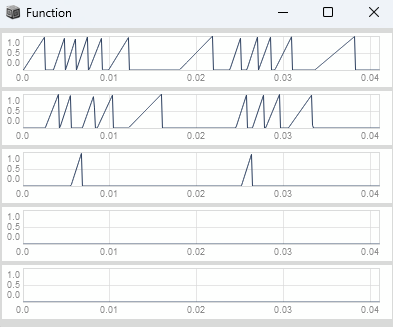

The VoiceAllocator has four arguments (numChannels, a trigger, a rate and a sub-sample offset) and outputs:

- an array of sub-sample accurate phases

- an array of triggers

This setup then allows you to:

- modulate the trigger rate of the EventScheduler without distorting its phase, and calculate the sub-sample offset for one of its outputs

- distribute the events across the channels via the VoiceAllocator and make sure the channel you distribute your next voice to is currently free.

These things in combination then enable:

- trigger frequency modulation for audio ratchets without distorting the phase

- overlapping grains while the events have different durations without distorting the phase

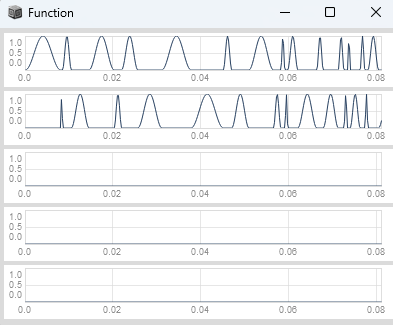

Currently the interface is a bit convoluted, but I hope I can think this through. Try adjusting numChannels, and look at the plot when adjusting tFreqMD and overlapMD:

    (
    {
        var numChannels = 5;
        var reset, tFreqMD, tFreq;
        var overlapMD, overlap;
        var events, voices, phases, triggers;

        reset = Trig1.ar(\reset.tr(0), SampleDur.ir);

        // trigger rate and overlap, each modulated by a 50 Hz sine (depth in octaves)
        tFreqMD = \tFreqMD.kr(2);
        tFreq = \tFreq.kr(400) * (2 ** (SinOsc.ar(50) * tFreqMD));

        overlapMD = \overlapMD.kr(0);
        overlap = \overlap.kr(1) * (2 ** (SinOsc.ar(50) * overlapMD));

        events = EventScheduler.ar(tFreq, reset);

        voices = VoiceAllocator.ar(
            numChannels: numChannels,
            trig: events[0],
            rate: events[2] / overlap,
            subSampleOffset: events[3],
        );

        // first numChannels outputs are phases, the next numChannels are triggers
        phases = voices[0..numChannels - 1];
        triggers = voices[numChannels..numChannels * 2 - 1];

        phases;
    }.plot(0.041);
    )

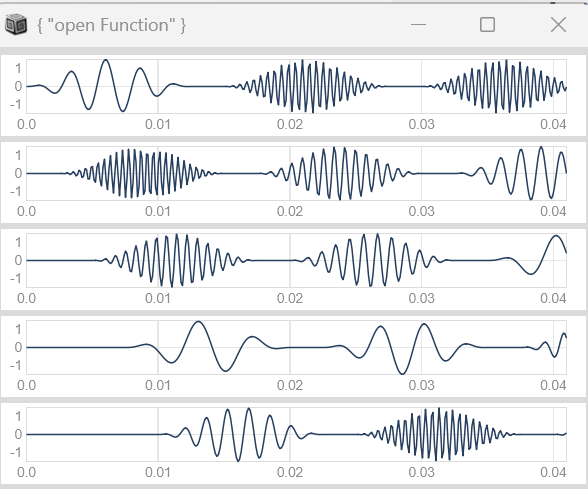
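A back-of-the-envelope sketch for picking numChannels, based only on the `rate: events[2] / overlap` line in the example above. The helper names `~grainDur` and `~voicesNeeded` are made up, and the numbers assume a constant trigger rate and that a voice ending exactly on the next onset counts as free.

```supercollider
(
// Each voice runs at tFreq / overlap, so one event lasts overlap / tFreq seconds.
~grainDur = { |tFreq, overlap| overlap / tFreq };

// At a constant trigger rate, roughly ceil(overlap) voices are busy at once.
~voicesNeeded = { |overlap| overlap.ceil.asInteger };

~grainDur.(400, 1).postln;     // -> 0.0025
~voicesNeeded.(2.5).postln;    // -> 3
)
```

So with numChannels = 5 an overlap of up to 5 should fit without dropped events as long as the rate is static. Once tFreq is modulated, slow (long) events can still be running while fast ones arrive, so more channels may be needed for a moment.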

In the context of granular synthesis, this setup allows you to generate windowPhases from the multichannel phase output of VoiceAllocator to drive an arbitrary stateless window function (have a look here), while using the sub-sample offset output of EventScheduler and the multichannel trigger output of VoiceAllocator to accumulate or integrate the grainPhases which drive your carrier oscillator.
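By "stateless" I mean the window output depends only on the current phase value. A minimal sketch with a plain Hann window (no skew parameter, and `~hann` is just a name for this example, not one of the UGens from the release):

```supercollider
(
// Plain Hann window evaluated from a phase between 0 and 1.
~hann = { |phase| 0.5 * (1 - cos(2pi * phase)) };

// phases 0, 0.25, 0.5, 0.75, 1 give roughly 0, 0.5, 1, 0.5, 0
~hann.((0..4) / 4).postln;
)
```

Since the function only uses arithmetic and `cos`, the same function can be applied to an array of phase signals inside a synth function.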

The reason we can't put this all together in one UGen is that we want to use the multichannel windowPhases from VoiceAllocator to drive modulators for FM of the multichannel grain frequencies. We additionally need the multichannel trigger from VoiceAllocator and the sub-sample offset from EventScheduler, so we can pass them to the grainPhase accumulator / integrator to reset its phase and add the sub-sample offset.

Here is a still somewhat convoluted test example, where I have added the multichannel accumulator for the grainPhase manually in sc:

    (
    // Counts samples since the last trigger and adds the sub-sample offset.
    // Stays at 0 (plus offset) until the first trigger has arrived.
    var accumulatorSubSample = { |trig, subSampleOffset|
        var hasTriggered = PulseCount.ar(trig) > 0;
        var accum = Duty.ar(SampleDur.ir, trig, Dseries(0, 1)) * hasTriggered;
        accum + subSampleOffset;
    };

    // One accumulator per channel.
    var multiChannelAccumulator = { |triggers, subSampleOffsets|
        triggers.collect{ |localTrig, i|
            accumulatorSubSample.(localTrig, subSampleOffsets[i]);
        };
    };

    // A new random value between -1 and 1 on every trigger of each channel.
    var multiChannelDwhite = { |triggers|
        var demand = Dwhite(-1.0, 1.0);
        triggers.collect{ |localTrig|
            Demand.ar(localTrig, DC.ar(0), demand)
        };
    };

    {
        var numChannels = 8;
        var reset, tFreqMD, tFreq;
        var overlapMD, overlap;
        var events, voices, windowPhases, triggers;
        var sig;
        var grainFreqMod, grainFreqs, grainSlopes, grainPhases, sigs;
        var grainWindows;

        reset = Trig1.ar(\reset.tr(0), SampleDur.ir);

        tFreqMD = \tFreqMD.kr(2);
        tFreq = \tFreq.kr(10) * (2 ** (SinOsc.ar(0.3) * tFreqMD));

        overlapMD = \overlapMD.kr(0);
        overlap = \overlap.kr(1) * (2 ** (SinOsc.ar(0.3) * overlapMD));

        events = EventScheduler.ar(tFreq, reset);

        voices = VoiceAllocator.ar(
            numChannels: numChannels,
            trig: events[0],
            rate: events[2] / overlap,
            subSampleOffset: events[3],
        );

        windowPhases = voices[0..numChannels - 1];
        triggers = voices[numChannels..numChannels * 2 - 1];

        grainWindows = HanningWindow.ar(windowPhases, \skew.kr(0.1));

        // random frequency per grain, depth in octaves
        grainFreqMod = multiChannelDwhite.(triggers);
        grainFreqs = \freq.kr(440) * (2 ** (grainFreqMod * \freqMD.kr(2)));

        // per-sample phase increment, accumulated from each channel's trigger
        grainSlopes = grainFreqs * SampleDur.ir;
        grainPhases = (grainSlopes * multiChannelAccumulator.(triggers, Latch.ar(events[3], triggers))).wrap(0, 1);

        sigs = sin(grainPhases * 2pi);
        sigs = sigs * grainWindows;

        sigs = PanAz.ar(2, sigs, \pan.kr(0));
        sig = sigs.sum;

        sig!2 * 0.1;
    }.play;
    )
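What the accumulator in the example above boils down to, written out language-side with plain numbers. This is just a sketch of the arithmetic (slope times sample count plus offset, wrapped); `~grainPhase` and the values are made up for illustration.

```supercollider
(
// Phase of a grain n samples after its trigger, including the sub-sample offset.
~grainPhase = { |freq, sampleRate, subSampleOffset, numSamples|
    var slope = freq / sampleRate;   // phase increment per sample
    numSamples.collect { |n| (slope * (n + subSampleOffset)).wrap(0, 1) };
};

// 12 kHz grain at 48 kHz, trigger happened half a sample before the sample tick
~grainPhase.(12000, 48000, 0.5, 4).postln; // -> [ 0.125, 0.375, 0.625, 0.875 ]

// same grain without the offset
~grainPhase.(12000, 48000, 0, 4).postln;
)
```

Without the offset every grain would start at phase 0 on the sample grid. With it, the phase already contains the part of a sample that has passed since the event was actually due.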

If you have additional ideas, let me know.