I’m deeply interested in granular synthesis and have been working to understand it both technically and conceptually. Besides learning how to implement it in SuperCollider, are there any other resources you could recommend for exploring this topic in greater depth?

Microsound by Curtis Roads is one of the definitive texts.

The Grain UGens in SC are heavily influenced by the kinds of controls that Barry Truax used for his work in the 80s (especially paired with the Tendency quark).

Best

Josh

/*

Josh Parmenter

www.realizedsound.net/josh

*/

I definitely need to read it when I find time!

The chapter on granular synthesis from the SuperCollider book is quite helpful too.

I think Roads’s Microsound book is a classic, but it doesn’t cover enough implementation details. Granular synthesis is conceptually quite simple, but the technical implementations can be quite complex, depending on the level at which you are optimising (as with any parallel or particle system).

The best granular synthesis guides I have found are the Generating Sound and Organizing Time book, Ross Bencina’s article, and Kentaro Suzuki’s gen~ patchers. Frankly, a lot of work has been done in granular synthesis over the last 5-10 years in Max/MSP and gen~, so it might be worth looking into that world and translating some of the patches, although I have seen some good Faust patches too.

Alik Rustamoff/Reflectives also has some really good SC-specific tutorials on YouTube that go a little deeper than just using the granular UGens. See also @dietcv’s recent thread (his work draws upon the Generating Sound book).

If you really want to go deep, check out the source code for Emilie Gillet’s Mutable Instruments Clouds module on GitHub.

Hey, I didn’t know the article by Ross Bencina. That’s really cool.

In my opinion, “Generating Sound and Organizing Time” is the most up-to-date book; it goes into the nitty-gritty details of implementing granular synthesis where other literature falls short. It’s very well written and easy to follow.

I also found Miller Puckette’s book “The Theory and Technique of Electronic Music” useful, together with some of his classes, for implementing granular synthesis. But he often has his own terminology for things (for example wavepackets), which doesn’t match what other authors write.

Definitely. Code examples (Alberto’s Chapter 16) can be found here:

Note the included and necessary extension. Also, this refers to an old SC version: replace memStore with add.
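As a minimal sketch of that replacement (the SynthDef name and body below are placeholders for illustration, not taken from the book’s examples):

```supercollider
(
// Old SC versions: SynthDef(\grainSketch, { ... }).memStore;
// Current SC: use add instead.
// \grainSketch and its body are placeholders for illustration only.
SynthDef(\grainSketch, { |out = 0, freq = 440, dur = 0.05, amp = 0.1|
	// one sine-shaped grain that frees itself when the envelope is done
	var env = EnvGen.ar(Env.sine(dur), doneAction: 2);
	Out.ar(out, SinOsc.ar(freq) * env * amp);
}).add;
)
```

Everything else in such a definition stays the same; only the final method call changes.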

miSCellaneous_lib contains the tutorials ‘Buffer Granulation’ and ‘Live Granulation’ (without buffering). Other classes of miSCellaneous also refer to granulation: DX suite (multichannel envelopes), PbindFx (granulation with effects), Sieves and Psieve patterns, ZeroXBufRd / TZeroXBufRd (wavesets), PLbindef / PLbindefPar (granulation and live coding).

Here’s another live granulation strategy not included in the tutorial:

I think there are different attempts at investigating the same topic, some work which has already been done and some which is currently in progress. Have you made an attempt at asynchronous granular scheduling while maintaining linear phases between 0 and 1? The approach in “Real-Time Attack and Decay Control of grain envelope for Granular Synthesis” is based on Impulse and the round-robin method via PulseDivider, which only gives you synchronous grain scheduling without distorting the phases.

In the linked example, the decision for synchronous granular synthesis and PulseDivider probably happened for the sake of simplicity in a study context. It could be done in a different way, either by defining rhythms or rhythmic distortions (e.g. taking TDuty, maybe in combo with UGens) and/or producing the multichannel envelopes with DX UGens.

There are examples with the latter in the DX suite help files, although I have not tried that out myself with this live granulation idea; it might be worth it.

Concerning the phase distortion, maybe I don’t understand your argument: the idea of the example is live granulation without buffers and altering the incoming signals. This is independent of whether the granulation is synchronous or asynchronous. However, phase distortion could also be built in via delay modulation on the sources, which, under the hood, again introduces buffering.

Hey, what I mean by phase distortion is not an additional “phase shaping” effect. It’s the fact that if you modulate the trigger frequency of an Impulse-based grain scheduler, the phases of your grain window won’t be linear ramps between 0 and 1 but truncated. What you want for asynchronous granular scheduling is a grain window read by a phase that is linear, runs between 0 and 1, and does not truncate the window shape.

I neither hear nor see a truncation. I’ve adapted the example so that it outputs just the envelopes by feeding it a DC signal. The windows also remain smooth when the pulseFreq is changed.

BTW, a Delay was already built in. Now I’ve set the default params to zero to observe it better.

    // boot with extended resources
    (
    s.options.numWireBufs = 64 * 16;
    s.options.memSize = 8192 * 64;
    s.reboot;
    )

    (
    ~maxNumChannels = 2;
    ~inBus = Bus.audio(s, ~maxNumChannels);
    ~maxDelay = 0.2;
    )

    (
    SynthDef(\granulator_envTest, {
        arg outBus = 0, overallAtt = 0.01, overallRel = 0.1, grainDurInMs = 1,
            pulseFreq = 150, numChannels = 10, channelOffset = 0,
            pos = 0, posDev = 0, delayRange = 0,
            gate = 1, preAmp = 1, limitAmp = 0.1;
        var maxGrainDur = ~maxNumChannels / pulseFreq - 0.001;
        var maxDelay = 20;
        var grainEnv = Env.sine(min(maxGrainDur, grainDurInMs * 0.001));
        var trig = Impulse.ar(pulseFreq);
        // multichannel trigger
        var trigs = { |i|
            PulseDivider.ar(trig, ~maxNumChannels, ~maxNumChannels - 1 - i)
        } ! ~maxNumChannels;
        // multichannel envelope for Grains (multichannel expansion because of trigs)
        var grainEnvGens = EnvGen.ar(grainEnv, trigs);
        // global env, released via the gate arg
        var envGen = EnvGen.ar(Env.asr(overallAtt, 1, overallRel), gate, doneAction: 2);
        // multichannel in signal is wrapped if numChannels < maxNumChannels
        var in = { |i|
            Select.ar(i % numChannels + channelOffset % ~maxNumChannels, In.ar(~inBus, ~maxNumChannels))
        } ! ~maxNumChannels;
        var grains = in * grainEnvGens * envGen * preAmp;
        var sig;
        // core workhorse, each grain gets own position
        // array of stereo signals
        sig = { |i|
            var localPos = (pos.lag(1) + TRand.ar(posDev.neg, posDev, trigs[i])).clip(-1, 1);
            var localDelay = LFDNoise3.ar(0.1).range(0, delayRange.lag(1) * 0.001);
            Pan2.ar(DelayL.ar(grains[i], ~maxDelay, localDelay), localPos)
        } ! ~maxNumChannels;
        // mix to stereo
        Out.ar(outBus, Limiter.ar(Mix(sig), limitAmp))
    }, metadata: (
        specs: (
            grainDurInMs: [1, 20, 3, 0, 0.1],
            pulseFreq: [1, 200, 3, 0, 10],
            pos: [-1, 1, \lin, 0, 0],
            posDev: [0, 0.2, \lin, 0, 0.25],
            delayRange: [0, 10, 2, 0, 0],
            numChannels: [1, ~maxNumChannels, \lin, 1, ~maxNumChannels],
            channelOffset: [0, ~maxNumChannels - 1, \lin, 1, 0],
            preAmp: [0, 3, \lin, 0, 0.5],
            limitAmp: [0, 0.5, 2, 0, 0.1]
        )
    )
    ).add;
    )

    (
    SynthDescLib.global[\granulator_envTest].makeGui;
    s.scope;
    s.freqscope;
    )

    // start gui

    // DC input for granulation
    x = { Out.ar(~inBus, DC.ar(1 ! ~maxNumChannels)) }.play

    x.free

    s.scope

    // stop granulator

Hey, thanks for providing the example, but the trigger frequency here isn’t modulated by an LFO, nor are asynchronous triggers provided in some other way. If you set it via the GUI, it’s synchronous.

Even if it’s modulated by an LFO (or even a fast LFO, param pulseFreqModFreq), I don’t observe any truncation at all. See the version below.

Might it be that your truncation case relates to a situation with specific circumstances different from the ones here?

    // boot with extended resources
    (
    s.options.numWireBufs = 64 * 16;
    s.options.memSize = 8192 * 64;
    s.reboot;
    )

    (
    ~maxNumChannels = 2;
    ~inBus = Bus.audio(s, ~maxNumChannels);
    ~maxDelay = 0.2;
    )

    (
    SynthDef(\granulator_envTest_2, {
        arg outBus = 0, overallAtt = 0.01, overallRel = 0.1, grainDurInMs = 1,
            pulseFreq = 150, pulseFreqDev = 0.1, pulseFreqModFreq = 1, numChannels = 10, channelOffset = 0,
            pos = 0, posDev = 0, delayRange = 0,
            gate = 1, preAmp = 1, limitAmp = 0.1;
        var maxGrainDur = ~maxNumChannels / pulseFreq - 0.001;
        var maxDelay = 20;
        var grainEnv = Env.sine(min(maxGrainDur, grainDurInMs * 0.001));
        // trigger rate modulated by a sine LFO
        var trig = Impulse.ar(pulseFreq * SinOsc.ar(pulseFreqModFreq).range(1 - pulseFreqDev, 1 + pulseFreqDev));
        // multichannel trigger
        var trigs = { |i|
            PulseDivider.ar(trig, ~maxNumChannels, ~maxNumChannels - 1 - i)
        } ! ~maxNumChannels;
        // multichannel envelope for Grains (multichannel expansion because of trigs)
        var grainEnvGens = EnvGen.ar(grainEnv, trigs);
        // global env, released via the gate arg
        var envGen = EnvGen.ar(Env.asr(overallAtt, 1, overallRel), gate, doneAction: 2);
        // multichannel in signal is wrapped if numChannels < maxNumChannels
        var in = { |i|
            Select.ar(i % numChannels + channelOffset % ~maxNumChannels, In.ar(~inBus, ~maxNumChannels))
        } ! ~maxNumChannels;
        var grains = in * grainEnvGens * envGen * preAmp;
        var sig;
        // core workhorse, each grain gets own position
        // array of stereo signals
        sig = { |i|
            var localPos = (pos.lag(1) + TRand.ar(posDev.neg, posDev, trigs[i])).clip(-1, 1);
            var localDelay = LFDNoise3.ar(0.1).range(0, delayRange.lag(1) * 0.001);
            Pan2.ar(DelayL.ar(grains[i], ~maxDelay, localDelay), localPos)
        } ! ~maxNumChannels;
        // mix to stereo
        Out.ar(outBus, Limiter.ar(Mix(sig), limitAmp))
    }, metadata: (
        specs: (
            grainDurInMs: [1, 20, 3, 0, 0.1],
            pulseFreq: [1, 200, 3, 0, 10],
            pulseFreqDev: [0, 0.2, 0, 0, 0],
            pulseFreqModFreq: [0, 50, 0, 0, 0],
            pos: [-1, 1, \lin, 0, 0],
            posDev: [0, 0.2, \lin, 0, 0.25],
            delayRange: [0, 10, 2, 0, 0],
            numChannels: [1, ~maxNumChannels, \lin, 1, ~maxNumChannels],
            channelOffset: [0, ~maxNumChannels - 1, \lin, 1, 0],
            preAmp: [0, 3, \lin, 0, 0.5],
            limitAmp: [0, 0.5, 2, 0, 0.1]
        )
    )
    ).add;
    )

    (
    SynthDescLib.global[\granulator_envTest_2].makeGui;
    s.scope;
    s.freqscope;
    )

    // start gui

    // DC input for granulation
    x = { Out.ar(~inBus, DC.ar(1 ! ~maxNumChannels)) }.play

    x.free

    s.scope

    // stop granulator

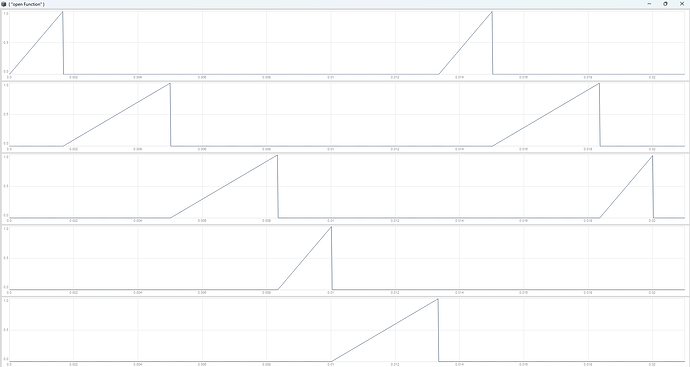

Here you can see the truncation of your windows while being modulated. You want to see windows of different lengths distributed across the channels, but the phase should be linear and between 0 and 1, so the window shape should stay consistent while the trigger frequency is being modulated. The modulation of the trigger frequency should only change the distribution and the duration of the linear phases between 0 and 1, not the shape of the window:

    (
    var multiChannelTrigger = { |numChannels, trig|
        numChannels.collect{ |chan|
            PulseDivider.ar(trig, numChannels, numChannels - 1 - chan);
        };
    };

    {
        var numChannels = 4;
        var tFreq, maxGrainDur, trigMod, trig, triggers;

        trigMod = SinOsc.ar(\tFreqMF.kr(50));
        tFreq = \tFreq.kr(150) * (2 ** (trigMod * \tfreqMD.kr(2)));
        trig = Impulse.ar(tFreq);
        triggers = multiChannelTrigger.(numChannels, trig);

        maxGrainDur = numChannels / tFreq;

        EnvGen.ar(Env.sine(maxGrainDur), triggers);
    }.plot(0.04);
    )

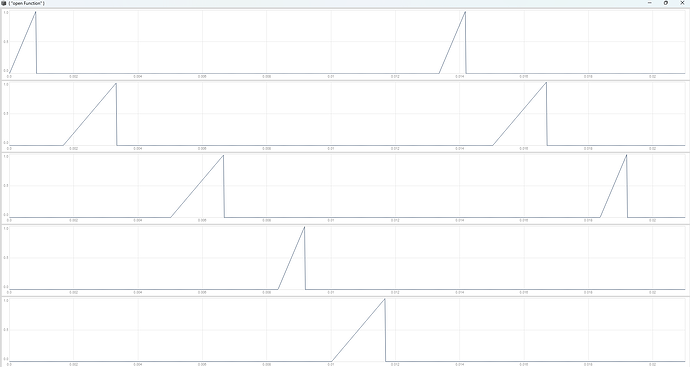

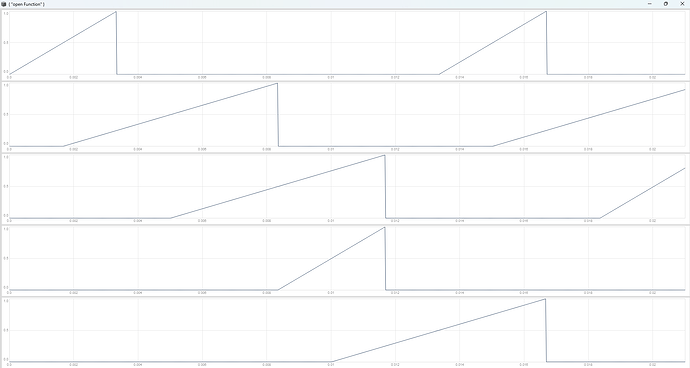

The desired output is a set of linear ramps between 0 and 1, distributed round-robin across the channels (one event after the other, no gaps, no truncation). These linear phases drive your window function:

Of course you can adjust your window duration with a global overlap param, here at 0.5

and here with overlap at 2:

I have been testing a lot with Duty (the results have been inaccurate, read the whole thread: https://scsynth.org/t/sub-sample-accurate-granulation-with-random-periods/).

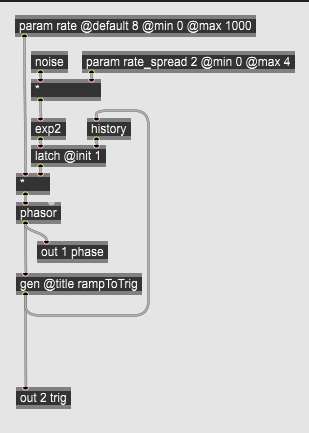

The only way is a phasor from which you derive a trigger, and which latches its own modulation with that trigger in a single-sample feedback loop, to get linear ramps between 0 and 1 with random periods:

Here is my simple gen implementation, which works nicely:
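The gen patch itself is not reproduced in this thread. As a rough stand-in, here is a language-side sketch in plain sclang (no server, and not the gen implementation) that models the idea under simple assumptions: the rate is re-read only when the phasor wraps, so each ramp keeps one constant slope. The names ~latchedPhasor, rateFunc and ~ramp are hypothetical, and carrying the overshoot across the wrap unchanged is a simplification of true sub-sample accuracy.

```supercollider
(
// Language-side model only: a phasor whose slope is re-read ("latched")
// exactly when the phasor wraps, so every ramp is linear between 0 and 1.
// ~latchedPhasor and its arguments are hypothetical names for this sketch.
~latchedPhasor = { |numSamples = 2000, sampleRate = 48000, rateFunc|
	var phase = 0.0;
	var slope = rateFunc.value / sampleRate;      // slope held for the current period
	Array.fill(numSamples, {
		var out = phase;
		phase = phase + slope;
		if(phase >= 1.0) {
			phase = phase - 1.0;                  // wrap, keep the overshoot
			slope = rateFunc.value / sampleRate;  // a new rate is latched only here
		};
		out
	});
};

// assumed modulation: a random period per ramp, 150 Hz scaled by a ratio between 0.5 and 2
~ramp = ~latchedPhasor.(2000, 48000, { 150 * (2 ** 1.0.rand2) });
)
```

Evaluating `~ramp.plot` afterwards should show ramps of different lengths, each one a straight line, since the slope never changes in the middle of a period.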

Hey, I just want to make sure that my posts are read as being driven by curiosity. They are not meant to denigrate your great work.

No worries, let’s look at the data.

The envelopes are blurred in the plot of your multichannel trigger example, as it is expected. They are not truncated – that would likely produce audible clicks.

But you’re constructing an example with extreme values: the frequency is changing rapidly (at a rate of 50 Hz!) between 37.5 Hz (150 * (2 ** -2)) and 600 Hz (150 * (2 ** 2)). Let’s isolate that modulation:

    {
        var trigMod = SinOsc.ar(\tFreqMF.kr(50));
        \tFreq.kr(150) * (2 ** (trigMod * \tfreqMD.kr(2)))
    }.plot(0.1)

That, of course, causes extremely blurred envelopes. In addition, you’re using kr which makes the envelopes edgy – with ar they are still blurred, but smoothly.

In the last example I gave above, there’s a trigger rate deviation of at most 10 % up or down! No truncation, no aliasing, no distortion from the envelopes. In probably most of my use cases of granulation I would either change the rate rather smoothly with server-triggered granulation or use language-triggered granulation, where I can easily change the envelope from grain to grain anyway.

So, even with server-triggered granulation, I might have mildly blurred envelopes, but they are smooth anyway (most important!) and in combination with arbitrary sources (possibly recordings or live input) that is not an issue at all. It’s just what you expect from that implementation and I’m fine with it soundwise.

You can also see this effect in some plot examples of DX suite, e.g. DXEnvFan. I would rather not keep the modulation values for each envelope. If I wanted to, I could probably build in a Latch construct.

To sum up, I think it’s about the use case and what you expect!

There’s no canonical way of making grain envelopes, just as there’s no canonical way of doing synthesis in general.

I see that you have put a lot of effort into this topic, so you might regularly deal with setups where you just prefer a different convention. I can well imagine that there could be great differences with exactly pitched input signals, especially synthesized sources.

Hey, thanks for your message.

With all my investigations I try to establish objective criteria which can be evaluated.

Some of these might be:

- sub-sample accurate events derived from a continuous phasor’s ramp between 0 and 1 don’t produce aliasing at high trigger rates

- if your ramps are linear and between 0 and 1, your window shapes won’t be distorted but only scaled in duration, depending on the current slope of your scheduling phasor

- if you use a continuous phasor as your grain scheduler, you know how much time has elapsed since the phasor’s last wrap and how much time remains until its next wrap; every change in rate is represented by the phasor’s slope. With no additional user input, the duration of the grain windows should exactly match the period of the grain scheduling phasor. That’s impossible with trigger-based scheduling (side note: under the hood, Impulse is also a ramp from which triggers are derived, as are most clocks in DSP)

- if you use stateless functions driven by a ramp between 0 and 1 for your grain windows, their output depends only on the instantaneous value of the ramp signal. A segmented envelope, by contrast, is divided into discrete time segments, and its output depends on which segment is currently active. The shape of a stateless function (skew, width etc.) can be modulated at audio rate.
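To illustrate the last point, here is a minimal sketch of a stateless window, assuming a Hann shape (the name ~hann is hypothetical). It is just a function of the phase, so it can be checked on the language side with plain numbers:

```supercollider
(
// Stateless Hann window: the output depends only on the current phase (0..1).
// The name ~hann is an assumption for this sketch.
~hann = { |phase| 0.5 - (0.5 * cos(phase * 2pi)) };

// language side: evaluate the window at five phases of one linear ramp
~hannValues = ~hann.((0..4) / 4).round(0.001);
~hannValues.postln;   // rises from 0 to 1 at phase 0.5 and falls back to 0

// the same function also accepts a UGen as its phase, e.g. a free-running ramp:
// { ~hann.(Phasor.ar(0, 150 / SampleRate.ir, 0, 1)) }.plot(0.02);
)
```

Because nothing is stored between calls, scaling the duration of the ramp scales the window without changing its shape.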

In some circumstances it might help to implement a well-thought-out threshold which limits the user’s input to a range that keeps the output predictable, independent of what the user enters. In my opinion this threshold should not limit the user’s input to an “unreasonably” small range. For example, in my granulation thread I have described one possible threshold for the overlap param, as a reasonable tradeoff when working with overlapping phases with random periods and the round-robin method.

I would both agree and disagree with that. In SC there are currently two canonical ways of doing granulation: one is based on Impulse, the other on events from the language via Pbind or a Routine. Neither of them fulfils all the criteria above. My motivation here is not to say how things should be done, but to share the outcome of my investigations, which might have a few objective advantages over these two approaches and could therefore lead to unexplored synthesis territory. After reading the new submission call for speculative sound synthesis, I think that my approach of questioning established methods is a reasonable thing to do.

One side story here: a well-established way of resampling audio material in SC is to use a combination of Impulse and Latch. The aliasing created here comes not from the downsampling itself but from the fact that this sample-and-hold implementation is not sub-sample accurate.

From a musical perspective I can think of two use cases (the list is definitely incomplete) where you want a grain scheduler with random periods, which could mean modulating the trigger rate or creating sequences of random periods in a different way, for example a sequence of random durations with Duty.

1.) A small amount of randomness can interrupt the periodicity of the metallic comb response, which gives a “more pleasing” musical result. With this in place you can compose the initial soundfile out of overlapping grains without adding too many artifacts; sub-sample accuracy also helps here.

2.) A higher amount of randomness can lead to all sorts of noisy textures.

The one I’m mostly interested in is bypassing the separation of microsound and regular event sequencing in order to move continuously between rhythm and tone, which needs the higher modulation depths. Interestingly enough, this dates back to the beginning of computer music, but with recent developments in DAWs, in particular Live 12, the focus has shifted even more towards the manipulation of MIDI data, which makes this separation even more pronounced.

Currently I can’t see a reason why, with a suitable implementation (for example, a phasor that latches its own modulation input with a derived trigger in a single-sample feedback loop), only a slight amount of modulation should lead to linear phases but a higher amount should not. One possible threshold, when the modulation is implemented as 2 ** (mod * index), could be index <= 1 (min: 0.5 and max: 2). But this needs further investigation.
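The rate ratios behind that threshold can be worked out on the language side. This is a small sketch assuming the modulator swings between -1 and 1; ~ratioRange is a hypothetical helper name:

```supercollider
(
// Rate ratios reached by 2 ** (mod * index) when mod swings between -1 and 1.
// ~ratioRange is a hypothetical helper name.
~ratioRange = { |index| 2.0 ** [index.neg, index] };

~ratioRange.(1).postln;           // index 1: ratios from 0.5 to 2
(150 * ~ratioRange.(2)).postln;   // index 2 at 150 Hz: 37.5 Hz to 600 Hz
)
```

With index 1 the trigger rate stays within one octave either side of the base rate, while index 2, as in the plotted example, spans four octaves in total.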

The only thing one could say is: I’m just using a slight amount of modulation, and the slight distortion of my windows isn’t important to me.