Pretty late reply here, but this is a common issue and the thread mostly devolved into hardware troubleshooting rather than what the solutions should be in general. Basically, there are only two simple solutions: master clock on the client or master clock on the server.

The former has been explained in some threads here (search for TempoClick, for example): it issues “clicks”, meaning triggers, on a server bus, which you then use to trigger things on the server as appropriate. It’s also more or less what the usual “synth spam” via pattern.play does, i.e. the synths are short-lived, so the long-term master clock of the composition is essentially on the client.
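To make the client-side case concrete, here is a minimal sketch of the “synth spam” variant, assuming the default server is booted and using only the built-in \default SynthDef. The names ~clock and ~player are just illustrative. The TempoClock on the client is the only timing authority; each event spawns a short-lived synth.

```supercollider
(
// Client-side master clock: 2 beats per second (120 bpm).
~clock = TempoClock(2);

// Each event creates a new short-lived \default synth on the server.
// Timing is decided entirely by ~clock on the client.
~player = Pbind(
    \instrument, \default,
    \degree, Pseq([0, 2, 4, 7], inf),
    \dur, 0.5,
    \legato, 0.3
).play(~clock);
)

// Changing the tempo on the client changes when future synths are spawned.
~clock.tempo = 3;

// Stop when done.
~player.stop;
```

Nothing on the server needs to know the tempo here, which is why this approach stops working well once a synth has to live across many beats.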

If the synths need to be longer-lived, for example a “manual” turntable scratch effect that varies the play rate of a synth under direct user control (e.g. MouseX), then you might need to have the master clock on the server. This means that you send SendTrig events from the server to the client, and you no longer play patterns; instead you convert them with asStream and pull next values from them in an OSCdef, then use those values to, for example, set something on the server. This OSCdef basically replaces the EventStreamPlayer with an “on demand” version, where the demands now come from the server. (If this is not clear enough, I can post some code… just ask.)
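A rough sketch of that server-clock setup, under some assumptions: the SynthDef names \serverClock and \voice, the OSCdef key \pullNext, and the variables ~voice, ~freqs and ~clockSynth are all made up for illustration, and a plain Impulse stands in for whatever actually drives your timing on the server.

```supercollider
(
s.waitForBoot {
    // Server-side master clock: Impulse ticks at `rate` Hz.
    // A MouseX or similar could modulate `rate` here.
    SynthDef(\serverClock, { |rate = 2|
        var tick = Impulse.kr(rate);
        // Each tick sends a /tr message to the client
        // (trigger id 0, value = running tick count).
        SendTrig.kr(tick, 0, PulseCount.kr(tick));
    }).add;

    // A long-lived synth whose parameters get set on every tick.
    SynthDef(\voice, { |out = 0, freq = 440, amp = 0.1|
        var sig = SinOsc.ar(Lag.kr(freq, 0.02)) * amp;
        Out.ar(out, sig ! 2);
    }).add;

    s.sync;

    ~voice = Synth(\voice);

    // Instead of pattern.play, make a stream and pull from it on demand.
    ~freqs = Pseq([60, 62, 64, 67, 72].midicps, inf).asStream;

    // msg arrives as [/tr, nodeID, triggerID, value].
    OSCdef(\pullNext, { |msg|
        var freq = ~freqs.next;
        if(freq.notNil) { ~voice.set(\freq, freq) };
    }, '/tr', s.addr);

    ~clockSynth = Synth(\serverClock, [\rate, 4]);
};
)

// Cleanup.
~clockSynth.free; ~voice.free; OSCdef(\pullNext).free;
```

Note that the set message in the OSCdef is sent without a timestamp, so each change lands one client-server round trip after the tick that requested it.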

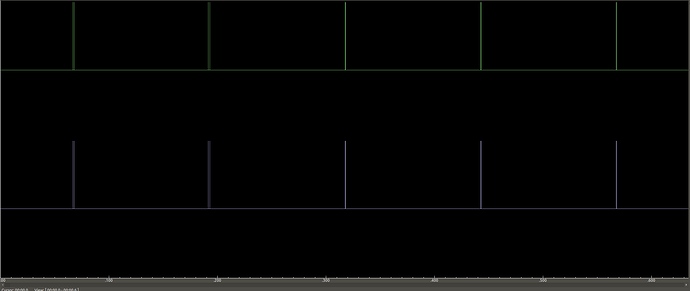

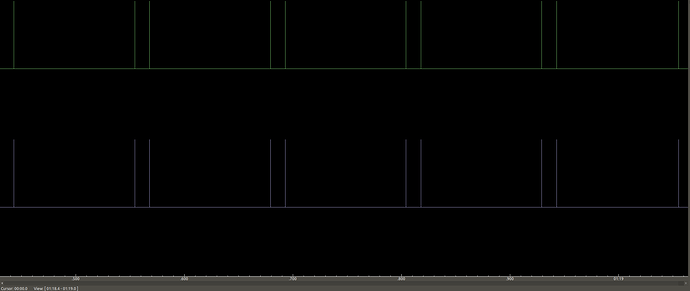

The biggest issue with this is client-server latency (s.latency). With the default settings you have 0.2 s of latency, which is pretty huge in some cases. If you lower it, you’ll get some “late” messages. Typically 10 to 80 milliseconds of jitter is to be expected on a zero-latency setting, at least on a Windows box.
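For reference, a small sketch of how the latency setting is adjusted and used, assuming the default server `s` is already booted. The value 0.05 is only an example, not a recommendation, and ~x is an illustrative variable name.

```supercollider
// Assumes the default server `s` is already booted.
s.latency.postln;     // 0.2 unless it has been changed

// Smaller value: more responsive, but more risk of "late" bundles.
s.latency = 0.05;

// s.bind collects the messages into one bundle that is
// timestamped s.latency seconds in the future.
s.bind { ~x = Synth(\default, [\freq, 440]) };

// Later, release it the same way.
s.bind { ~x.release };
```

If the value is too small for your machine, the server posts “late” warnings in the post window, which is a quick way to find out how low you can go.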

I suppose you could try to concoct some kind of two-way synchronization by combining the above somehow, but it’s going to be harder. While stuff like NTP (which does that to some extent, although there it has ultimate atomic time sources on the network to rely on) is wonderful between computers, it’s probably not very feasible to implement it in real time so as to sync client and server clocks, even at a virtual level. If you look at some VST-related discussions, having to choose the master clock is basically how stuff is done in the world of audio.

TempoBusClock is a fake solution for this kind of synchronization, by the way. If you look at its trivial source, it does nothing in terms of synchronization except set a tempo parameter server-side, which your synths are supposed to deal with. That’s not real synchronization if the clocks drift apart.