Hello

Is there any framework, script, or app that translates the sounds produced in SuperCollider into a graphic score? I would like to be able to use SuperCollider with an orchestra, expressing in a conventional score what is expressed in an algorithmic composition. I would also welcome ideas for doing this with SuperCollider and another programming language, such as Python, JavaScript, or whatever else works.

Regards

Sounds like the start of a PhD project.

You might consider animated or real-time notation: the Decibel ScorePlayer comes to mind, along with the work of Richard Hoadley (https://rhoadley.net/) and INScore.

How you map from sound to score will have a big impact on the piece, and it would be worth considering that mapping as part of the piece in itself.
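As one very plain example of such a mapping, here is a Python sketch (the function names are hypothetical, not from any existing tool) that snaps each sound event's frequency to the nearest equal-tempered pitch while keeping the deviation in cents, and snaps onset times to a beat grid. The tuning reference, tempo, and grid size are assumptions, and choosing them is exactly the kind of compositional decision mentioned above.

```python
import math

# Pitch-class names using sharps; a real score might prefer flats depending on context.
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def freq_to_note(freq_hz, a4_hz=440.0):
    """Map a frequency to (note name with octave, deviation in cents).

    Uses 12-tone equal temperament with A4 = a4_hz (MIDI note 69).
    The cents value records how far the sound lies from the written pitch,
    which a performer-facing score could show as an arrow or a text marking.
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    midi_float = 69 + 12 * math.log2(freq_hz / a4_hz)
    midi = round(midi_float)
    cents = round((midi_float - midi) * 100)
    name = NOTE_NAMES[midi % 12] + str(midi // 12 - 1)
    return name, cents


def quantize_onset(onset_s, tempo_bpm=60, subdivisions=4):
    """Snap an onset time in seconds to the nearest 1/subdivisions of a beat."""
    beats = onset_s * tempo_bpm / 60.0
    return round(beats * subdivisions) / subdivisions


if __name__ == "__main__":
    for f in (440.0, 261.63, 450.0):
        print(f, freq_to_note(f))
    print(quantize_onset(1.13, tempo_bpm=90))
```

With these defaults, 450 Hz is written as A4 with a +39 cent deviation; whether that deviation is notated, rounded away, or becomes a quarter-tone accidental is up to the composer.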

For non-real-time work (sound that has already been produced), it seems useful to take a spectrogram from one of the following applications as the basis for the score:

- GRM Acousmographe (free)

- Sonic Visualiser (free)

- Audacity (free)

- REAPER (can effectively be used for free)

- iZotope RX

- Adobe Audition
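If you would rather script the analysis of a recorded SuperCollider render than read it off a spectrogram, a much simpler descriptor, the RMS amplitude envelope, can be extracted with Python's standard library alone. This is a swapped-in technique, not a spectrogram, and a sketch under the assumption that the output was recorded (for example with `Server.record`) and saved or converted to 16-bit PCM WAV; SuperCollider's default recording format may differ, so a conversion step may be needed. The function name `rms_envelope` is hypothetical.

```python
import array
import math
import sys
import wave


def rms_envelope(path, frame_ms=50.0):
    """Return a list of (time_s, rms) pairs for a 16-bit PCM WAV file.

    Assumes 16-bit PCM input (hypothetical helper, not an existing tool).
    Multi-channel audio is averaged to mono. RMS is normalised so that
    a full-scale square wave gives roughly 1.0.
    """
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        channels = wf.getnchannels()
        rate = wf.getframerate()
        samples = array.array("h")
        samples.frombytes(wf.readframes(wf.getnframes()))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    # Average interleaved channels down to mono
    mono = [sum(samples[i:i + channels]) / channels
            for i in range(0, len(samples), channels)]
    hop = max(1, int(rate * frame_ms / 1000))
    envelope = []
    for start in range(0, len(mono), hop):
        block = mono[start:start + hop]
        rms = math.sqrt(sum(x * x for x in block) / len(block)) / 32768.0
        envelope.append((start / rate, rms))
    return envelope
```

The resulting (time, level) pairs could drive the thickness of a line or a dynamic marking in a graphic score.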

For real-time sound processing via on-the-fly code evaluation, descriptive graphics generated on a display seem to work better than spectrograms.

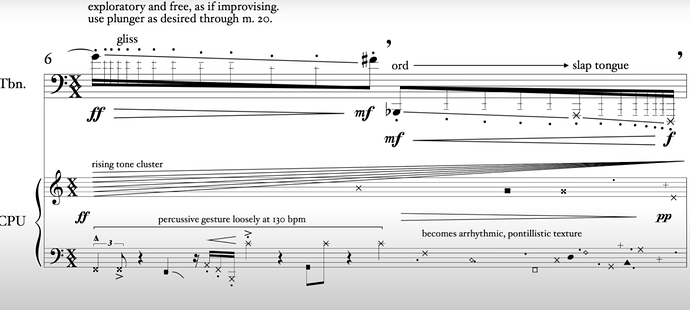

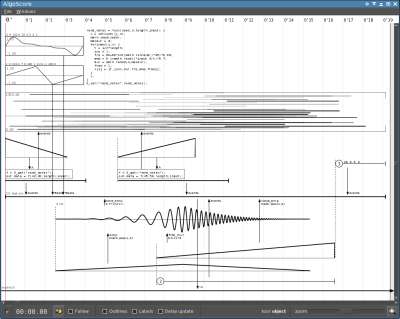

I’m looking for something like this screenshot. It is from AlgoScore, which is no longer maintained. It was made for Csound, and its process was the reverse of what I am looking for: I want to go from SuperCollider to graphics.
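A minimal SuperCollider-to-graphics direction can be sketched outside SuperCollider, assuming the piece's events (onset, duration, pitch, amplitude) have first been logged to a file or list; how that logging is done is left open here. The `Event` class and `events_to_svg` function are hypothetical; each event becomes a rectangle in an SVG file, with time on the x axis, pitch on the y axis, and amplitude as opacity.

```python
from dataclasses import dataclass


@dataclass
class Event:
    onset: float     # seconds
    duration: float  # seconds
    midi: float      # pitch as a (possibly fractional) MIDI number
    amp: float       # 0..1


def events_to_svg(events, px_per_sec=100, px_per_semitone=4,
                  low_midi=36, high_midi=96):
    """Draw each event as a rectangle: x = time, y = pitch, opacity = amplitude."""
    width = max((e.onset + e.duration for e in events), default=0) * px_per_sec
    height = (high_midi - low_midi) * px_per_semitone
    parts = [f'<svg xmlns="http://www.w3.org/2000/svg" '
             f'width="{width:.0f}" height="{height:.0f}">']
    for e in events:
        x = e.onset * px_per_sec
        # Higher pitches are drawn nearer the top of the page
        y = (high_midi - e.midi) * px_per_semitone
        w = e.duration * px_per_sec
        parts.append(f'<rect x="{x:.1f}" y="{y:.1f}" width="{w:.1f}" '
                     f'height="{px_per_semitone}" fill="black" '
                     f'fill-opacity="{e.amp:.2f}"/>')
    parts.append("</svg>")
    return "\n".join(parts)


if __name__ == "__main__":
    score = [Event(0.0, 1.0, 60, 0.8), Event(0.5, 2.0, 67, 0.4)]
    print(events_to_svg(score))
```

The SVG can be opened in a browser or a vector editor and annotated by hand; a richer version might add a time ruler or per-instrument lanes.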

Sorry, I don’t have any helpful information for you.

AlgoScore, which you mentioned above, has the kind of features I would like to see in SuperCollider. If something like it existed, I would use it.

I also apologize in advance, because I would like to mention the following off-topic examples:

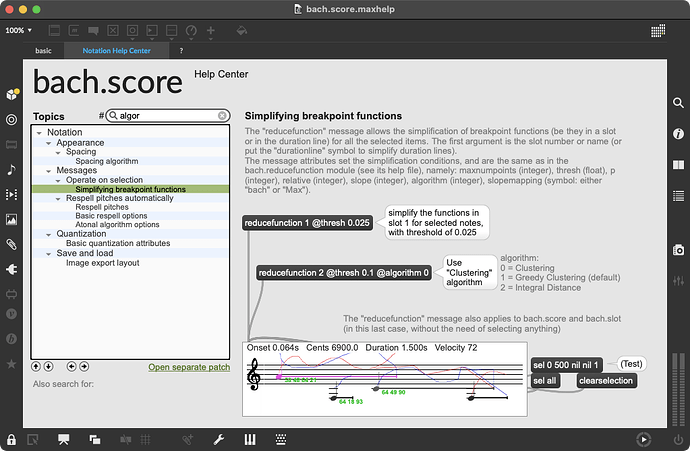

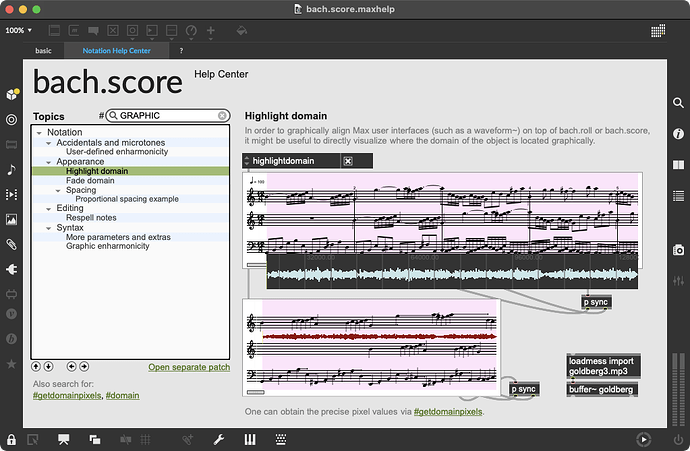

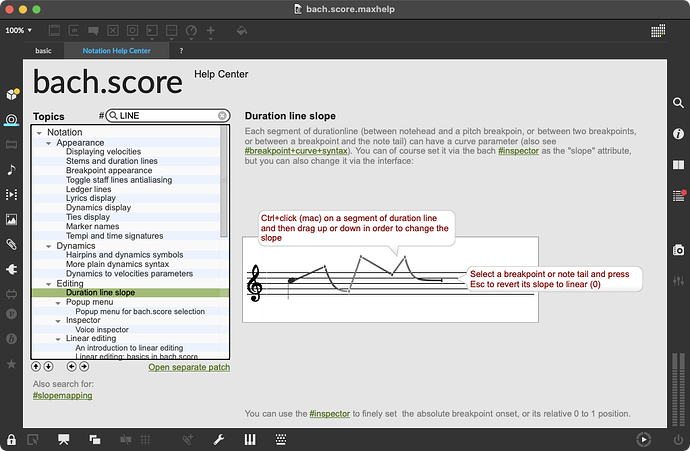

- There is something slightly similar to AlgoScore in Max, I think, but it is more of a score notator. Inserting code snippets seems to be possible, though it can be a bit time-consuming because each annotation appears on a single line. I have always dreamed that SuperCollider would have such a feature.

- Otherwise, Dorico is an alternative. Its graphics import is very good, and you can also use horizontal and vertical lines. Inserting multi-line code snippets is also easy via staff text and system text.

Anyway, thanks for asking, and sorry for listing unrelated things. I look forward to reading other users’ answers!

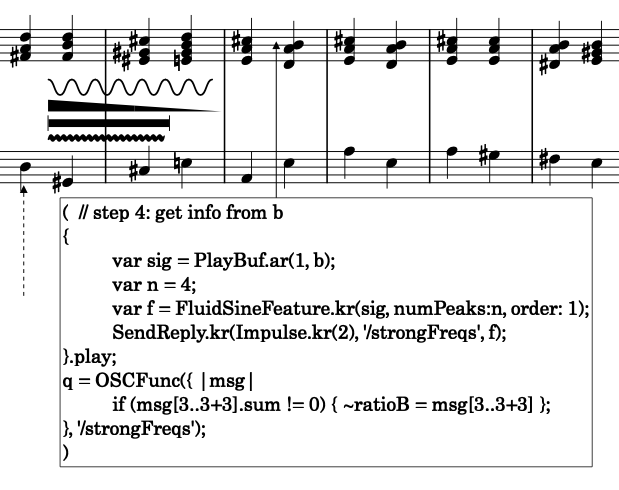

I’ve been working in this area for some time. My tool of choice for visualization has been Processing; my visual approach is not very “canonical”, but musicians understand it perfectly!

Check this:

Here, the sound generation is done in openFrameworks (C++) through the ofxSuperCollider library, and amplitude and pitch data are sent to Processing over OSC for visualization.
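The OSC link itself is simple enough to illustrate without any libraries. The Python sketch below is not the setup described above (which used openFrameworks); it encodes an OSC 1.0 message carrying amplitude and pitch as big-endian float32 arguments and sends it over UDP. The address `/analysis` and port 12000 are hypothetical and must match whatever the Processing sketch listens on.

```python
import socket
import struct


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *floats: float) -> bytes:
    """Encode an OSC 1.0 message whose arguments are all float32."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", v) for v in floats)  # big-endian float32
    return (_osc_pad(address.encode("ascii"))
            + _osc_pad(type_tags.encode("ascii"))
            + payload)


def send_amp_pitch(amp, pitch_hz, host="127.0.0.1", port=12000):
    """Send one /analysis message (hypothetical address and port) over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/analysis", amp, pitch_hz), (host, port))
```

On the receiving side, any OSC library for Processing that accepts float arguments should be able to read these values and map them to graphics.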

Also, check out one of the concerts made using this system: Contorns.

The musicians read the score on a TV placed on the floor. In one of the sections they switch to a traditional score, as we found the graphic format too difficult for them to read (shame on me!).

There’s also fosc (GitHub: n-armstrong/fosc), a SuperCollider API for generating musical notation in LilyPond.
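To give a flavour of what generating LilyPond from code involves, here is a tiny Python sketch that turns (MIDI note, duration) pairs into LilyPond input. It is not fosc's API (fosc works from SuperCollider and is far more complete); the function names are hypothetical, and the sketch ignores rests, ties, meters, and enharmonic spelling.

```python
# Pitch names in LilyPond's default (Dutch) input language, using sharps.
LY_NAMES = ["c", "cis", "d", "dis", "e", "f", "fis", "g", "gis", "a", "ais", "b"]


def midi_to_lily(midi: int) -> str:
    """Absolute-mode LilyPond pitch: c' is middle C (MIDI 60)."""
    name = LY_NAMES[midi % 12]
    octave = midi // 12 - 4  # 0 means the unmarked octave, c (MIDI 48) up to b
    if octave > 0:
        return name + "'" * octave
    return name + "," * (-octave)


def events_to_lily(events):
    """events: list of (midi, duration), duration being a LilyPond value like 4 or 8."""
    notes = " ".join(f"{midi_to_lily(m)}{d}" for m, d in events)
    return '\\version "2.24.0"\n{ ' + notes + " }\n"


if __name__ == "__main__":
    print(events_to_lily([(60, 4), (67, 8), (69, 8), (72, 2)]))
```

Saving the output as `score.ly` and running `lilypond score.ly` produces a PDF; this is where an algorithmic process could hand off to a conventional part for orchestral players.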

It looks nice. I’ve worked with openFrameworks for a while, but learning it takes a lot of time that I don’t have and distracts me from what I really care about, which is sound and music. I’ve seen Eli Fieldsteel’s works, but as I understand it, the notation of the SuperCollider part is very laborious, with a pedal.

Did you find any other solution to this problem?

I need the same thing…