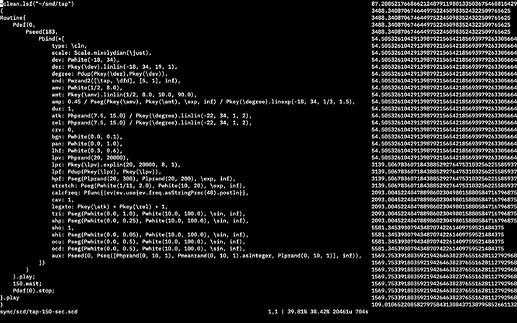

Thanks so much to @scztt and all those in attendance for a great Meetup yesterday! Someone asked about the noisy synth I showed briefly; here’s the SynthDef:

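```
SynthDef(\xFeedNoise, {
    // 33 control values read from one buffer (the mappings below assume 0-1)
    var bufnum = \bufnum.kr;
    var val = FluidBufToKr.kr(bufnum, 0, 33);

    // four oscillators, each with its own frequency and level
    var sin = SinOsc.ar(val[1].linexp(0, 1, 1, 12000), mul: val[2]);
    var saw = VarSaw.ar(val[3].linexp(0, 1, 1, 12000), width: val[4], mul: val[5]);
    var square = LFPulse.ar(val[6].linexp(0, 1, 1, 12000), width: val[7], mul: val[8] * 2, add: -1);
    var tri = LFTri.ar(val[9].linexp(0, 1, 1, 12000), mul: val[10]);

    // val[0] crossfades sin -> saw -> square -> tri
    var osc = SelectX.ar(val[0].linlin(0, 1, 0, 3), [sin, saw, square, tri]);

    // two independent noise sources, each crossfading stepped / linear / quadratic random
    var noise0 = SelectX.ar(val[11].linlin(0, 1, 0, 2), [
        LFNoise0.ar(val[12].linlin(0, 1, 0.2, 10)),
        LFNoise1.ar(val[13].linlin(0, 1, 0.2, 10)),
        LFNoise2.ar(val[14].linlin(0, 1, 0.2, 10))
    ]);
    var noise1 = SelectX.ar(val[15].linlin(0, 1, 0, 2), [
        LFNoise0.ar(val[16].linlin(0, 1, 0.2, 10)),
        LFNoise1.ar(val[17].linlin(0, 1, 0.2, 10)),
        LFNoise2.ar(val[18].linlin(0, 1, 0.2, 10))
    ]);

    var sig, sigL, sigR;
    // stereo feedback from the previous block (written by LocalOut below)
    var local = LocalIn.ar(2);

    // left: frequency from osc, its own feedback (local[0]) and noise0;
    // width from the right channel's feedback (local[1])
    sigL = VarSaw.ar(
        freq: osc.linexp(-1, 1, 20, 10000) * local[0].linlin(-1, 1, 0.01, 200)
            + (val[19].linexp(0, 1, 80, 2000) * noise0.range(1, val[20].linlin(0, 1, 2, 10))),
        width: local[1].linlin(-1, 1, 0.01, 0.8),
        mul: val[21]
    );
    // resonant lowpass, soft-clipped, plus a comb-filter echo (max 0.25 s)
    sigL = RLPF.ar(sigL, val[22].linexp(0, 1, 20, 20000), val[23].linlin(0, 1, 2.sqrt, 0.01)).tanh;
    sigL = sigL + CombC.ar(sigL, 0.25, val[24].linexp(0, 1, 0.01, 0.25).lag(0.01), val[25]);

    // right: mirror of the left channel with the feedback roles swapped
    sigR = VarSaw.ar(
        freq: osc.linexp(-1, 1, 20, 10000) * local[1].linlin(-1, 1, 0.01, 200)
            + (val[26].linexp(0, 1, 80, 2000) * noise1.range(1, val[27].linlin(0, 1, 2, 10))),
        width: local[0].linlin(-1, 1, 0.01, 0.8),
        mul: val[28]
    );
    sigR = RLPF.ar(sigR, val[29].linexp(0, 1, 20, 20000), val[30].linlin(0, 1, 2.sqrt, 0.01)).tanh;
    // note: delay time uses linlin here, where the left channel uses linexp
    sigR = sigR + CombC.ar(sigR, 0.25, val[31].linlin(0, 1, 0.01, 0.25).lag(0.01), val[32]);

    sig = [sigL, sigR];
    LocalOut.ar(sig); // feed both channels back into LocalIn

    // remove DC, soft-clip, attenuate by 6 dB
    sig = LeakDC.ar(sig).tanh * -6.dbamp;
    // gated ASR envelope; doneAction 2 frees the synth after release
    sig = sig * Env.asr().ar(2, \gate.kr(1));

    Out.ar(\out.kr(), sig * \amp.kr(0));
}).add;
```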
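Nearly every control in the SynthDef is a normalized buffer value rescaled with `linlin` or `linexp`. The Python sketch here mirrors those two sclang methods, assuming their default behaviour of clipping the input to its range; it's only meant to show why the frequencies use `linexp` (equal moves on the pad give equal pitch *ratios*), not to reproduce SuperCollider exactly.

```python
def linlin(x, in_min, in_max, out_min, out_max):
    """Linear rescale; input clipped to [in_min, in_max] (assumed SC default)."""
    x = min(max(x, in_min), in_max)
    return (x - in_min) / (in_max - in_min) * (out_max - out_min) + out_min


def linexp(x, in_min, in_max, out_min, out_max):
    """Linear input range -> exponential output range.

    out_min and out_max must be nonzero and share a sign.
    """
    x = min(max(x, in_min), in_max)
    return out_min * (out_max / out_min) ** ((x - in_min) / (in_max - in_min))


if __name__ == "__main__":
    # val[1].linexp(0, 1, 1, 12000): the midpoint lands near sqrt(12000), about 109.5 Hz
    print(linexp(0.5, 0, 1, 1, 12000))
    # val[23].linlin(0, 1, 2.sqrt, 0.01): reversed output range, so higher val = lower rq
    print(linlin(0.5, 0, 1, 2 ** 0.5, 0.01))
```

With `linexp`, stepping the input from 0.25 to 0.5 multiplies the frequency by the same factor as stepping from 0.5 to 0.75, which is why it suits pitch and cutoff, while `linlin` is used for crossfade positions, noise rates and rq.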

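The FluidBufToKr class comes from the brilliant FluCoMa library (s/o @tremblap). It reads the frames of a buffer out as control-rate signals, so whatever writes those 33 values into the buffer drives the whole synth; that's what lets me manipulate all 33 control values from a single XY pad. Super convenient when one of my hands is busy wrangling a guitar or wiping the sweat out of my eyes…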
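The post doesn't spell out how two pad coordinates become 33 parameters; the resources mentioned next cover the actual (model-based) technique. Purely to illustrate the shape of the problem, here is a much simpler stand-in: inverse-distance-weighted interpolation between a few presets placed on the pad. This is a swapped-in technique, not FluCoMa's API and not the method used here; the function name, presets and `power` parameter are all hypothetical.

```python
import math

NUM_PARAMS = 33  # one value per buffer frame read by FluidBufToKr


def idw_map(x, y, presets, power=2.0):
    """Hypothetical 2-D -> 33-D mapping by inverse-distance weighting.

    presets: list of ((px, py), params), each params a list of NUM_PARAMS
    values in 0-1. Returns a weighted blend of all preset vectors.
    """
    weights = []
    for (px, py), params in presets:
        d = math.hypot(x - px, y - py)
        if d == 0.0:
            return list(params)  # exactly on a preset: return it unchanged
        weights.append(1.0 / d ** power)
    total = sum(weights)
    out = [0.0] * NUM_PARAMS
    for w, (_, params) in zip(weights, presets):
        for i, p in enumerate(params):
            out[i] += w / total * p
    return out


if __name__ == "__main__":
    # two hypothetical presets in opposite corners of the pad
    presets = [((0.0, 0.0), [0.0] * NUM_PARAMS), ((1.0, 1.0), [1.0] * NUM_PARAMS)]
    print(idw_map(0.25, 0.25, presets)[:3])
```

Because the output is a weighted average of the presets, it stays inside the 0-1 range the SynthDef's mappings expect; a trained regressor can instead learn arbitrary curved mappings, which is the point of the model-based approach.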

For some insight into this technique, I recommend @tedmoore’s demo of the basic principle and thereafter @Sam_Pluta’s research with parallel models (an approach I’m also using).

See you all next month!
