Hey,

I'm using a Slider2D and a MIDI controller (joystick) to control some synth parameters and record the xy MIDI data to Ableton Live. The data ends up on two MIDI channels, one for x and one for y. I would then adjust the MIDI data in Ableton Live and would like to play it back synchronized to Events in SC. How can I do that?

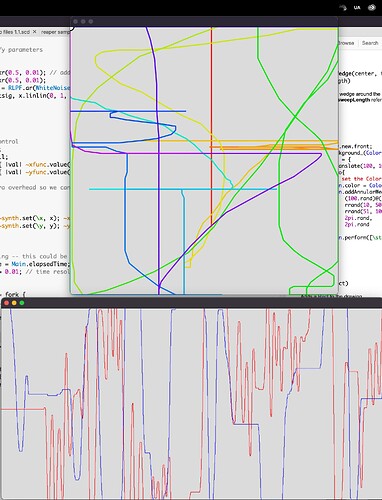

Because the two channels of MIDI data don't really visualize the xy movement the way a Slider2D does, I thought another method would be to store and visualize the Slider2D movement in SC and play it back in SC via a triggered ramp or with Events. But then you can't easily adjust the recorded MIDI data.

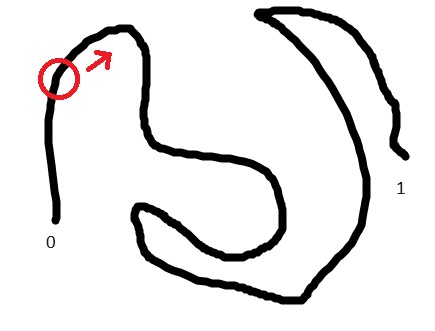

I was imagining something like this for visualisation:

Any thoughts or best practices for this approach?

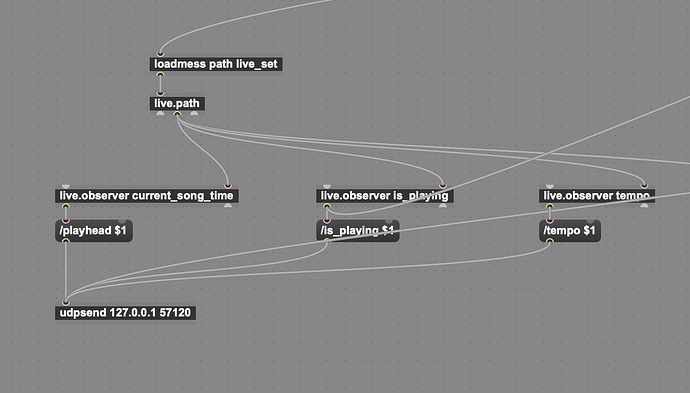

Here is the basic Slider2D MIDI setup:

    (
    x = Environment.make { |self|
        ~bufXY = Buffer.alloc(s, 2);

        ~makeGui = { |self|
            var win, pointView, graphView;
            win = Window("test", Rect(10, 500, 440, 320)).front;
            self.xySlider = Slider2D()
            .background_(Color.white.alpha_(0))
            .action_{ |view|
                self.setLatent(0, view.x, view.y)
            };
            SkipJack({ self.xySlider.setXY(*self.coords.asArray)
            }.defer, 0.01, { self.xySlider.isClosed });
            graphView = StackLayout(self.xySlider, View().layout_(
                VLayout(pointView).margins_(10)
            )).mode_(\stackAll);
            win.layout = HLayout([graphView, stretch: 2]);
        };

        ~setLatent = { |self, n ...coords|
            self.coords = self.coords ?? { Order[] };
            coords.do { |c, k| self.coords[n + k] = c };
            self.bufXY.setn(n, coords);
        };

        ~mapMidi = { |self, ccs|
            self.midiResponders = self.midiResponders ?? { Order[] };
            self.unmapMidi;
            ccs.do { |cc, n|
                self.midiResponders[cc] = MIDIFunc.cc({ |v, c|
                    self.setLatent(n, v / 127)
                }, cc).fix
            }
        };

        ~unmapMidi = { |self| self.midiResponders.do(_.free) };
    }.know_(true);

    x.mapMidi([0, 1]);
    x.makeGui;
    )