Context:

I’m currently working on a synth that takes a set of impulse responses (HRTFs) and applies them to a set of channels for binaural rendering. I could simply use Convolution.ar for this, but that would perform a lot of unnecessary FFTs and IFFTs and is computationally expensive with the number of channels I’m using. Instead, I want to do one FFT per input channel, do the necessary operations in the frequency domain, then do one IFFT per ear.

As far as I understand it, the only way this would be possible is if I use the FFT / PV UGens. The solution that I’m looking at is to:

- Play back each impulse response (size of one frame) and store the frequency response using PV_RecordBuf

- In a separate synth, take the input channel, FFT it, multiply it with the previously saved frequency response (the frequency-domain equivalent of convolution) through PV_BufRd and PV_Mul, sum with the other channels for the respective ear, then IFFT.
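
The reasoning behind "one IFFT per ear" can be written out as follows. The symbols are my own shorthand and not taken from any library: x_c is input channel c, h_{c,e} is the impulse response from channel c to ear e, and capital letters are their DFTs over one frame.

$$
y_e[n] = \sum_{c} \left(x_c * h_{c,e}\right)[n]
\quad\Longleftrightarrow\quad
Y_e[k] = \sum_{c} X_c[k]\, H_{c,e}[k]
$$

Because the inverse transform is linear, the sum over channels can be taken before the IFFT. Note that the product of DFTs corresponds to circular convolution within a frame. It only matches linear convolution if each frame is zero-padded to at least the input block length plus the impulse response length minus one.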

Issue:

In the process of trying out the above solution, I’ve noticed that I can’t get my impulse responses properly stored and played back by PV_RecordBuf and PV_BufRd. The resynthesized output is similar to the original, but it is never sample accurate.

Here is a code snippet that helps illustrate what I’m talking about. This is a modification of the PV_BufRd sample:

(

var sf;

// path to a sound file here

p = Platform.resourceDir +/+ "sounds/a11wlk01.wav";

// the frame size for the analysis - experiment with other sizes (powers of 2)

f = 1024;

// the hop size

h = 0.25;

// window type

w = 0;

// get some info about the file

sf = SoundFile.new( p );

sf.openRead;

sf.close;

// allocate memory to store FFT data to... SimpleNumber.calcPVRecSize(frameSize, hop) will return

// the appropriate number of samples needed for the buffer

y = Buffer.alloc(s, sf.duration.calcPVRecSize(f, h));

// allocate the soundfile you want to analyze

z = Buffer.read(s, p);

)

// this does the analysis and saves it to recBuf (y below)... frees itself when done

(

SynthDef("pvrec", { arg recBuf=1, soundBufnum=2;

var in, chain, bufnum;

bufnum = LocalBuf.new(f);

Line.kr(1, 1, BufDur.kr(soundBufnum), doneAction: 2);

in = PlayBuf.ar(1, soundBufnum, BufRateScale.kr(soundBufnum), loop: 0);

// note the window type and overlaps... this is important for resynth parameters

chain = FFT(bufnum, in, h, w);

chain = PV_RecordBuf(chain, recBuf, 0, 1.0, 0.0, h, w);

// no output ... simply save the analysis to recBuf

}).add;

)

a = Synth("pvrec", [\recBuf, y, \soundBufnum, z]);

// play your analysis back ... see the playback UGens listed above for more examples.

(

SynthDef("pvplay", { arg out=0, recBuf=1;

var in, chain, bufnum;

bufnum = LocalBuf.new(f);

chain = PV_BufRd(bufnum, recBuf, Line.ar(dur:BufDur.ir(z)));

Out.ar(out, IFFT(chain, w).dup);

}).add;

);

// "pvplay" has no \bufnum argument (it uses a LocalBuf), so only \out and \recBuf are set

b = Synth("pvplay", [\out, 0, \recBuf, y]);

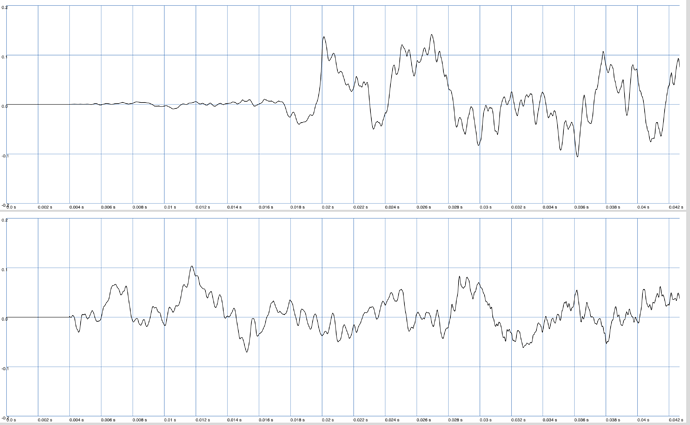

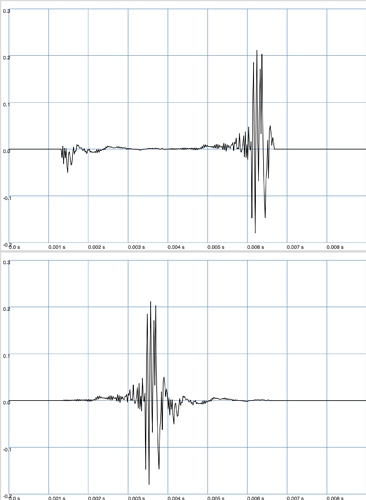

// Plot 2 frames of the signal

// The PlayBuf plot has been delayed to align it with the PV_BufRd playback

// These signals sound the same, but look like their phase is different.

(

{[

IFFT(PV_BufRd(LocalBuf.new(f), y, Line.ar(dur:BufDur.ir(z))), w),

DelayL.ar(PlayBuf.ar(1, z, BufRateScale.kr(z), loop: 0), delaytime:(f * h)-64 * SampleDur.ir)

]

}.plot(2 * f / 48000)

)
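
A note on the delay expression in the block above: sclang evaluates binary operators strictly left to right, so `(f * h)-64 * SampleDur.ir` means "hop size in samples minus 64, then converted to seconds". Here is a minimal language-side sketch of that. The local `sampleDur` is a stand-in I made up for `SampleDur.ir`, assuming a 48 kHz sample rate:

```supercollider
(
// Local stand-ins for the values used above (sampleDur assumes 48 kHz).
var f = 1024, h = 0.25, sampleDur = 1 / 48000;

// As written in the plot code: evaluated left to right by sclang.
var asWritten = (f * h) - 64 * sampleDur;

// The same thing with the grouping spelled out.
var explicit = ((f * h) - 64) * sampleDur;

// What conventional operator precedence would compute instead.
var conventional = (f * h) - (64 * sampleDur);

[asWritten, explicit, conventional].postln;
(asWritten == explicit).postln; // true: both are the same sequence of operations
)
```

So the delay is 192 samples (256 - 64) expressed in seconds, where 64 is the block size I assumed.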

My expectation with this code snippet is that recording the sound file with PV_RecordBuf and playing it back with PV_BufRd should return the original signal. Instead, it looks like the phase has been jumbled in some way. Changing the hop size seems to significantly change how it sounds too. Here is the plot I’m generating with the last code block:

Is there something I’m missing here? Does PV_RecordBuf not handle all of the phase information? Or is there some weird misalignment with the frame size, hop size, window type, etc? I’ve tried lots of combinations and can’t seem to come up with the answer.
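
One check that might help separate these possibilities is a plain FFT to IFFT round trip that bypasses PV_RecordBuf and PV_BufRd entirely, using the same frame size, hop and window. This is only a sketch. It assumes `f`, `h`, `w` and `z` from the snippets above are still defined, a 64-sample block size and a 48 kHz sample rate, and that the FFT/IFFT latency is the frame size minus one block:

```supercollider
(
{
    var src = PlayBuf.ar(1, z, BufRateScale.kr(z), loop: 0);

    // Analysis followed directly by resynthesis, nothing stored in between.
    var roundTrip = IFFT(FFT(LocalBuf(f), src, h, w), w);

    // Original signal delayed by the assumed FFT -> IFFT latency
    // (frame size minus block size, here 1024 - 64 samples).
    var delayed = DelayN.ar(src, 0.2, (f - 64) * SampleDur.ir);

    [roundTrip, delayed]
}.plot(4 * f / 48000)
)
```

If the two traces already differ here, the discrepancy would come from the window and hop settings rather than from the record and read UGens. If they match, the difference is more likely introduced by PV_RecordBuf or PV_BufRd.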

Any help with the specific PV_RecordBuf / PV_BufRd issue or an alternate solution to the binaural decoder would be greatly appreciated.