One of my conclusions from a previous thread (SuperCollider on Linux) is that on my machine, scsynth suffers Jack xruns at much lower CPU loads than other Jack clients. My machine is an Intel NUC running Ubuntu, properly configured for audio work.

To test this, I modified a simple Jack command-line client so that its load can be increased by an amount given as a command-line argument. You can find the source code here.
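The linked source is the reference for the actual client. Purely as an illustration of the idea, a load knob of this kind might look like the C sketch below. The names `parse_load` and `extra_load` are hypothetical and not taken from the real client, and the arithmetic inside the loop is arbitrary busy work. In a real Jack client, something like `extra_load` would presumably be called once per period from the process callback.

```c
#include <stdlib.h>

/* Hypothetical helper: turn the command-line argument into an iteration
 * count. Returns `fallback` if the argument is missing, not a plain
 * decimal number, or negative. */
long parse_load(const char *arg, long fallback)
{
    char *end = NULL;
    long value;

    if (arg == NULL)
        return fallback;
    value = strtol(arg, &end, 10);
    if (end == arg || *end != '\0' || value < 0)
        return fallback;
    return value;
}

/* Hypothetical helper: burn CPU time in proportion to `iterations`.
 * `volatile` keeps the compiler from optimizing the loop away. */
float extra_load(long iterations)
{
    volatile float acc = 0.0f;
    long i;

    for (i = 0; i < iterations; i++)
        acc = acc * 0.5f + (float)(i & 7);
    return acc;
}
```

With this sketch, a larger argument simply means more iterations per audio block, which is what pushes the CPU load up.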

The phenomenon I observe is that for any given Jack block size, scsynth starts to show xruns at considerably lower CPU load values (in htop) than the test client under otherwise identical conditions.

For instance, I can run the test client at a CPU load of 85% and get no xruns for a minute. With scsynth I can only go up to 25%.

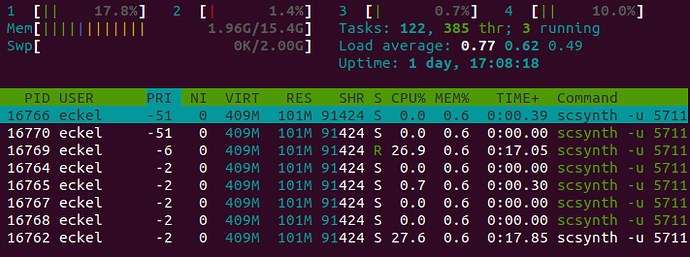

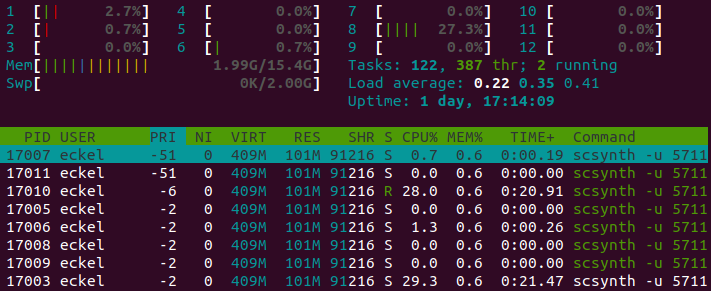

Here is my test in detail, running with an external USB audio interface at 44.1 kHz with Jack settings of 256 frames/period and 2 periods/buffer:

% extra-load-jack-client 17000 : 85%

scsynth, running the code below from scide : 25%

(test code suggested by @jamshark70)

    (
    Server.default.waitForBoot({
        SynthDef(\sin10, { |out = 0|
            Out.ar(out,
                (SinOsc.ar(
                    NamedControl.kr(\freq, Array.fill(10, 440))
                ).sum() * 0.0005).dup()
            )
        }).add();
        Server.default.sync();
        n = Array.fill(400, {
            Synth.new(\sin10, [freq: Array.fill(10, { exprand(200, 800) })])
        });
    });
    )

    n.do(_.free);
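To put the Jack settings used in this test into time units: 256 frames at 44.1 kHz is about 5.8 ms per period, and 2 periods/buffer is about 11.6 ms of buffering. Roughly speaking, all clients in the Jack graph have to finish their processing within one period, otherwise an xrun is reported. A minimal sketch of that arithmetic follows; the function names are hypothetical.

```c
/* Duration of one Jack period in microseconds. */
double period_us(unsigned frames_per_period, unsigned sample_rate_hz)
{
    return 1e6 * (double)frames_per_period / (double)sample_rate_hz;
}

/* Nominal buffer length in microseconds:
 * periods per buffer times the duration of one period. */
double buffer_us(unsigned frames_per_period, unsigned periods,
                 unsigned sample_rate_hz)
{
    return (double)periods * period_us(frames_per_period, sample_rate_hz);
}
```

For other settings, plug in your own values; `period_us(256, 44100)` evaluates to roughly 5805 microseconds.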

I would be very interested to know how this test works out on other machines. The particular configuration of the machine or of Jack doesn’t matter for the test. The only thing of interest is whether scsynth also underperforms compared to other Jack clients (I previously used Pd for comparison as well).

So the question is how much difference there is in CPU load between scsynth and other Jack clients at the highest load each can sustain without xruns for a certain time interval, under otherwise identical conditions.

For your test you would adjust the argument to extra-load-jack-client (17000 in my case) and the number of synths in SC (400 in my case).