I am trying to find a way to determine (or, more realistically, estimate) whether a buffer holds monophonic or polyphonic material. I am not trying to extract the fundamentals; I only need to tell whether the buffer holds a single note (monophonic) or several notes (polyphonic). I have looked at some of the Fluid UGens, such as FluidBufChroma, but I am not sure how to use the information the analysis produces to solve this.

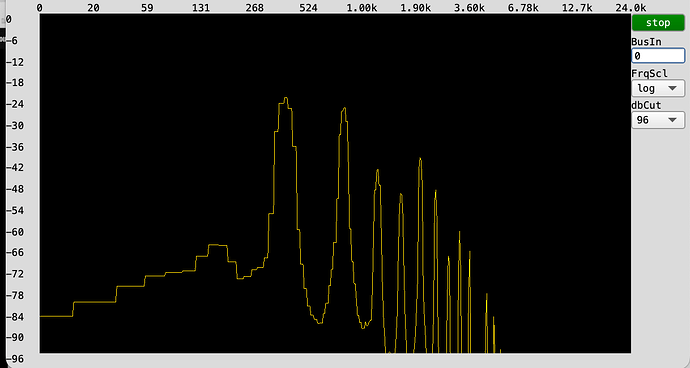

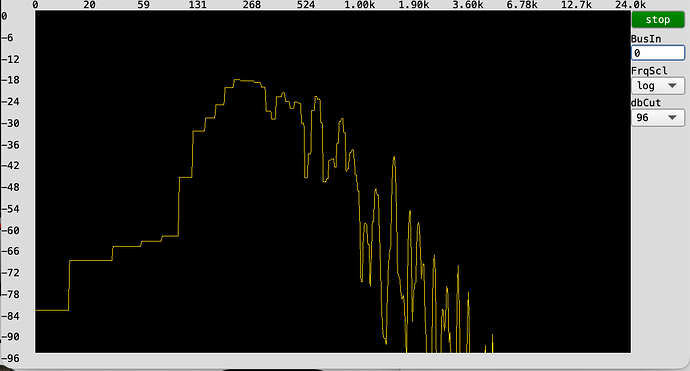

I need this to work for electric guitar signals, which are very harmonic, with only a little inharmonic content during the attack phase. Here I am stacking sine waves to emulate the sound of the guitar, although in reality the partials' amplitudes decay differently than in this abstraction.

How could I go about this?

// Create two buffers for testing - a is monophonic - b is polyphonic

(

a = Buffer.alloc(s, 0.5 * s.sampleRate);

b = Buffer.alloc(s, 0.5 * s.sampleRate);

{

var chord = [57, 61, 64]; // A major

var env = Env.perc(0.2, 0.3).ar;

var tone = {|midinote| 8.collect{|i| SinOsc.ar(midinote.midicps * (i + 1), 0, 0.1 * 1/(i+1)) }.sum };

RecordBuf.ar([chord[0]].collect(tone.(_)).sum * env, a, loop: 0, doneAction: 2);

RecordBuf.ar(chord.collect(tone.(_)).sum * env, b, loop: 0);

0 // silent output: this synth only records into the two buffers

}.play

)

// inspect

a.play // the note A

b.play // the chord A major

{ [PlayBuf.ar(1, a), PlayBuf.ar(1, b) ] }.plot(0.5)

// how to determine which one is polyphonic and which is monophonic?
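
One possible starting point, sketched here as a rough heuristic rather than a proven method: take a spectrum of the buffer, pick the strong peaks, and measure how much of their energy sits on integer multiples of the lowest strong peak. A single harmonic note should score close to 1, while a chord should score noticeably lower because the other notes' partials fall between those multiples. This sketch uses only the core `Signal.fft` in the language rather than the Fluid UGens. The name `~harmonicRatio`, the FFT size, the 20 dB peak threshold and the tolerance are all assumptions made for illustration, and it assumes a mono buffer on a local server.

```supercollider
(
// Hypothetical helper, not part of SuperCollider or FluCoMa.
// Calls action with the share (0..1) of spectral-peak energy that lies on
// integer multiples of the lowest strong peak. Assumes a mono buffer.
~harmonicRatio = { |buf, action, fftSize = 16384, tolerance = 0.05|
    buf.loadToFloatArray(action: { |samples|
        var sr = buf.sampleRate ? buf.server.sampleRate;
        var binHz = sr / fftSize;
        // truncate or zero-pad to fftSize, then apply a Hann window
        var sig = samples.as(Array).extend(fftSize, 0.0).as(Signal)
            * Signal.hanningWindow(fftSize);
        var spectrum = sig.fft(Signal.newClear(fftSize), Signal.fftCosTable(fftSize));
        var mags = spectrum.magnitude.keep(fftSize.div(2));
        var thresh = mags.maxItem * 0.1; // ignore peaks more than 20 dB below the strongest
        var peaks = List.new;
        var f0, total, onHarmonic;

        // collect local maxima as [frequency, energy] pairs
        (1 .. mags.size - 2).do { |i|
            var left = mags[i - 1], centre = mags[i], right = mags[i + 1];
            if((centre > thresh) and: { centre > left } and: { centre >= right }) {
                // parabolic interpolation refines the peak position between bins
                var offset = 0.5 * (left - right) / (left - (2 * centre) + right);
                peaks.add([(i + offset) * binHz, centre.squared]);
            };
        };

        if(peaks.isEmpty) {
            action.value(nil); // silent buffer: nothing to measure
        } {
            f0 = peaks.first[0]; // assumption: the lowest strong peak is the fundamental
            total = peaks.sum { |p| p[1] };
            onHarmonic = peaks.select { |p|
                var ratio = p[0] / f0;
                (ratio - ratio.round).abs < tolerance
            }.sum { |p| p[1] };
            action.value(onHarmonic / total);
        };
    });
};
)

// usage: compare the two test buffers
~harmonicRatio.(a, { |r| "a (single note): %".format(r).postln });
~harmonicRatio.(b, { |r| "b (chord): %".format(r).postln });
```

Where to draw the line between "mono" and "poly" would have to be tuned against real guitar recordings. The measure is likely to be fooled by intervals whose partials line up with the lowest note's harmonics (octaves, and to a lesser extent fifths), and by notes whose fundamental is weaker than the peak threshold.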