hmmm… maybe IOhannes has some more ideas

I still think it would be helpful to see the Pd test that @eckel is running.

There is a suggestion in this thread that SuperCollider is performing worse than something in Pd, but it hasn’t been established that these two scenarios are comparable.

I just tried to set up two scenarios that I know to be comparable.

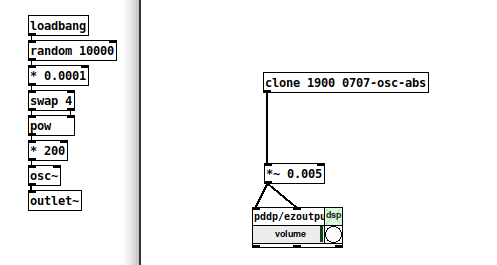

Pd:

On my machine, with the performance governor, and the built-in sound card at 256 samples:

- 4000 sines = 70% CPU = quite glitchy.

- 3500 sines = 62% CPU = mostly OK but still some occasional glitches in the audio.

Also, with 4000 sines, JACK’s xrun count does not increase with audible glitches. This makes sense, since Pd’s audio callback is not calculating the audio.

This means, among other things, that it may not be meaningful to say “Pd can go up to 93% CPU without xrun-ning.” Because of Pd’s design, it seems to me you’d get audio glitches before you get xruns (and that’s what happened on my machine).

SC:

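    (
    // 10 sine oscillators per synth, summed, scaled, and copied to two channels
    SynthDef(\sin10, { |out|
        Out.ar(out,
            (SinOsc.ar(
                NamedControl.kr(\freq, Array.fill(10, 440))
            ).sum * 0.005).dup
        )
    }).add;
    )

    // 400 synths x 10 oscillators = 4000 sines, random frequencies between 200 and 800 Hz
    n = Array.fill(400, { Synth(\sin10, [freq: Array.fill(10, { exprand(200, 800) })]) });

    // free all synths when done
    n.do(_.free);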

4000 sines in SC clocks in at about 45% CPU. It glitches occasionally – I think slightly less often than Pd, but it’s hard to quantify that. In terms of audio glitches, they are basically neck and neck.

So my result is: on an Ubuntu-Studio-tuned system, with benchmarking cases that are designed to be as close to identical as possible, I can run roughly the same amount of DSP in both Pd and SC. The CPU numbers are reported differently, but the only thing that really matters is how much DSP I can get away with.

I think we have not ruled out the possibility that the initial results posted here were from an invalid test design.

hjh

That’s certainly true! Jack xruns are not the only relevant measurement here.

IMO, the more interesting - and disturbing - issue is that running the Server from the IDE would somehow significantly degrade performance, at least for @eckel. Maybe it would be good to create a new, separate question/issue for that.

I wonder what would happen if one starts the server from sclang in the terminal and then communicates with it from a separate IDE session. If there is a difference, perhaps we could simply circumvent the problem for now by just starting things differently?

Thank you for providing your test code. I can run the 4000 sines without xruns if I use 256 frames/period and 2 periods/buffer. The CPU load is then 25%. If I run it with 128 frames/period I get xruns synchronised with the update of the CPU load in the IDE (every 0.7 seconds).
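
To put the two period sizes in perspective, here is a rough sketch of how much wall-clock time a JACK client has to deliver each period. The 48000 Hz sample rate is an assumption (it is not stated for this test), and `period_ms` is just an illustrative helper name.

```shell
#!/bin/sh
# period_ms FRAMES [RATE]
# Prints the duration of one JACK period in milliseconds.
# RATE defaults to 48000 Hz, which is an assumption, not a value from the test above.
period_ms() {
    frames=$1
    rate=${2:-48000}
    awk -v f="$frames" -v r="$rate" 'BEGIN { printf "%.2f\n", f * 1000 / r }'
}

period_ms 256   # time budget per period at 256 frames
period_ms 128   # half that budget at 128 frames
```

Under that assumption, the client has under 3 ms per callback at 128 frames, so any brief stall takes a much larger share of the budget than it does at 256 frames.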

I think this is a good idea. I will prepare that.

Out of curiosity: do the active SC developers read this forum? I think we should try to get their attention. Maybe taking a closer look at the code in SC_Jack.cpp could help? Is there anybody out there who is experienced with Jack programming? I am unfortunately not.

I haven’t tried that yet but from what I have experienced so far, any windowed application can trigger the xruns (e.g. when resizing the window), the IDE just being one among them. When I was running sclang and scsynth headless, I also noticed xruns when the screen dimmed a while after I had logged out and had logged on in the meantime from another machine via ssh.

For me the main question is why my Jack test client can run at a much higher load without creating xruns triggered by windowed applications as compared to scsynth.

Right, so the fact that running things from sclang in the terminal yielded a different result was not because the IDE is doing something particular or starting the server differently, but just because it is updating a GUI window at all (you suspect it has something to do with the CPU load numbers?). Have I understood you correctly?

How about this… run sclang from the command line (no IDE), boot the server with alive thread, and run the same 4000-synth test.

So then the server is processing /status messages from sclang, but there are no Qt view updates associated with it.

If it still glitches, then /status is the problem. (I don’t expect this.)

If it doesn’t glitch, then the GUI update is the problem. (I lean toward this explanation.)

hjh

Yes, in certain threshold settings, the update of the CPU load numbers in the IDE is synced to the xruns. I also double-checked this by disabling these updates in the source code and recompiling the IDE.

In the meantime I also ran the example by @jamshark70 with 12000 sines without xruns, using a block size of 2048. This generated a load of 75%, exactly three times the load I get for 4000 sines, which run xrun-free at a block size of 256. The xruns that were synced with the CPU load update occurred when using a block size of 128 in this case.

For me the question is why scsynth needs so much more processing headroom in order to avoid being late for Jack than other Jack clients.

Yes, this is what happens. You had the good intuition!

Yes! My earlier reply came out of sequence to your question.

but the newer machine was much more prone to hitting xruns.

I had exactly the same experience, even down to the dates: T440 vs. T490. The latter is a performance disaster in so many respects.

First time posting here.

As this thread pops up very high when searching for “xrun supercollider” on Google, I would like to add my 5 cents. I have had a lot of xrun issues on my Linux Mint 20.2 Cinnamon system running SC and jackd, but have fixed them well enough for my needs. It took a long time to pinpoint the issues, so hopefully this is of some help to someone else.

As stated above, rtcqs (pronounced “arteeseeks”, a Python port of the realtimeconfigquickscan project, hosted on Codeberg.org) is a really good tool to run to see what might be causing issues.

For me, switching my CPU scaling governor (also mentioned above) from “powersave” to “performance” using cpufreq-set made a big impact. You can check the current settings using

    cat /sys/devices/system/cpu/cpu*/cpufreq/scaling_governor

The tool cpufreq-set comes with cpufrequtils:

    sudo apt-get install cpufrequtils

and then

    sudo cpufreq-set --cpu 0 -g performance

There is a -r (“related”) flag which is supposed to set all cores at once, but I did not get it to work, so I have to do all cores by hand (0-3 in my case).
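
Setting each core by hand can be wrapped in a loop. The sketch below is one way to do it, assuming `cpufreq-set` from cpufrequtils is installed and that the cores are numbered 0 to N-1. `governor_cmds` is a hypothetical helper name, and it only prints the commands so they can be reviewed before anything is changed.

```shell
#!/bin/sh
# governor_cmds NCPUS [GOVERNOR]
# Prints one cpufreq-set command per core (0 .. NCPUS-1).
# Nothing is executed here; pipe the output to `sh` to apply it.
governor_cmds() {
    ncpus=$1
    gov=${2:-performance}
    cpu=0
    while [ "$cpu" -lt "$ncpus" ]; do
        echo "sudo cpufreq-set --cpu $cpu -g $gov"
        cpu=$((cpu + 1))
    done
}

governor_cmds 4   # cores 0-3, as on the machine described here
```

Running `governor_cmds 4 | sh` should then apply the setting to cores 0-3. Re-running the `cat` on `scaling_governor` afterwards is a quick way to confirm that every core reports “performance”.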

I also start jackd with -P 95 (which sets the realtime priority) and give the ALSA backend a somewhat larger period, -p 2048. This makes for higher latency, but that is OK for my current usage.

These are my settings for starting jackd:

    /usr/bin/jackd -P 95 -d alsa -r 48000 -p 2048 -n 2

-r defaults to 48000 so I think you can probably omit that.
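
For a sense of how much latency those settings add: the nominal buffering delay is roughly frames per period times the number of periods, divided by the sample rate. A small sketch of that arithmetic follows. `jack_latency_ms` is an illustrative name, and the figure covers only the JACK buffer, not any additional latency in the hardware or driver.

```shell
#!/bin/sh
# jack_latency_ms FRAMES PERIODS RATE
# Nominal buffering latency in milliseconds: FRAMES * PERIODS / RATE.
jack_latency_ms() {
    awk -v f="$1" -v n="$2" -v r="$3" 'BEGIN { printf "%.1f\n", f * n * 1000 / r }'
}

jack_latency_ms 2048 2 48000   # the settings above (-p 2048 -n 2 -r 48000)
jack_latency_ms 256 2 48000    # a 256-frame period, for comparison
```

So the larger period costs roughly 85 ms of buffering versus about 11 ms at 256 frames, which is the trade-off accepted here in exchange for fewer xruns.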

I was running a piece that was xrunning on me constantly before, and I can now run it for an hour+ without issues.

Btw my kernel is 5.4.0-91-generic and the cpu: Intel© Core™ i7-7567U CPU @ 3.50GHz × 2