I appreciate the thorough approach above, but perhaps that’s for a different thread?

This thread is about a very specific case: producing a running average. Not a moving average (for which SC already has the incorrectly named RunningSum; divide its output by the window size and you get a moving average), but a true running average, which keeps accumulating indefinitely.
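To make the distinction concrete, here is a small C++ sketch (not SC code; `movingAverage` and `runningAverage` are hypothetical names). The first divides a windowed sum by the window size, which is the "RunningSum divided by window size" idea. The second averages every sample seen so far. Treating samples before the start of the signal as 0 is my assumption for the sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Moving average: sum of the most recent `window` samples divided by `window`.
// Assumption for this sketch: samples before the start of the signal count as 0.
std::vector<double> movingAverage(const std::vector<double>& x, std::size_t window) {
    assert(window > 0);
    std::vector<double> out;
    out.reserve(x.size());
    double sum = 0.0;
    for (std::size_t n = 0; n < x.size(); ++n) {
        sum += x[n];
        if (n >= window) sum -= x[n - window];  // drop the sample leaving the window
        out.push_back(sum / static_cast<double>(window));
    }
    return out;
}

// Running average: mean of every sample since the start; nothing is ever forgotten.
std::vector<double> runningAverage(const std::vector<double>& x) {
    std::vector<double> out;
    out.reserve(x.size());
    double sum = 0.0;
    for (std::size_t n = 0; n < x.size(); ++n) {
        sum += x[n];
        out.push_back(sum / static_cast<double>(n + 1));
    }
    return out;
}
```

With input 4, 4, 0, 0 and a window of 2, the moving average drops to 0 once the 4s leave the window, while the running average only drifts down to 2.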

I was using FOS to try to achieve this, because a running average can be implemented as a first-order recursive filter:

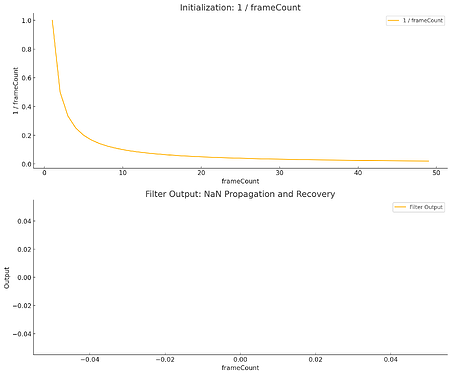

y(n) = (1/n) * x(n) + (1 - 1/n) * y(n-1), where n counts samples starting from 1

That is, a0 = 1/n, a1 = 0, b1 = 1 - (1/n).
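As a quick sanity check of that recursion outside SC, here is a C++ sketch (function names are hypothetical) that runs y(n) = a0 * x(n) + b1 * y(n-1) with a0 = 1/n and b1 = 1 - 1/n, next to a plain arithmetic mean for comparison. It uses the literal difference equation, not FOS's internal structure.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Running average as the first-order recursion
//   y(n) = a0 * x(n) + b1 * y(n-1),  a0 = 1/n, b1 = 1 - 1/n  (a1 = 0),
// with n counting samples from 1.
std::vector<double> recursiveRunningAverage(const std::vector<double>& x) {
    std::vector<double> y;
    y.reserve(x.size());
    double prev = 0.0;  // y(n-1); b1 is 0 at n = 1, so this initial value drops out
    for (std::size_t i = 0; i < x.size(); ++i) {
        const double a0 = 1.0 / static_cast<double>(i + 1);
        const double b1 = 1.0 - a0;
        prev = a0 * x[i] + b1 * prev;
        y.push_back(prev);
    }
    return y;
}

// Reference: plain arithmetic mean of the first `count` samples.
double meanOfFirst(const std::vector<double>& x, std::size_t count) {
    assert(count > 0 && count <= x.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < count; ++i) sum += x[i];
    return sum / static_cast<double>(count);
}

// Floating-point comparison with a small absolute tolerance.
bool approxEqual(double a, double b, double tol = 1e-9) {
    return std::fabs(a - b) <= tol;
}
```

Feeding it 2, 4, 6, 8 gives 2, 3, 4, 5 (up to rounding), the mean of the samples so far at every step.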

The answer to the title question has two parts:

First, 1/0 and 0.reciprocal return different results in the server (while returning the same result in the language):

```
[0.reciprocal, 1/0];
// -> [inf, inf]
```

```
s.boot;

(
f = { |sig|
    {
        var trig = Impulse.ar(0);
        Poll.ar(trig, sig.value);
        Silent.ar(1)
    }.play;
};

f.({ var x = DC.ar(0); [x.reciprocal, 1/x] });
)
```

Post window:

```
UGen(UnaryOpUGen): -nan
UGen(BinaryOpUGen): inf
```

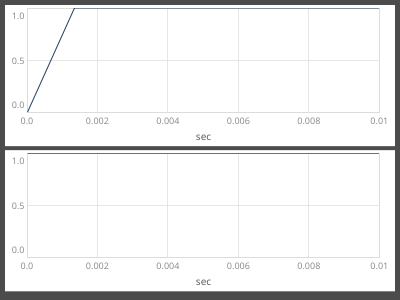

Second, that 0.reciprocal should never be entering FOS at all. The first sample, y(0), of Sweep.ar(trig, SampleRate.ir) is 1.0, so the zero can only be entering FOS in the Ctor. In my opinion, this is a remaining bit of sloppiness in the SC plug-in interfaces: the Ctor “pre-sample” is currently either y(0) or y(-1), at the whim of each plug-in, and the plug-in of course has no idea which value is expected downstream. The only way to have a consistent expectation downstream is for that initial value to be produced consistently.

The NaNs here are not FOS’s fault. They’re Sweep’s fault.
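Why one bad pre-sample is enough: here is a C++ model, assuming (per the description above) that the zero reaches the filter only once, on the Ctor pre-sample, and that the state update runs on that pre-sample. `serverLikeReciprocal` and `runningAverageFromCounts` are hypothetical names; returning NaN for 0 only mimics the `-nan` printed by the Poll test, it is not the server's code. Since NaN times anything is NaN, a single bad coefficient lands in the feedback state and never leaves, even after the coefficients become finite.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for the server's reciprocal of 0, which the Poll test
// printed as -nan. Nonzero inputs get an ordinary reciprocal.
double serverLikeReciprocal(double x) {
    return x == 0.0 ? std::numeric_limits<double>::quiet_NaN() : 1.0 / x;
}

// Running-average recursion driven by a sample counter, as with a0 = n.reciprocal.
// counts[i] plays the role of Sweep's output: a leading 0 models the Ctor
// "pre-sample", followed by 1, 2, 3, ...
std::vector<double> runningAverageFromCounts(const std::vector<double>& in,
                                             const std::vector<double>& counts) {
    assert(in.size() == counts.size());
    std::vector<double> out;
    double state = 0.0;  // y(n-1), carried from sample to sample
    for (std::size_t i = 0; i < in.size(); ++i) {
        const double a0 = serverLikeReciprocal(counts[i]);
        const double b1 = 1.0 - a0;
        state = a0 * in[i] + b1 * state;  // once NaN, the state stays NaN
        out.push_back(state);
    }
    return out;
}

// True if every output sample is an ordinary finite number.
bool allFinite(const std::vector<double>& v) {
    for (double s : v)
        if (!std::isfinite(s)) return false;
    return true;
}
```

Driving it with counts 0, 1, 2, 3 (the pre-sample followed by the real count) gives NaN on every sample; starting the counts at 1 gives finite output.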

THEN… having resolved that by forcing audio-rate coefficients, which causes the 0.reciprocal to be discarded before it affects FOS’s results, I found that FOS’s behavior does not match the documented “equivalent” formula.

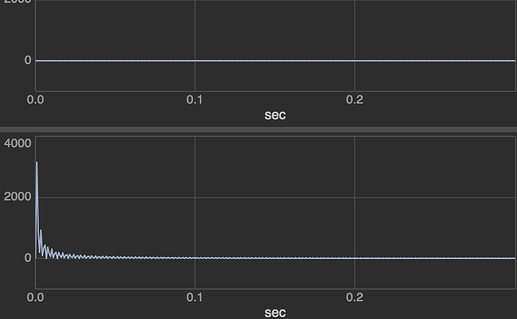

I guess the UGen is trying to use a DF-II or TDF-II structure (Direct Form II or its transposed variant) to save one delay register, but the formula must be written incorrectly.

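```cpp
double y0 = in + b1 * y1;      // new internal state: current input plus fed-back state
ZOUT0(0) = a0 * y0 + a1 * y1;  // output sample: feed-forward taps applied to the state
y1 = y0;                       // y1 keeps the internal state, not the previous output
```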
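To test the suspicion in isolation, here is a C++ sketch that lifts the three quoted lines into a standalone struct and runs it next to the documented formula, which I'm assuming reads out(i) = (a0 * in(i)) + (a1 * in(i-1)) + (b1 * out(i-1)) as in the FOS help file. The struct names are hypothetical. With fixed coefficients the two outputs agree to within rounding error, which is what a Direct Form II realization of the same first-order section should do.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// The three quoted lines, lifted into a standalone struct (hypothetical name,
// not the plug-in's class). y1 is the internal state w(n-1), not out(n-1).
struct FosLikeStep {
    double y1 = 0.0;
    double step(double in, double a0, double a1, double b1) {
        const double y0 = in + b1 * y1;        // w(n) = in(n) + b1 * w(n-1)
        const double out = a0 * y0 + a1 * y1;  // out(n) = a0 * w(n) + a1 * w(n-1)
        y1 = y0;
        return out;
    }
};

// The documented difference equation, taken literally:
//   out(n) = a0 * in(n) + a1 * in(n-1) + b1 * out(n-1)
struct DocumentedFormula {
    double inPrev = 0.0;   // in(n-1)
    double outPrev = 0.0;  // out(n-1)
    double step(double in, double a0, double a1, double b1) {
        const double out = a0 * in + a1 * inPrev + b1 * outPrev;
        inPrev = in;
        outPrev = out;
        return out;
    }
};

// Largest absolute difference between two equally long signals.
double maxAbsDifference(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size());
    double worst = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i)
        worst = std::fmax(worst, std::fabs(a[i] - b[i]));
    return worst;
}
```

Stepping both with, say, a0 = 0.3, a1 = 0.2, b1 = 0.5 on any input gives matching outputs, so the disagreement only shows up once the coefficients change from sample to sample.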

a0 * y0 + (a1 * y1) expands to (a0 * in) + (a0 * b1 * y1) + (a1 * y1), which reduces to (a0 * in) + ((a0 * b1 + a1) * y1). Now here, I’ll admit that my math isn’t good enough.

But in the specific running-average case, the first a0 = 1 and the first b1 = 0. So on the first cycle, y0 = in(0) and output = 1 * y0 + 0 * 0 = in(0), and the second cycle starts with y1 = in(0). Then the new a0 = 0.5 and the new b1 = 0.5, so the new y0 = in(1) + 0.5 * in(0), and the new output = 0.5 * (in(1) + 0.5 * in(0)) = 0.5 * in(1) + 0.25 * in(0). The feedback coefficient here is really a0 * b1 = 0.25, NOT 0.5 as supplied.
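The same comparison with the running-average coefficients changing every sample (a0 = 1/n, a1 = 0, b1 = 1 - 1/n), as in the case worked through above. Function names are hypothetical, and the FOS-style version is my transcription of the quoted loop body, not the plug-in itself.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// FOS-style update from the quoted source, with per-sample running-average
// coefficients. The state y1 is w(n-1); the current a0 scales both the new
// input and the fed-back state.
std::vector<double> fosStyleRunningAverage(const std::vector<double>& in) {
    std::vector<double> out;
    double y1 = 0.0;
    for (std::size_t i = 0; i < in.size(); ++i) {
        const double a0 = 1.0 / static_cast<double>(i + 1);
        const double b1 = 1.0 - a0;
        const double y0 = in[i] + b1 * y1;
        out.push_back(a0 * y0);  // a1 = 0, so the a1 * y1 term drops out
        y1 = y0;
    }
    return out;
}

// Documented formula with the same coefficients: out(n) = a0 * in(n) + b1 * out(n-1).
std::vector<double> documentedRunningAverage(const std::vector<double>& in) {
    std::vector<double> out;
    double outPrev = 0.0;
    for (std::size_t i = 0; i < in.size(); ++i) {
        const double a0 = 1.0 / static_cast<double>(i + 1);
        const double b1 = 1.0 - a0;
        outPrev = a0 * in[i] + b1 * outPrev;
        out.push_back(outPrev);
    }
    return out;
}
```

Feeding in(0) = 1, in(1) = 0 reproduces the numbers above: the documented formula gives 0.5 on the second sample, the FOS-style update gives 0.25. A constant input of 1, which should average to 1, comes out of the FOS-style update as 0.75 on the second sample.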

This can’t be right.

So I’d like to suggest that the purpose of this thread be to identify what is wrong with the formula that is causing this non-equivalent behavior. General filter safety is a different problem.

hjh