Recently I’ve been reading a lot of critiques of the Industrial Revolution. I’d previously been a bit dismissive of these ideas, thinking they were a forlorn attempt to halt industrialisation. I now realise they are more subtle than that. This was all prompted by a visit to a visual arts exhibition that showed the influence of the Industrial Revolution on the fine arts. Two figures I am researching in particular are John Ruskin and Frank Lloyd Wright, both of whom advocated drawing on nature in architectural design and art. Frank Lloyd Wright called this ‘organic architecture’, long before Whole Foods embraced the term to sell mangoes. Even though at a glance his architecture might look quite angular, his approach was more subtle.

This preamble has a point. I’m not simply treating this as a technical problem but as a method of exploration. The critique that has really struck me concerns straight lines, which rarely if ever appear in nature (light, I suppose, is an exception). This made me realise that straight lines are everywhere in electronic sound. Even an LFO only oscillates around a straight line, and detuned oscillators are essentially straight lines running in parallel.

What strikes me is the question of why we do this. A string quartet wouldn’t dream of playing music this way. The fact that percussionists often have to play like this is not a strength of percussion. It seems to be built into our engineering practice because it is simpler and closer to how we think computers work.

I am keen to carry out some experiments. I thought I would put the question to this community, as others may have thought, or be thinking, along similar lines; likewise, there may be critiques of my critique.

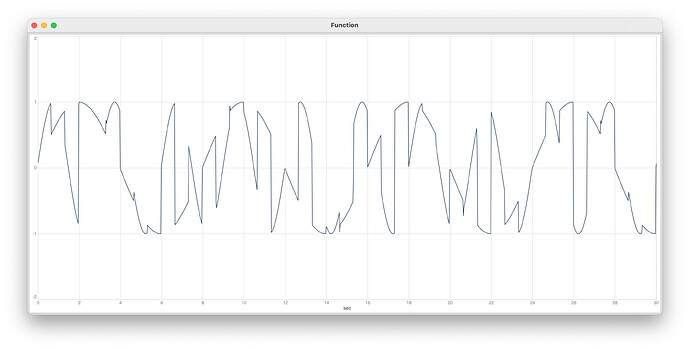

My question is: where should I start in working with curved lines that do not repeat in periodic cycles? I am immediately drawn to Perlin noise, for which there is a UGen. I also wonder whether some form of randomisation applied to an Env might work. If people are interested, I will try to post some results here. Please share any ideas or thoughts you have on this subject.
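As a language-neutral starting point, here is a minimal Python sketch of 1-D gradient noise, the idea behind Perlin noise, used to produce a smooth control curve that does not repeat anywhere along its length. It is not the UGen mentioned above; the function names (`make_gradients`, `perlin_1d`, `noise_curve`) and the default of 16 lattice points are illustrative assumptions. The resulting curve could, for example, be scaled and used as a slow detune or amplitude contour in place of an LFO.

```python
import math
import random


def make_gradients(num_points, seed=None):
    """One random gradient (slope) per integer lattice point, drawn from [-1, 1]."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(num_points)]


def fade(t):
    """Perlin's quintic ease curve 6t^5 - 15t^4 + 10t^3 (flat at t = 0 and t = 1)."""
    return t * t * t * (t * (t * 6.0 - 15.0) + 10.0)


def perlin_1d(x, gradients):
    """1-D gradient ("Perlin-style") noise at position x.

    x must lie in [0, len(gradients) - 1]. The result is 0 at every integer x and
    stays within [-0.5, 0.5] because the gradients are drawn from [-1, 1].
    """
    # Clamp so that x exactly at the last lattice point still has a right neighbour.
    i0 = min(int(math.floor(x)), len(gradients) - 2)
    t = x - i0                            # position between the two lattice points, 0..1
    n0 = gradients[i0] * t                # ramp rising from the left lattice point
    n1 = gradients[i0 + 1] * (t - 1.0)    # ramp arriving at the right lattice point
    return n0 + (n1 - n0) * fade(t)       # smooth blend between the two ramps


def noise_curve(num_samples, num_points=16, seed=None):
    """Sample one smooth, non-periodic control curve spanning the whole lattice."""
    gradients = make_gradients(num_points, seed)
    span = num_points - 1
    return [perlin_1d(span * k / (num_samples - 1), gradients) for k in range(num_samples)]


if __name__ == "__main__":
    curve = noise_curve(200, num_points=16, seed=1)
    print(min(curve), max(curve))
```

Unlike an LFO, this curve has no period: every lattice point gets its own random slope, and the quintic fade keeps the joins between segments smooth. Summing a few copies at doubled rates and halved amplitudes (octaves) would add finer detail on top of the slow movement.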