In the project I’m currently working on, I will have to move some sound sources in a multi-channel setup of about 40 speakers arranged more or less evenly along the walls of a corridor about ten meters long.

Some 20 speakers will therefore be arranged on the left wall and the same number along the right wall. The speakers will be positioned at different heights between 50 and 180 cm.

I think this setup could be called a shoebox setup (or at least an irregular array of speakers).

I need a decoder/panner which, given the x, y and z position of the sound source, sends an appropriately level-compensated audio signal to each of the speakers.
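To make the requirement concrete, here is a minimal sketch of the kind of mapping I mean, written in Python only for illustration. It assumes a simple distance-based gain law (similar in spirit to distance-based amplitude panning, DBAP), which is just one possible approach and not necessarily the right one. The function name `distance_gains`, the `rolloff_db` and `blur` parameters, and the speaker coordinates are all made up for the example.

```python
import math

def distance_gains(source, speakers, rolloff_db=6.0, blur=0.2):
    """Return one gain per speaker for a source at (x, y, z), in meters."""
    # Exponent chosen so the level drops by rolloff_db per doubling of distance.
    a = rolloff_db / (20.0 * math.log10(2.0))
    # The blur term keeps the distance non-zero when the source sits on a speaker.
    dists = [
        math.sqrt(sum((s - p) ** 2 for s, p in zip(source, spk)) + blur ** 2)
        for spk in speakers
    ]
    raw = [1.0 / d ** a for d in dists]
    # Normalize so the total power (sum of squared gains) stays constant.
    norm = math.sqrt(sum(g * g for g in raw))
    return [g / norm for g in raw]

# Hypothetical layout: 5 + 5 speakers on the two walls of a 10 m x 2 m corridor,
# at heights between 0.5 m and 1.7 m (x along the corridor, y across, z up).
left = [(x, 0.0, 0.5 + 0.3 * i) for i, x in enumerate([1, 3, 5, 7, 9])]
right = [(x, 2.0, 1.7 - 0.3 * i) for i, x in enumerate([1, 3, 5, 7, 9])]
speakers = left + right

gains = distance_gains((3.0, 0.5, 1.2), speakers)
```

Each gain would then scale the mono source before it goes to the corresponding output channel. Since nothing here depends on a listener position or on a regular layout, something along these lines seems closer to what I need than a sweet-spot-based decoder.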

Personally, although it would be possible, I would rule out an ambisonic panner/decoder because:

- there is no real preferential listening sweet-spot (the listener is free to move around and walk down the corridor);

- it is not a regular ring or dome/semi-dome setup.

I would prefer a ‘simplified’ approach instead, such as the one VBAP (vector base amplitude panning) could provide. However, I don’t think VBAP is for me either since, AFAIK, even VBAP assumes that the speakers are arranged in a regular periphonic or pantophonic setup.

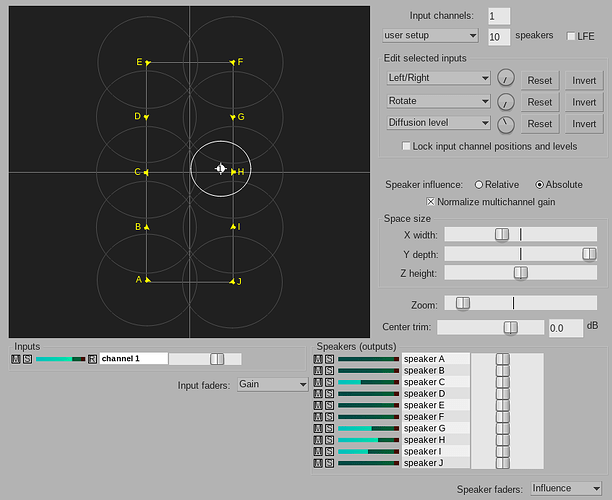

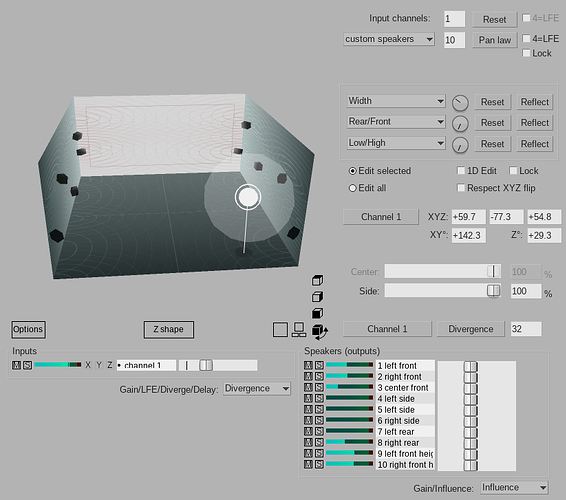

What would be useful to me instead is a system like the one implemented in Reaper’s ReaSurround or ReaSurroundPan plugins, which, among other things, offer controls such as the influence of each speaker and the diffusion/divergence level of the source.

The two images above show a demonstration in which I have placed 5+5 speakers and a mono sound source.

Note: in my setup every speaker points towards the opposite wall, rather than towards the center of the coordinate system.

Which approach do you think would work best?

Is there a decoder/panner inside SC that will do the job out of the box?

If not, are there any externals I can use?

Thank you so much, as always, for your help and suggestions.