This morning, I toyed with optimizing Eli Sam’s Maths2 pseudo-UGen, and this got me thinking about cases like the following:

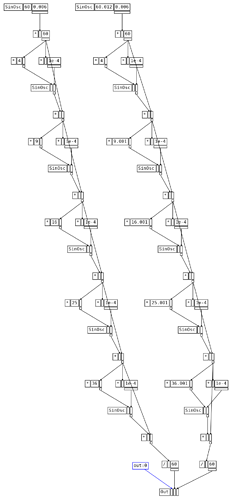

SynthDef(\test, { |out|

var sig = SinOsc.ar(440.dup, 0, 0.1);

Out.ar(out, sig);

}).dumpUGens;

[ 0_Control, control, nil ]

[ 1_SinOsc, audio, [ 440, 0 ] ]

[ 2_*, audio, [ 1_SinOsc, 0.1 ] ]

[ 3_SinOsc, audio, [ 440, 0 ] ]

[ 4_*, audio, [ 3_SinOsc, 0.1 ] ]

[ 5_Out, audio, [ 0_Control[0], 2_*, 4_* ] ]

Ooh, fun, both 3 and 4 are redundant.

I think we could catch these cases:

-

Add a

UGen:isDeterministicflag. (OK, big job to go through hundreds of UGen classes.) -

Add to UGen,

isEquivalent { |that| ... }which returns true if a and b are the same class, and a is deterministic (which implies b to be deterministic as well), and a’s inputs are identical to b’s inputs.- Nondeterministic UGens, even with identical inputs, would have to fail this test.

-

For every UGen that is created, if there is a previously-created equivalent UGen, dump the new one and substitute the old one.

- If this scans linearly through the existing array, execution time would be proportional to N^2, which is bad.

- That could be optimized by keeping an IdentityDictionary of UGen classes, so that a new SinOsc would scan only previously created SinOscs. One step further would be to key *aryOpUGens by the operator name instead of the class, so that

+operators would scan only other+s and not*s.

Just throwing it out there for discussion for now. I have a show in about a week so I don’t have time to work on this right at the moment. But, I think this might be good, especially if the performance impact could be kept to a minimum. The SinOsc.ar(440.dup) case is pretty common, and even experienced users sometimes write the same a * b 10 times in 15 lines. If the system could automatically streamline many of these, I think that would be good.

Comments?

hjh