Hello, I hope this finds everyone well.

I’m still trying to assimilate much of the above info and examples. Incredibly helpful!

I have an example set of code here that I’m using just for audio recording. I’ve actually modified some code (mentioned above) that was written to record ProxySpace nodes. That was working well until I ran into some issues with ProxySpace itself.

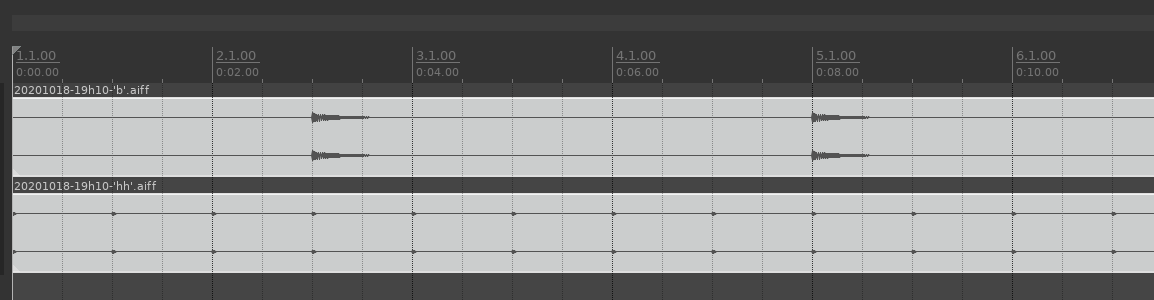

I modified that class to work with Ndefs, which seems to work for the most part. But once again, when I bring these files into another app with the same tempo settings, everything is slightly off the grid.

At this point I can’t tell what I am misunderstanding. Is it latency, or something I’m doing wrong with quantization? If anyone can help I’d be much obliged.

P.S. @droptableuser - using the snippet you showed above a few months ago with a straight multi-channel record seems to give the same results.

I can’t tell if my patterns are drifting somehow, if they are not quantized, or if it is something larger.

This runs a straight beat at 120 bpm (tempo 2). In any case, the recorded files are in sync with each other, but with some sort of offset from the grid. Again, maybe that is latency, or maybe I’m just doing something wrong. I’ve been through the docs and just can’t find what I’m missing.
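For reference, the timing arithmetic behind those numbers can be sketched as below. This is only a rough sketch: the 0.2 s latency is an assumption (it is the documented default of `s.latency` and may not match this setup), the 44100 Hz sample rate is likewise assumed, and nothing here establishes that latency is the actual cause of the offset.

```supercollider
(
// Timing arithmetic only; nothing here touches the server.
var tempo = 2;                              // beats per second, as in TempoClock.default.tempo = 2
var bpm = tempo * 60;                       // beats per minute
var dur = 0.125;                            // \dur from Pdef(\hhseq), in beats
var stepSecs = dur / tempo;                 // seconds between successive events
var latency = 0.2;                          // ASSUMPTION: default s.latency, in seconds
var sampleRate = 44100;                     // ASSUMPTION: recording sample rate
var offsetBeats = latency * tempo;          // what that latency would be in beats
var offsetSamples = latency * sampleRate;   // and in samples
[bpm, stepSecs, offsetBeats, offsetSamples].postln;
)
```

Measuring the gap between the start of a recorded file and its first hit, then comparing it with these figures, would show whether the offset is in that range.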

Class:

NdefRecorder

    NdefRecorder {
        var <nodes;
        var <>folder;
        var <>headerFormat = "aiff", <>sampleFormat = "float";

        /* var dateTime = Date.getDate.format("%Y%m%d-%Hh%M");
        dateTime.postln; */

        *new { |subfolder = nil|
            ^super.newCopyArgs().init(subfolder)
        }

        init { |subfolder = nil|
            nodes = ();
            // recordings go into a folder named after the current document
            if(subfolder != nil,
                { folder = Platform.userAppSupportDir +/+ "Recordings" +/+ Document.current.title +/+ subfolder },
                { folder = Platform.userAppSupportDir +/+ "Recordings" +/+ Document.current.title }
            );
            File.mkdir(folder);
        }

        free {
            nodes.do(_.clear);
            nodes = nil;
        }

        add { |proxies|
            this.prepareNodes(proxies);
            // give the RecNodeProxies time to be created before opening files
            { this.open(proxies) }.defer(0.5);
        }

        prepareNodes { |proxies|
            proxies.do { |proxy, i|
                var n = Ndef(proxy);
                n.play;
                n.postln;
                nodes.add(
                    i -> RecNodeProxy.newFrom(n, 2)
                );
            }
        }

        open { |proxies|
            proxies.do { |proxy, i|
                // %M is minutes; the original used %m, which is the month
                var dateTime = Date.getDate.format("%Y%m%d-%Hh%M");
                var fileName = ("%/%-%.%").format(
                    folder, dateTime, proxy.asCompileString, headerFormat
                );
                nodes[i].open(fileName, headerFormat, sampleFormat);
            }
        }

        record { |paused = false|
            // start all recorders on the default clock with quant -1
            nodes.do(_.record(paused, TempoClock.default, -1))
        }

        stop {
            this.close
        }

        close {
            nodes.do(_.close)
        }

        pause {
            nodes.do(_.pause)
        }

        unpause {
            nodes.do(_.unpause)
        }

        // not implemented yet
        closeOne { |node|
        }
    }

My Simple Ndef Test

    (
    SynthDef(\bplay, {
        arg out = 0, buf = 0, rate = 1, amp = 0.5, pan = 0, pos = 0, rel = 15;
        var sig, env = 1;
        // start position: BufDur * 44100 equals the frame count only for a 44.1 kHz buffer
        sig = Mix.ar(PlayBuf.ar(2, buf, BufRateScale.ir(buf) * rate, 1, BufDur.kr(buf) * pos * 44100, doneAction: 2));
        env = EnvGen.ar(Env.linen(0.0, rel, 0), doneAction: 0);
        sig = sig * env;
        sig = sig * amp;
        Out.ar(out, Pan2.ar(sig.dup, pan));
    }).add;
    )

    (
    // m (the MIDI output) and d (the sample dictionary) are defined elsewhere
    Ndef(\hh).play;

    Pdef(\hhmidi,
        Pbind(
            \type, \midi,
            \midiout, m,
            \midicmd, \noteOn,
            \chan, 1,
    ));

    Pdef(\hhsynth,
        Pbind(
            \instrument, \bplay,
            // Pfunc takes a function, so the bus and group are looked up per event
            \out, Pfunc({ Ndef(\hh).bus.index }),
            \group, Pfunc({ Ndef(\hh).group }),
            \buf, d["Hats"][1],
    ));

    Pdef(\hhseq,
        Pbind(
            // \dur, Pseq([0.25, 0.25, 0.5, 0.77, 0.25].scramble, inf),
            // \dur, Pbjorklund2(Pseq(l, inf).asStream, 12, inf) / 8,
            \dur, 0.125,
    ));
    )

    (
    Pdef(\hh,
        Ppar([
            Pdef(\hhmidi),
            Pdef(\hhsynth),
        ])
        <> PtimeClutch(Pdef(\hhseq))
    );
    )

    Pdef(\hh).play(quant: -1);

    Pdef(\hh).stop;

    (
    TempoClock.default.tempo = 2;
    ~ndefr = NdefRecorder.new('test');
    ~ndefr.add([\hh]);
    )

    ~ndefr.record;

    ~ndefr.stop;

One other note about all of the Pdefs: I’m also attempting to capture MIDI from a pattern, and I got help with that on another thread. That may also be part of the issue.