This is related to this topic.

I’d like to scope signals in SC (SuperCollider), either internal or external ones. I know ScopeView exists, but unfortunately it seems to be implemented in C++, which I don’t really want to touch. I’d like to be able to display RMS/LUFS, and maybe other things such as differences between signals.
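For RMS specifically, the prototype further down already declares `rms` and `rmsWindow` but does not use them yet. One client-side approach is to compute the RMS of one channel over the last `rmsWindow` seconds of the interleaved `peaks` history. The sketch below assumes that layout (interleaved frames, oldest first, non-empty). `~windowRms` is a hypothetical helper, not an existing SC method. LUFS would additionally need K-weighting and gating (ITU-R BS.1770), which this sketch does not attempt.

```supercollider
(
// Hypothetical helper: RMS of channel `chan` over the last `windowSeconds`
// of an interleaved sample array (frame-major, oldest frame first).
~windowRms = { |samples, numChannels, chan, sampleRate, windowSeconds|
    var numFrames = samples.size div: numChannels;
    // Window length in frames, clipped to the available history
    var windowFrames = (windowSeconds * sampleRate).round.asInteger.clip(1, numFrames);
    var firstFrame = numFrames - windowFrames;
    var sumOfSquares = 0;
    windowFrames.do { |i|
        sumOfSquares = sumOfSquares + samples[(firstFrame + i) * numChannels + chan].squared;
    };
    // Root of the mean of the squares
    (sumOfSquares / windowFrames).sqrt
};
)
```

Inside the prototype this could be called as `~windowRms.(peaks, numChannels, 0, server.sampleRate, rmsWindow)`, and `.ampdb` converts the result to dBFS. For long windows, a running sum (or an RMS computed on the server) would avoid rescanning the whole window on every frame.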

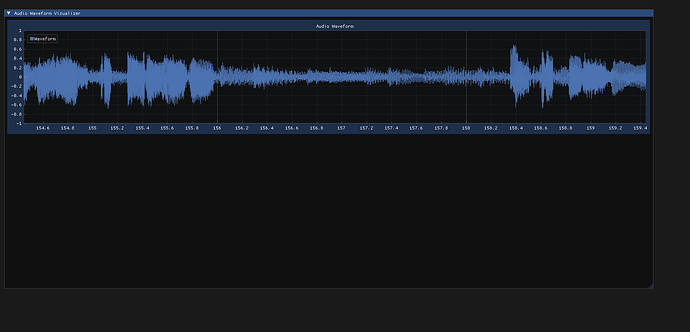

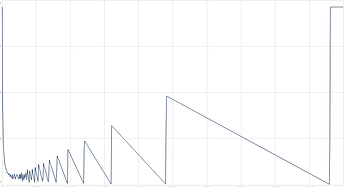

Here’s a working prototype, which works better than expected. For now it only handles external sources and draws one sample per pixel. I still need to figure out the best way to display the data when the view is zoomed out. If anyone knows how software like Audacity does it, that would help!
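A common way to draw a zoomed-out waveform is to split the visible samples into one bucket per pixel column and draw a vertical line from the minimum to the maximum of each bucket. As far as I know, Audacity does this and shades the RMS of each column in a lighter colour on top. Below is a sketch of the min/max reduction, assuming the interleaved, oldest-first `peaks` layout used in the prototype and a non-empty history. `~minMaxColumns` is a hypothetical helper name.

```supercollider
(
// Hypothetical helper: reduce the last `numFrames` frames of channel `chan`
// of an interleaved array to one [min, max] pair per pixel column.
~minMaxColumns = { |samples, numChannels, chan, numFrames, numColumns|
    var totalFrames = samples.size div: numChannels;
    var firstFrame = (totalFrames - numFrames).max(0);
    // May be fractional: buckets then hold a varying number of frames
    var framesPerColumn = (totalFrames - firstFrame) / numColumns;
    Array.fill(numColumns, { |col|
        var start = firstFrame + (col * framesPerColumn).floor.asInteger;
        // Every column covers at least one frame, even when zoomed in
        var end = (firstFrame + ((col + 1) * framesPerColumn).floor.asInteger).max(start + 1);
        var lo = inf, hi = inf.neg;
        (start..(end - 1)).do { |frame|
            var value = samples[frame * numChannels + chan];
            lo = lo.min(value);
            hi = hi.max(value);
        };
        [lo, hi]
    })
};
)
```

In `drawFunc`, with `numColumns` set to the view width, each pair `[lo, hi]` can then be drawn as one line per column, from `center - ((ySize / 2) * hi)` to `center - ((ySize / 2) * lo)`. Because the per-column cost no longer depends on how many samples are visible only at draw time, caching these pairs per chunk (as `updateData` receives them) would keep drawing cheap at any zoom level.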

To anyone interested,

Regards,

Simon

```supercollider
(
// Parameters
var numChannels = 2;       // 1 (mono) or 2 (stereo)
var plotMaxLength = 2.0;   // seconds of history kept on the client
var externalSource = true; // true: hardware inputs, false: internal bus
var inputChannel = 0;      // first hardware input (external source only)

// Advanced parameters
var latencyOffset = 1.2;   // not used yet
var server = s;
var chunkSize = 1024;      // frames per chunk fetched by the client
var rmsWindow = 0.3;       // seconds, not used yet
var numChunks = 8;         // number of chunks in the ring buffer
var viewFps = 60;

// Internal
var win = Window(), view = UserView();
var oscFunc, synth, buffer, bus;
var peaks, rms;
var initSynthDefs, initBuffer, onBufferReady, updateData, initUI;

// The four SynthDefs only differ in their input. Each one writes its input
// into a ring buffer of `numChunks` chunks and sends '/bufferUpdate'
// every time it starts writing a new chunk.
initSynthDefs = {
    SynthDef(\externalScopeMono, { |in, out, chunkSize, numChunks|
        var input = SoundIn.ar(in);
        var phase = Phasor.ar(0, 1, 0, chunkSize);
        // HPZ1 is only negative when the phasor wraps around to 0
        var trig = HPZ1.ar(phase) < 0;
        // Index of the chunk currently being written
        var partition = PulseCount.ar(trig) % numChunks;
        BufWr.ar(input, out, phase + (chunkSize * partition));
        SendReply.ar(trig, '/bufferUpdate', partition);
    }).add;

    SynthDef(\internalScopeMono, { |in, out, chunkSize, numChunks|
        var input = In.ar(in);
        var phase = Phasor.ar(0, 1, 0, chunkSize);
        var trig = HPZ1.ar(phase) < 0;
        var partition = PulseCount.ar(trig) % numChunks;
        BufWr.ar(input, out, phase + (chunkSize * partition));
        SendReply.ar(trig, '/bufferUpdate', partition);
    }).add;

    SynthDef(\externalScopeStereo, { |in, out, chunkSize, numChunks|
        var input = SoundIn.ar([in, in + 1]);
        var phase = Phasor.ar(0, 1, 0, chunkSize);
        var trig = HPZ1.ar(phase) < 0;
        var partition = PulseCount.ar(trig) % numChunks;
        BufWr.ar(input, out, phase + (chunkSize * partition));
        SendReply.ar(trig, '/bufferUpdate', partition);
    }).add;

    SynthDef(\internalScopeStereo, { |in, out, chunkSize, numChunks|
        var input = In.ar([in, in + 1]);
        var phase = Phasor.ar(0, 1, 0, chunkSize);
        var trig = HPZ1.ar(phase) < 0;
        var partition = PulseCount.ar(trig) % numChunks;
        BufWr.ar(input, out, phase + (chunkSize * partition));
        SendReply.ar(trig, '/bufferUpdate', partition);
    }).add;
};

initUI = {
    view
    .animate_(true)
    .frameRate_(viewFps)
    .drawFunc_({
        var width = view.bounds.width.asInteger;
        var ySize = view.bounds.height / numChannels;
        // One sample per pixel: show the most recent `width` frames
        var firstFrame = (peaks.size div: numChannels) - width;
        Pen.strokeColor_(Color.red);
        width.do({ |index|
            numChannels.do({ |chan|
                var center = ySize * chan + (ySize / 2);
                // `? 0` covers indices before the start of the history
                var value = peaks[(firstFrame + index) * numChannels + chan] ? 0;
                Pen.moveTo(Point(index, center));
                Pen.lineTo(Point(index, center - ((ySize / 2) * value)));
            });
        });
        // Stroke all lines at once instead of once per line
        Pen.stroke;
    });
};

updateData = { |data|
    // Drop the oldest samples and append the new chunk at the end
    peaks = peaks.rotate(data.size.neg);
    data.do({ |value, index|
        peaks[peaks.size - data.size + index] = value;
    });
};

initBuffer = {
    // Buffer.alloc's 4th argument is a completion *message*, not a callback,
    // so we allocate here and wait with server.sync instead
    buffer = Buffer.alloc(server, chunkSize * numChunks, numChannels);
    if(externalSource.not) {
        // Route the signals you want to scope into this bus
        bus = Bus.audio(server, numChannels);
        "Scoping audio bus %".format(bus.index).postln;
    };
};

onBufferReady = {
    var defName = switch(numChannels)
        { 1 } { if(externalSource) { \externalScopeMono } { \internalScopeMono } }
        { 2 } { if(externalSource) { \externalScopeStereo } { \internalScopeStereo } };

    // addToTail so that an internal bus is read after its sources have written to it
    synth = Synth(defName, [
        \in, if(externalSource) { inputChannel } { bus.index },
        \out, buffer,
        \chunkSize, chunkSize,
        \numChunks, numChunks
    ], server, \addToTail);

    oscFunc = OSCFunc({ |msg|
        // msg[3] is the chunk that has just started: fetch the one that was just completed
        var partition = (msg[3].asInteger - 1) % numChunks;
        buffer.getn(
            partition * chunkSize * numChannels, // offset in interleaved samples
            chunkSize * numChannels,
            updateData
        );
    }, '/bufferUpdate', server.addr);
};

// Boot server (waitForBoot runs its function in a routine, so server.sync works)
server.waitForBoot({
    // Interleaved history, oldest frame first
    var historySize = (plotMaxLength * server.sampleRate).roundUp.asInteger * numChannels;
    peaks = Array.fill(historySize, 0);
    rms = Array.fill(historySize, 0);

    initSynthDefs.value;
    initBuffer.value;
    // Wait until the SynthDefs are loaded and the buffer is allocated
    server.sync;

    initUI.value;
    onBufferReady.value;
});

win.layout_(
    VLayout()
    .margins_(0)
    .spacing_(0)
    .add(view)
);

win.onClose_({
    synth.free;
    buffer.free;
    oscFunc.free;
    if(externalSource.not) {
        bus.free;
    };
});

CmdPeriod.doOnce({
    win.close;
});

win.front;
)
```
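The four scope SynthDefs are identical except for the input UGen. As a sketch, they could be generated from one template so that a fix only has to be made once. `makeScopeDef` is a hypothetical local name. The resulting defs are intended to keep the same names and arguments as the hand-written ones (`In.ar(in, 2)` reads the same two buses as `In.ar([in, in + 1])`), so the rest of the script should not need to change.

```supercollider
(
// Sketch: build the four scope SynthDefs from one template.
// Only the function that produces the input signal differs.
var makeScopeDef = { |name, inputFunc|
    SynthDef(name, { |in, out, chunkSize, numChunks|
        var input = inputFunc.(in);
        var phase = Phasor.ar(0, 1, 0, chunkSize);
        // HPZ1 is only negative when the phasor wraps around to 0
        var trig = HPZ1.ar(phase) < 0;
        var partition = PulseCount.ar(trig) % numChunks;
        BufWr.ar(input, out, phase + (chunkSize * partition));
        SendReply.ar(trig, '/bufferUpdate', partition);
    }).add;
};

makeScopeDef.(\externalScopeMono, { |in| SoundIn.ar(in) });
makeScopeDef.(\internalScopeMono, { |in| In.ar(in, 1) });
makeScopeDef.(\externalScopeStereo, { |in| SoundIn.ar([in, in + 1]) });
makeScopeDef.(\internalScopeStereo, { |in| In.ar(in, 2) });
)
```

Adding more channel counts then only means adding lines to this list rather than copying a whole SynthDef.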